Besides being called the sexiest job of the twenty-first century, Data Science is often described as the new electricity, a phrase Andrew Ng used for AI. Many professionals from various disciplines and domains are looking to move into analytics and use Data Science to solve problems across multiple channels. Because it is an interdisciplinary field, practitioners can mine data for many kinds of operations and help decision-makers draw relevant conclusions to achieve sustainable growth.

The field of Data Science comprises components such as Data Analysis, Machine Learning, Deep Learning, and Business Intelligence. How they are applied differs according to the needs of the business and its workflow. In a corporate firm, a Data Science project usually involves people with diverse skill sets, because different people need to take care of the various details.

Now the question may arise: what is Data Science? Data Science is a way of using several tools and techniques to mine relevant data so that a business can derive insights and take appropriate decisions. Analytics can be divided into Descriptive and Predictive Analytics. Descriptive analytics deals with cleaning, munging, wrangling and presenting the data to stakeholders in the form of charts and graphs, whereas predictive analytics is about building robust models that predict future scenarios.

In this blog, we will talk about exploratory data analysis, which is in one sense a descriptive analysis process and one of the most important parts of a Data Science project. Before you start building models, your data should be accurate, with no anomalies, duplicates, missing values and so on. It should also be properly analysed to find the features that are most relevant for prediction.

Exploratory Data Analysis in Python

Python is one of the most flexible programming languages and has a plethora of uses. There is a long-running debate over whether Python or R is better for Data Science. In my opinion, there is no single right language; it depends entirely on the individual. I personally prefer Python because of its ease of use and its broad range of features. For exploring data in particular, Python provides many intuitive libraries for working with the data and analysing it from all directions.

To perform exploratory data analysis, we will use a house pricing dataset, which poses a regression problem. The dataset can be downloaded from here.

Below is the description of the columns in the data.

- SalePrice – This is our target variable, which we need to predict from the rest of the features. It is the sale price of each property in dollars.

- MSSubClass – It is the class of the building.

- MSZoning – The zones are classified in this column.

- LotFrontage – The linear feet of street connected to the property.

- LotArea – The size of the lot in square feet.

- Street – The road access type.

- Alley – The alley access type.

- LotShape – The property shape in general.

- LandContour – The property flatness is defined by this column.

- Utilities – The type of utilities available.

- LotConfig – The lot configuration.

- LandSlope – The property slope.

- Neighborhood – The physical location within the Ames city limits.

- Condition1 – Proximity to a main road or railroad.

- Condition2 – Proximity to a main road or railroad, if a second one is present.

- BldgType – The dwelling type.

- HouseStyle – The dwelling style.

- OverallQual – The overall material and finish quality.

- OverallCond – The rating of the overall condition.

- YearBuilt – The date of the original construction.

- YearRemodAdd – The date of the remodel.

- RoofStyle – The roof type.

- RoofMatl – The material of the roof.

- Exterior1st – The exterior covering on the house.

- Exterior2nd – The exterior covering on the house, if more than one material is present.

- MasVnrType – The type of the Masonry veneer.

- MasVnrArea – The area of the Masonry veneer.

- ExterQual – The quality of the exterior material.

- ExterCond – The present condition of the material on the exterior.

- Foundation – The foundation type.

- BsmtQual – The basement height.

- BsmtCond – The basement condition in general.

- BsmtExposure – Walkout or garden-level basement walls.

- BsmtFinType1 – The quality of the basement finished area.

- BsmtFinSF1 – The Type 1 finished area in square feet.

- BsmtFinType2 – The quality of the second finished area, if present.

- BsmtFinSF2 – The Type 2 finished area in square feet.

- BsmtUnfSF – The unfinished basement area in square feet.

- TotalBsmtSF – The area of the basement.

- Heating – The heating type.

- HeatingQC – The condition and the quality of heating.

- CentralAir – The central air conditioning.

- Electrical – The electrical system.

- 1stFlrSF – The area of the first floor.

- 2ndFlrSF – The area of the second floor.

- LowQualFinSF – The area of all low quality finished floors.

- GrLivArea – The above-grade (ground) living area.

- BsmtFullBath – The number of full bathrooms in the basement.

- BsmtHalfBath – The number of half bathrooms in the basement.

- FullBath – The number of full bathrooms above grade.

- HalfBath – The number of half bathrooms above grade.

- Bedroom – The number of bedrooms above basement level.

- Kitchen – The number of kitchens.

- KitchenQual – The quality of the kitchen.

- TotRmsAbvGrd – The total number of rooms above ground, excluding bathrooms.

- Functional – The rating of home functionality.

- Fireplaces – The number of fireplaces.

- FireplaceQu – The quality of the fireplace.

- GarageType – The location of the Garage.

- GarageYrBlt – The year the garage was built.

- GarageFinish – The garage’s interior finish.

- GarageCars – The size of the garage in car capacity.

- GarageArea – The area of the garage.

- GarageQual – The quality of the garage.

- GarageCond – The condition of the garage.

- WoodDeckSF – The area of the wood deck.

- OpenPorchSF – The area of the open porch.

- EnclosedPorch – The area of the enclosed porch.

- 3SsnPorch – The area of the three season porch.

- ScreenPorch – The area of the screen porch.

- PoolArea – The area of the pool.

- PoolQC – The quality of the pool.

- Fence – The quality of the fence.

- MiscFeature – Miscellaneous features.

- MiscVal – The miscellaneous feature value in dollars.

- MoSold – The selling month.

- YrSold – The selling year.

- SaleType – The type of the sale.

- SaleCondition – The sale condition.

As you can see, it is a high-dimensional dataset with a lot of variables, but not all of these columns would be used in our prediction, because the model could then suffer from multicollinearity. Below are some of the basic exploratory data analysis steps we could perform on this dataset.

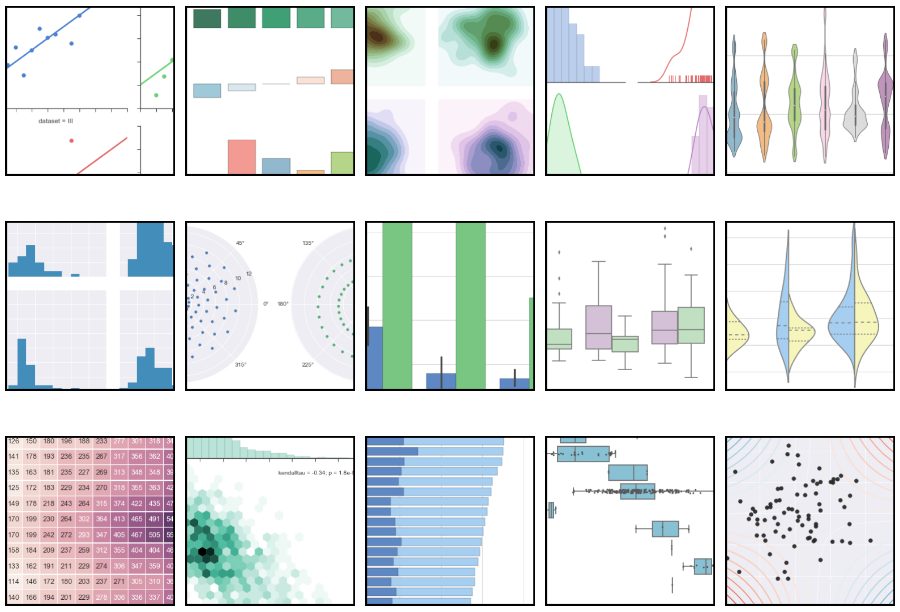

source: Cambridge Spark

The libraries are imported using the following commands:

import pandas as pd

import seaborn as sns

import matplotlib.pyplot as plt

- To read the dataset, which is in CSV (comma-separated values) format, we would use the read_csv function of pandas and load it into a data frame.

df = pd.read_csv('…/input/train.csv')
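Since the path above is elided, one way to try the same call without the real file is to parse a small in-memory CSV. The sketch below is only an illustration: the rows are made up, only a handful of the dataset's column names are used, and `sample` is a hypothetical name that does not appear in the original notebook.

```python
import io

import pandas as pd

# Made-up miniature of the housing data, for illustration only.
csv_text = """Id,LotArea,Street,Alley,SalePrice
1,8000,Pave,,200000
2,9500,Pave,,185000
3,7200,Grvl,Grvl,172000
"""

# read_csv accepts a path or any file-like object, so StringIO stands in for train.csv.
sample = pd.read_csv(io.StringIO(csv_text))

print(sample.shape)   # (number of rows, number of columns)
print(sample.head())  # the first rows (at most five)
sample.info()         # column names, non-null counts and datatypes
```

Empty fields, such as the first two Alley entries, are read as missing values (NaN), which is why the non-null counts reported by info() can differ from column to column.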

- The head() command would display the first five rows of the dataset.

- The info() command would give an idea about the number of non-null values in each column along with their datatypes.

- To drop irrelevant features and columns in which fewer than 30 percent of the values are present (that is, more than 70 percent are missing), the below code is used.

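# Keep only the columns in which at least 30 percent of the values are non-null
df2 = df[[column for column in df if df[column].count() / len(df) >= 0.3]]

# The Id column is only an identifier, so drop it as well
del df2['Id']

print("List of dropped columns:", end=" ")

for c in df.columns:
    if c not in df2.columns:
        print(c, end=", ")

print('\n')

df = df2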
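The same filtering step can also be wrapped in a small helper so the threshold is explicit and the original frame is left untouched. This is a minimal sketch under stated assumptions: `drop_sparse_columns`, `min_filled`, and `always_drop` are hypothetical names introduced here, and the toy frame is made up.

```python
import numpy as np
import pandas as pd


def drop_sparse_columns(frame, min_filled=0.3, always_drop=("Id",)):
    """Return (kept_frame, dropped_names).

    min_filled is the minimum share of non-null values a column needs to be kept.
    """
    filled_share = frame.notna().mean()  # per-column share of non-null values
    keep = filled_share[filled_share >= min_filled].index
    kept = frame[keep].drop(columns=list(always_drop), errors="ignore")
    dropped = [c for c in frame.columns if c not in kept.columns]
    return kept, dropped


# Made-up toy frame: PoolQC is filled in only 1 of 5 rows (20 percent).
toy = pd.DataFrame({
    "Id": [1, 2, 3, 4, 5],
    "LotArea": [8000, 9500, 7200, 10100, 8800],
    "PoolQC": [np.nan, np.nan, "Gd", np.nan, np.nan],
    "SalePrice": [200000, 185000, 172000, 240000, 210000],
})

kept, dropped = drop_sparse_columns(toy)
print("Dropped columns:", ", ".join(dropped))
```

Using notna().mean() gives the same ratio as count() / len(df) in the code above, computed for all columns at once.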

- The describe() command would give a statistical description of all the numeric features. The statistics include the count, mean, standard deviation, minimum, first quartile, median, third quartile, and maximum.

- dtypes gives the datatypes of all the columns.

- To find the correlation between each pair of features, the corr() command is used. It not only helps in identifying which feature columns are most strongly correlated with the target but also helps to observe multicollinearity among the features and avoid it.
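The inspection commands listed above can be tried together on a small frame. The sketch below is an illustration only: the data is made up, GarageCars and GarageArea are deliberately constructed as near-duplicates, and the 0.9 cut-off is an arbitrary choice rather than a rule from this article.

```python
import pandas as pd

# Made-up toy frame; GarageCars and GarageArea are deliberately near-duplicates.
toy = pd.DataFrame({
    "GrLivArea": [1200, 1500, 1700, 2100, 2500, 900],
    "GarageCars": [1, 2, 2, 3, 3, 1],
    "GarageArea": [250, 480, 500, 720, 750, 240],
    "Street": ["Pave", "Pave", "Grvl", "Pave", "Pave", "Pave"],
    "SalePrice": [150000, 190000, 205000, 260000, 310000, 120000],
})

print(toy.dtypes)      # datatype of each column
print(toy.describe())  # count, mean, std, min, quartiles and max of numeric columns

# Select numeric columns explicitly so corr() behaves the same across pandas versions.
numeric = toy.select_dtypes(include="number")
corr = numeric.corr()  # Pearson correlation by default

# Features ranked by the strength of their correlation with the target.
target_corr = corr["SalePrice"].drop("SalePrice").abs().sort_values(ascending=False)
print(target_corr)

# Feature pairs that are strongly correlated with each other (possible multicollinearity).
features = corr.drop(index="SalePrice", columns="SalePrice")
threshold = 0.9  # illustrative cut-off
collinear_pairs = [
    (a, b, round(features.loc[a, b], 3))
    for i, a in enumerate(features.columns)
    for b in features.columns[i + 1:]
    if abs(features.loc[a, b]) > threshold
]
print(collinear_pairs)
```

When two features appear in such a pair, a common choice is to keep the one that is more strongly correlated with the target and drop the other.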

There are other operations such as value_counts(), which, applied to a single column, gives the count of every unique value in that feature. Moreover, to fill the missing values, we could use the fillna command.
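As a rough illustration of these two operations, the sketch below counts categories and fills gaps on a made-up frame. The filling strategy (median for numeric columns, most frequent value for categorical ones) is an assumption chosen for the example, not a recommendation taken from the notebook.

```python
import numpy as np
import pandas as pd

# Made-up toy frame with gaps in one categorical and one numeric column.
toy = pd.DataFrame({
    "MSZoning": ["RL", "RM", "RL", np.nan, "RL"],
    "LotFrontage": [65.0, np.nan, 80.0, 70.0, np.nan],
})

# value_counts() on one column counts each distinct value (NaN is excluded by default).
zoning_counts = toy["MSZoning"].value_counts()
print(zoning_counts)

# isna().sum() shows how many values are missing in each column.
print(toy.isna().sum())

# Assumed strategy: median for the numeric column, most frequent value for the categorical one.
filled = toy.copy()
filled["LotFrontage"] = filled["LotFrontage"].fillna(filled["LotFrontage"].median())
filled["MSZoning"] = filled["MSZoning"].fillna(filled["MSZoning"].mode()[0])
print(filled)
```

Working on a copy keeps the original frame available for comparison, which is useful when deciding whether a filling strategy distorts the distribution of a feature.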

The entire notebook is available here.

For efficient analysis of data, what matters most, beyond the skill to use tools and techniques, is your intuition about the data. Understanding the problem statement is the first step of any Data Science project, followed by formulating the necessary questions from it. Exploratory data analysis can be performed well only when you know which questions need to be answered, because that is how the relevance of the data is validated.

I have seen professionals jump into Machine Learning and Deep Learning and focus on state-of-the-art models while forgetting or skipping the most rigorous and time-consuming part, which is exploratory data analysis. Without proper EDA, it is difficult to get good predictions, and your model could suffer from underfitting or overfitting. A model underfits when it is too simple and has high bias, resulting in high errors on both the training and test sets. An overfit model, in contrast, has high variance and fails to generalize well to unseen data.
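The difference between underfitting and overfitting can be seen on a toy problem. The sketch below is only an illustration under stated assumptions: the data is synthetic (noisy samples of a quadratic curve), polynomials fitted with NumPy stand in for real models, and the helper names `make_data` and `mse` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)


def make_data(n):
    """Noisy samples from a quadratic curve (synthetic, for illustration only)."""
    x = rng.uniform(-1.0, 1.0, n)
    y = x ** 2 + rng.normal(0.0, 0.05, n)
    return x, y


def mse(coeffs, x, y):
    """Mean squared error of a fitted polynomial on the points (x, y)."""
    return float(np.mean((np.polyval(coeffs, x) - y) ** 2))


x_train, y_train = make_data(12)   # small training set
x_test, y_test = make_data(200)    # unseen data from the same curve

results = {}
for degree, label in [(0, "underfit"), (2, "reasonable"), (9, "overfit")]:
    coeffs = np.polyfit(x_train, y_train, degree)  # least-squares polynomial fit
    results[label] = {
        "train": mse(coeffs, x_train, y_train),
        "test": mse(coeffs, x_test, y_test),
    }
    print(f"{label:>10} (degree {degree}): "
          f"train MSE = {results[label]['train']:.5f}, "
          f"test MSE = {results[label]['test']:.5f}")
```

The constant model (degree 0) is too simple to follow the curve, so its error stays high on both sets. The degree 9 polynomial chases the noise in the twelve training points, so its training error is very small while its error on the unseen points is larger.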
