This article was written by Pranjal Srivastava.

Sequence prediction problems have been around for a long time. They are considered one of the hardest problems to solve in the data science industry. They span a wide range of tasks: from predicting sales to finding patterns in stock market data, from understanding movie plots to recognizing your speech, from translating between languages to predicting the next word on your iPhone’s keyboard.

With the recent breakthroughs in data science, Long Short-Term Memory networks, a.k.a. LSTMs, have been found to be the most effective solution for almost all of these sequence prediction problems.

LSTMs have an edge over conventional feed-forward neural networks and RNNs in many ways, largely because of their ability to selectively remember patterns over long durations. The purpose of this article is to explain LSTMs and enable you to use them in real-life problems. Let’s have a look!
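The "selective remembering" can be made concrete by looking at how much of a gradient survives many time steps. The following is a toy sketch, assuming scalar states, zero inputs, and hand-picked values (a recurrent weight `w = 0.9` and a forget gate of `0.99`, both hypothetical). In a vanilla RNN the gradient from step T back to step 0 is a product of `w * (1 - h**2)` terms, while along an LSTM's cell state it is just a product of forget-gate values, which the network can learn to keep close to 1.

```python
import math


def rnn_gradient(h0: float, w: float, steps: int) -> float:
    """d h_T / d h_0 for a scalar vanilla RNN h_t = tanh(w * h_{t-1}), inputs set to zero."""
    h, grad = h0, 1.0
    for _ in range(steps):
        h = math.tanh(w * h)
        # Chain rule: d tanh(w * h_prev) / d h_prev = w * (1 - h_t^2)
        grad *= w * (1.0 - h * h)
    return grad


def lstm_cell_gradient(forget_gates: list[float]) -> float:
    """d c_T / d c_0 along the cell-state path c_t = f_t * c_{t-1} + i_t * g_t.

    Toy assumption: the gate values are treated as constants, so each step
    scales the gradient only by that step's forget gate.
    """
    grad = 1.0
    for f in forget_gates:
        grad *= f
    return grad


steps = 50
print(f"vanilla RNN gradient after {steps} steps: {rnn_gradient(h0=0.5, w=0.9, steps=steps):.2e}")
print(f"LSTM cell-state gradient after {steps} steps: {lstm_cell_gradient([0.99] * steps):.3f}")
```

Only the direct cell-state path is considered here; a full analysis would also include the gates' own dependence on earlier hidden states.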

Note: To follow this article, you should have a basic knowledge of neural networks and of how Keras (a deep learning library) works.

Table of Contents

- Flashback: A look into Recurrent Neural Networks (RNN)

- Limitations of RNNs

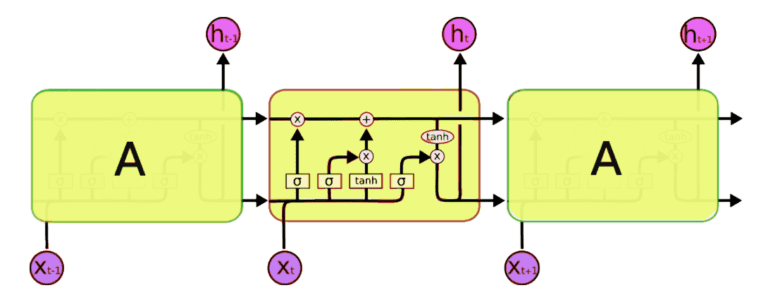

- Improvement over RNN: Long Short-Term Memory (LSTM)

- Architecture of LSTM

  - Forget Gate

  - Input Gate

  - Output Gate

- Text generation using LSTMs
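The three gates in the outline combine in a single LSTM time step. As a minimal sketch, assuming the common formulation without peephole connections and using plain Python lists instead of Keras so every gate is visible, one step could look like the code below. The helper functions and parameter names (`W_f`, `b_f`, `W_c` and so on) are hypothetical; in practice Keras's `LSTM` layer performs this computation for you.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def affine(W: list[list[float]], b: list[float], v: list[float]) -> list[float]:
    """Compute W @ v + b for a list-of-lists matrix W."""
    return [sum(w_ij * v_j for w_ij, v_j in zip(row, v)) + b_i for row, b_i in zip(W, b)]


def lstm_step(x, h_prev, c_prev, params):
    """One LSTM time step; returns the new hidden state h_t and cell state c_t."""
    z = h_prev + x  # list concatenation: [h_{t-1}, x_t]
    f = [sigmoid(a) for a in affine(params["W_f"], params["b_f"], z)]    # forget gate: what to keep from c_{t-1}
    i = [sigmoid(a) for a in affine(params["W_i"], params["b_i"], z)]    # input gate: how much new info to write
    g = [math.tanh(a) for a in affine(params["W_c"], params["b_c"], z)]  # candidate values to write
    o = [sigmoid(a) for a in affine(params["W_o"], params["b_o"], z)]    # output gate: what to expose as h_t
    c = [f_k * c_k + i_k * g_k for f_k, c_k, i_k, g_k in zip(f, c_prev, i, g)]
    h = [o_k * math.tanh(c_k) for o_k, c_k in zip(o, c)]
    return h, c


def init_params(hidden: int, inputs: int, seed: int = 0) -> dict:
    """Hypothetical initializer: small random weights, zero biases."""
    rng = random.Random(seed)
    params = {}
    for gate in ("f", "i", "c", "o"):
        params[f"W_{gate}"] = [
            [rng.uniform(-0.5, 0.5) for _ in range(hidden + inputs)] for _ in range(hidden)
        ]
        params[f"b_{gate}"] = [0.0] * hidden
    return params


# Usage: run a short 3-step sequence of 2-dimensional inputs through a 3-unit cell.
params = init_params(hidden=3, inputs=2)
h, c = [0.0] * 3, [0.0] * 3
for x in ([1.0, 0.0], [0.0, 1.0], [1.0, 1.0]):
    h, c = lstm_step(x, h, c, params)
print("h:", h)
print("c:", c)
```

The cell state `c` is updated additively (`f * c_prev + i * g`), which is what lets an LSTM carry information across many steps when the forget gate stays open and the input gate stays closed.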

To read the rest of the article, with illustrations, click here.