Interview with John McDonald

I was deep in designing a new cognitive memory system when I first read about the Model Context Protocol (MCP), and I realized right away that I needed to integrate it into my workflow. This open standard from Anthropic wasn’t just another protocol; it was the missing link to creating truly interoperable, intelligent systems. On this episode of the AI Think Tank, I sat down with my longtime colleague and product leader John McDonald to dig deep into what MCP means for developers, enterprises, and AI agents.

John has spent two decades at the intersection of data, APIs, and enterprise platforms with a resume that includes CVS, Athena Health, and Constant Contact. We go way back, from our early careers in Redmond to MIT’s intensive AI bootcamps. So when MCP hit the scene, we knew it would be a catalyst for the next wave of LLM interoperability.

From SQL to MCP: History echoes

We kicked off the conversation with some nostalgia. John recounted his early career at a database company called Microrim, where he was first introduced to SQL. “Back then, ODBC changed the game,” he said. “You could develop an app once and connect it to any database that supported the protocol.” That’s exactly what MCP promises for language models today.

It’s no secret that many AI systems today suffer from poor interoperability and fragmented context handling. “This reminds me of the early client-server days,” John added. “Everyone had their own integration method, and it was a mess until we aligned on standards.” MCP echoes ODBC and USB-C, but for LLMs. It’s a way to decouple the application from the data source and standardize how context is shared across tools and services.

The death of RAG? Maybe not, but it’s evolving

MCP arrives as Retrieval-Augmented Generation (RAG) reaches a crossroads. “RAG is on its way out,” I said during our talk. “It’s no longer a competitive advantage. It’s just assumed now.” John and I have been experimenting with context injection, fine-tuning, and agents for a long time, but RAG was always a bandage. MCP offers something better: a framework.

We agreed that large context windows aren’t enough on their own. It’s not just about how much data you can cram in; it’s about how intelligently that data is managed. “The quality is only as good as the context you provide,” John reminded us. “It’s like giving a document to someone and saying, ‘Summarize this,’ without ever letting them reread it later.” This is where MCP shines.

MCP: The missing context layer

So, what is MCP, practically speaking?

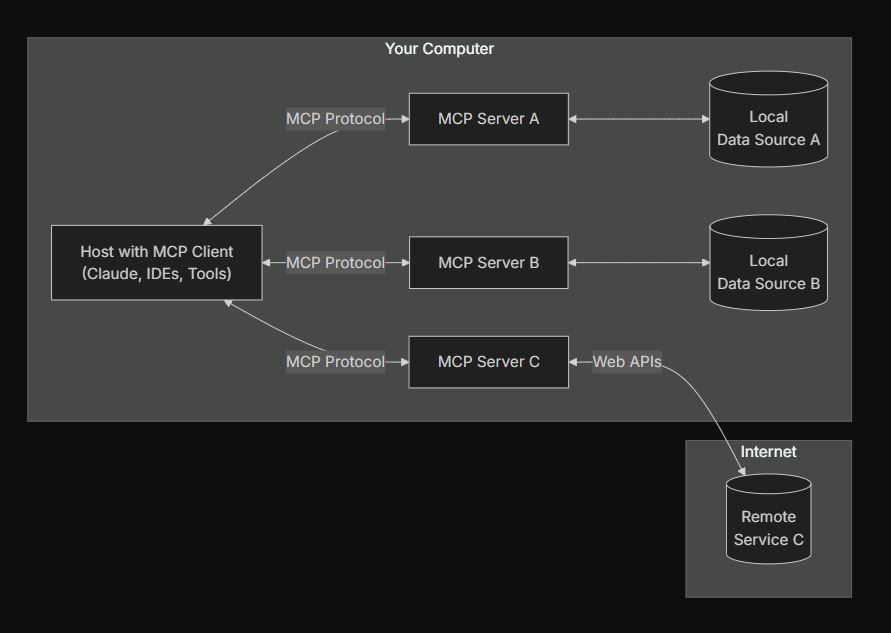

It’s a server-based open standard that enables bi-directional communication between LLMs and external systems. Each server represents a context source: a database, an API, a file system, or a tool such as GitHub, Gmail, or Salesforce. An agent can query these servers dynamically to build or refresh its context.

“You don’t need to know what’s inside the black box,” John said. “If the server supports MCP, you can connect and pull or push context in a standardized way.” This dramatically reduces integration complexity. Developers no longer have to write unique code for every system they touch.
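To make the "standardized way" concrete, here is a minimal sketch of what a client sends. MCP messages are JSON-RPC 2.0, and `tools/list` and `tools/call` are method names from the MCP specification. The tool name `query_inventory` and its arguments are hypothetical, and the sketch only builds the messages; it does not open a connection or perform the initialization handshake.

```python
import json
from itertools import count
from typing import Any, Dict, Optional

_ids = count(1)  # monotonically increasing JSON-RPC request ids


def make_request(method: str, params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 request envelope."""
    request: Dict[str, Any] = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        request["params"] = params
    return request


# Ask the server which tools it exposes.
list_tools = make_request("tools/list")

# Invoke one tool by name. The tool and its arguments are hypothetical.
call_tool = make_request(
    "tools/call",
    {"name": "query_inventory", "arguments": {"store_id": "store-1"}},
)

print(json.dumps(call_tool, indent=2))
```

The point of the sketch is that the envelope stays the same whether the server fronts a database, a file system, or a SaaS API; only the tool name and arguments change.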

We explored how MCP separates the model, the context, and the tools in a clean, modular architecture. Context becomes first-class, on par with prompts and tools. Anthropic even describes MCP as a way to “augment LLMs through a loop.” Whether it’s agentic reasoning, dynamic memory, or API orchestration, MCP is the glue that holds it all together.

Agent awareness: The real paradigm shift

One of the most exciting developments in AI has been the rise of agents, software constructs that autonomously execute tasks using LLMs, tools, and context. MCP gives these agents memory. Not just a static prompt or a one-shot query, but a dynamic, evolvable context they can query, flush, or refresh at will.

“You can have agents call back to the LLM for inference, but with rules,” John explained. “Like, don’t exceed this token limit, or only use cheaper models for non-critical tasks.” This is a game changer for budget control and reliability. Instead of always invoking the most expensive model, agents can choose their tools intelligently.
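The kind of rule John describes can be sketched as a small policy object that decides which model to use and how many tokens to allow. This is an illustration, not part of MCP or any SDK: `InferencePolicy`, `plan_call`, and the model names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Union


@dataclass(frozen=True)
class InferencePolicy:
    """Hypothetical guardrails an agent must respect when requesting inference."""
    max_tokens: int      # hard cap on tokens per request
    cheap_model: str     # used for non-critical tasks
    premium_model: str   # reserved for critical tasks


def plan_call(policy: InferencePolicy, requested_tokens: int, critical: bool) -> Dict[str, Union[str, int]]:
    """Pick a model and clamp the token request according to the policy."""
    model = policy.premium_model if critical else policy.cheap_model
    tokens = min(requested_tokens, policy.max_tokens)
    return {"model": model, "max_tokens": tokens}


policy = InferencePolicy(max_tokens=1024, cheap_model="small-model", premium_model="large-model")

# A non-critical task asking for too many tokens is routed to the cheap model and clamped.
print(plan_call(policy, requested_tokens=4000, critical=False))
# {'model': 'small-model', 'max_tokens': 1024}
```

Keeping the policy outside the agent means the budget can be changed in one place without touching agent logic.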

From my own work, I’ve seen this emerge firsthand. I built a cognitive memory system that mimicked episodic memory. It auto-journaled, tracked sentiment, and knew how to anchor itself to a directive. “I want you to build XYZ software,” I’d tell it, and it would hold on to that mission across sessions. Now, with MCP, I can decouple that memory system from the model and connect it to any context-aware agent.

Standards as enablers, not constraints

A theme throughout our conversation was how standards like MCP unlock innovation, not stifle it. “You don’t want to spend all your time on integration,” John said. “Let’s focus on the tasks, not how to fetch the data.” That’s what drove REST to replace SOAP, and it’s what will make MCP essential in the agent era.

We also drew parallels to the Language Server Protocol (LSP), which enabled IDEs to support multiple programming languages. “That protocol did for code editors what MCP is doing for context,” he said. “Once you have a common language, you can plug in any capability.”

In fact, one of the first killer apps of MCP is expected to be developer tools. IDEs, Copilot-like agents, and testing frameworks can all benefit from an intelligent, standardized way of accessing build logs, Git repos, and deployment systems.

Use cases beyond the hype

I challenged John to think about real-world applications. “Imagine a retail company with multiple stores,” I said. “Inventory data is siloed, scattered across spreadsheets, APIs, and databases. An agent using MCP can stitch those together, infer stock levels, and make recommendations in real time.”
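As a rough sketch of that retail scenario, assume each silo is wrapped so that it returns rows in one common shape. The three source functions below stand in for MCP-backed sources and return made-up data; every name and number here is hypothetical.

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable, List, Tuple

# Each source yields (store_id, sku, quantity) rows, regardless of where the data lives.
Row = Tuple[str, str, int]
Source = Callable[[], Iterable[Row]]


def spreadsheet_source() -> List[Row]:
    return [("store-1", "sku-a", 4), ("store-1", "sku-b", 0)]


def api_source() -> List[Row]:
    return [("store-2", "sku-a", 1)]


def database_source() -> List[Row]:
    return [("store-3", "sku-a", 2), ("store-3", "sku-b", 12)]


def total_stock(sources: Iterable[Source]) -> Dict[str, int]:
    """Sum quantities per SKU across every source."""
    totals: Dict[str, int] = defaultdict(int)
    for fetch in sources:
        for _store, sku, quantity in fetch():
            totals[sku] += quantity
    return dict(totals)


def reorder_candidates(totals: Dict[str, int], threshold: int) -> List[str]:
    """Return SKUs whose combined stock is below the threshold."""
    return sorted(sku for sku, quantity in totals.items() if quantity < threshold)


totals = total_stock([spreadsheet_source, api_source, database_source])
print(totals)                                    # {'sku-a': 7, 'sku-b': 12}
print(reorder_candidates(totals, threshold=10))  # ['sku-a']
```

Once every silo speaks the same shape, the aggregation code no longer cares whether a row came from a spreadsheet, an API, or a database.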

He shared a similar story. “In the bootcamp, people kept building hacks to connect their app to Salesforce or Google Docs. MCP turns those hacks into scalable integrations. Now with official servers from GitHub, Supabase, Figma, and others, it’s already happening.”

This is also a leap forward for accessibility. You no longer need enterprise budgets or fine-tuned models to get real results. “You can do a lot with 3.5 Turbo and smart orchestration,” I reminded listeners. “A small model, a good context pipeline, and MCP: that’s a powerful stack.”

The other side: Security and vulnerability

Of course, no new standard is without risks. As more applications start using MCP, we’ll see the same security concerns that plagued early cloud apps: data leakage, OAuth token abuse, and prompt injection.

“MCP makes integration easier,” I said, “but now bad actors have a common doorway too.” John agreed: “Enterprises will need their own registries of whitelisted MCP servers. Sandboxing is going to be huge. Just like app stores eventually enforced permissions, we’ll need guardrails for agents.”
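A registry like the one John describes could start as simply as an allowlist that is checked before an agent is permitted to call a server. The sketch below is an assumption about how such a check might look, not an MCP feature: the hostnames, tool names, and the `is_allowed` helper are hypothetical.

```python
from urllib.parse import urlsplit

# Hypothetical enterprise registry: approved server hosts and the tools each may expose.
APPROVED_SERVERS = {
    "mcp.internal.example.com": {"read_inventory", "read_orders"},
    "crm.internal.example.com": {"read_contacts"},
}


def is_allowed(server_url: str, tool: str) -> bool:
    """Allow a call only over HTTPS, to an approved host, for an approved tool."""
    parts = urlsplit(server_url)
    if parts.scheme != "https":
        return False
    allowed_tools = APPROVED_SERVERS.get(parts.hostname or "")
    return allowed_tools is not None and tool in allowed_tools


print(is_allowed("https://mcp.internal.example.com/mcp", "read_inventory"))  # True
print(is_allowed("https://mcp.internal.example.com/mcp", "delete_orders"))   # False
print(is_allowed("https://unknown.example.org/mcp", "read_inventory"))       # False
```

Scoping permissions per tool, not just per server, addresses the improperly scoped tool permissions mentioned in this section: an approved server still cannot be used for an unapproved action.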

We also talked about man-in-the-middle attacks, rogue agents, and the danger of improperly scoped tool permissions. It’s all familiar territory for developers and IT security professionals. The challenge will be educating the next wave of AI builders.

Looking ahead: A year from now

We both agreed that MCP is just the beginning. In under six months, OpenAI and Google have both embraced it. “Give it another year,” I said, “and we’ll have proprietary MCP servers with enterprise features, authentication, cost controls, even blockchain verification.”

MCP isn’t the final piece, but it’s a cornerstone. It works beautifully alongside other emerging standards like A2A (agent-to-agent communication), tool registries, and structured orchestration layers.

With tools like PulseMCP.com emerging to track and index active MCP servers, we’re watching a true ecosystem take shape.

Final thoughts

MCP feels like a turning point. If you’re a builder, a product manager, or even just a curious developer, this is your time. The work we put in now will define how the next generation of AI systems operates, communicates, and evolves. Now let’s get to it!

🔗 Find MCP servers: https://pulsemcp.com
