This is Part II of a three-part series on the Economics of Ethics. In Part I of the Economics of Ethics series, we talked about economics as a framework for the creation and distribution of societal value. With AI’s ability to learn and adapt billions of times faster than humans, society must get the definition and codification of ethical behaviors right.

In Part II we will discuss the difference between financial and economic measures, the role of laws and regulations in an attempt to establish moral or ethical codes, and the potential ramifications of the recently released “AI Bill of Rights” from the White House Office of Science and Technology Policy (OSTP).

Here’s the problem with the data and AI ethics conversation – if we can’t measure it, then we can’t monitor it, judge it, or change it. We must find a way to transparently instrument and measure ethics. And that’ll become even more important as AI becomes more infused into the fabric of societal decisions around employment, credit, financing, housing, healthcare, education, taxes, law enforcement, legal representation, digital marketing and social media, news and content distribution, and more. We must solve this AI codification and measurement challenge today, and that is the objective of the “Economics of Ethics” blog series.

Difference Between Financial and Economic Metrics

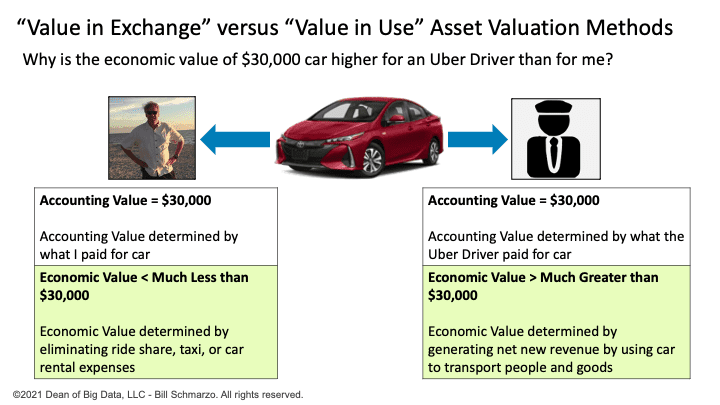

First, a history lesson. In the seminal book “The Wealth of Nations”, Adam Smith explains the key difference between financial and economic measurement with respect to value creation:

“The word VALUE has two different meanings, and sometimes expresses the utility of some particular object, and sometimes the power of purchasing other goods which the possession of that object conveys. The one may be called ‘value in use;’ the other ‘value in exchange.’”

That is, finance is a ‘value in exchange’ valuation method where value is determined by possession of an asset. Economics, on the other hand, is a ‘value in use’ valuation method where value is determined by how the asset is used to create value (Figure 1).

- “Value in exchange” is a finance/accounting-based valuation methodology in that the value of an asset is determined by what you or someone else is willing to pay for that asset.

- “Value in use” is an economics-based asset valuation methodology in that the value of an asset is determined by what additional value one can create from the use of that asset.

Figure 1: “Features Part 3: Features as Economic Assets”

To further understand the critical differences between financial metrics and economic metrics:

- Financial metrics are used to evaluate the financial performance of an organization. They are typically focused on the short term and assess that performance at a given point in time. Examples of financial metrics include revenue, profit, earnings per share (EPS), return on investment (ROI), and debt-to-equity ratio.

- Economic metrics are used to evaluate the overall health and well-being of society. They are focused on the long term, track the health of an economy or society over time, and help policymakers make decisions about economic policy. Examples of economic metrics include gross domestic product (GDP), unemployment rate, and inflation rate. Economic metrics focus on longer-term performance and consequences across a broader range of value dimensions, including education, healthcare, justice, safety, diversity, and societal, environmental, and citizen well-being.

The difference in timeframes matters a great deal. For example, we see individuals and organizations “game” financial metrics by taking actions that have short-term benefits to them but substantial long-term costs to society. Some of these examples are obvious and recent (Bernie Madoff’s Ponzi scheme, Elizabeth Holmes and Theranos, Purdue Pharma with OxyContin, the FTX crypto facade). Less nefarious are the recent reactions by companies to the potential of a recession, with some laying off people who have years of domain expertise and tribal knowledge – just the expertise and experience that organizations need to leverage AI / ML to create new sources of customer, product, service, and operational value.

However, the more deadly examples are not as obvious, and they can become the subject of wild conspiracy theories that are used to avoid the proactive ethics of doing good (climate change, marriage rights, public education, income disparity, public healthcare, judicial fairness, prison reform).

Many of these nefarious examples share a common theme: people and organizations seeking to maximize short-term personal financial gain at the cost of longer-term economic and societal benefits.

The Role of Laws and Regulations on Ethics

One way society tries to dissuade short-term gaming and encourage a long-term focus is through laws and regulations. As Mark Stouse recently posted: “What are laws but social engineering in an attempt to establish moral codes?”

Properly constructed laws and regulations can attach significant economic incentives to compliance and heavy penalties to non-compliance. In other words, laws and regulations can change behaviors by changing the associated rewards and penalties.
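To make the reward-and-penalty reasoning concrete, here is a minimal sketch of one simple way to think about it: an actor is deterred when the expected penalty outweighs the short-term gain. The function names, the expected-value model, and every number below are illustrative assumptions, not something taken from any law or from the sources cited in this blog.

```python
def expected_payoff_of_violation(gain, penalty, detection_probability):
    """Expected net payoff of breaking a rule (hypothetical model).

    gain: short-term financial gain from the violation
    penalty: fine or cost imposed if the violation is detected
    detection_probability: assumed chance (0 to 1) of being caught
    """
    return gain - detection_probability * penalty


def is_deterred(gain, penalty, detection_probability):
    """An actor is deterred when violating has no positive expected payoff."""
    return expected_payoff_of_violation(gain, penalty, detection_probability) <= 0


# Hypothetical numbers, chosen only to show how the penalty changes the decision.
short_term_gain = 100_000

weak_law = is_deterred(short_term_gain, penalty=50_000, detection_probability=0.5)
strong_law = is_deterred(short_term_gain, penalty=500_000, detection_probability=0.5)

print(f"Deterred under the weak penalty?   {weak_law}")
print(f"Deterred under the strong penalty? {strong_law}")
```

In this toy model, the same short-term gain is worth pursuing under the weak penalty and not under the strong one, which is the behavior change that well-designed incentives and penalties are trying to produce. Real decisions involve far more than a single expected-value calculation, so treat this only as a way to frame the discussion.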

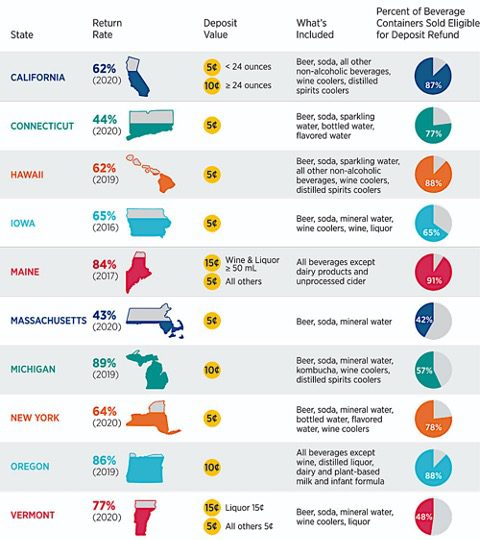

For example, states with container deposit laws have a beverage container recycling rate of around 60%, while non-deposit states reach only about 24%. Iowa’s redemption rate measured significantly higher at 71% (Figure 2). This law keeps an estimated 1.65 billion containers out of Iowa’s landfills, ditches, and waters each year[1].

Figure 2: Bottle Bill Fact Sheet; Source: Tomra.com
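As a quick back-of-the-envelope check on the recycling rates quoted above, the short sketch below compares them directly. It uses only the three percentages from the text; the variable and function names are my own, and the rates are treated as approximate.

```python
# Recycling / redemption rates quoted in the text, in whole percent.
DEPOSIT_STATES_RATE = 60      # states with container deposit laws (approx.)
NON_DEPOSIT_STATES_RATE = 24  # states without deposit laws (approx.)
IOWA_RATE = 71                # Iowa's reported redemption rate


def rate_ratio(rate_a, rate_b):
    """How many times larger rate_a is than rate_b."""
    return rate_a / rate_b


def point_gap(rate_a, rate_b):
    """Difference between two rates in percentage points."""
    return rate_a - rate_b


deposit_vs_non_deposit = rate_ratio(DEPOSIT_STATES_RATE, NON_DEPOSIT_STATES_RATE)
deposit_gap = point_gap(DEPOSIT_STATES_RATE, NON_DEPOSIT_STATES_RATE)
iowa_gap = point_gap(IOWA_RATE, NON_DEPOSIT_STATES_RATE)

print(f"Deposit states recycle {deposit_vs_non_deposit:.1f}x the non-deposit rate")
print(f"Gap, deposit vs. non-deposit states: {deposit_gap} percentage points")
print(f"Gap, Iowa vs. non-deposit states: {iowa_gap} percentage points")
```

Taken at face value, the quoted rates imply that deposit states recycle containers at roughly two and a half times the rate of non-deposit states, a sizable behavior change from a small per-container incentive.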

An understanding of economics, combined with broader measures and a longer timeframe for tracking them, can help us design laws and regulations that incentivize ethical behaviors and penalize unethical ones. Careful consideration needs to be given to the construction of these laws and regulations to avoid the unintended consequences that gave rise to the phrase “The road to hell is paved with good intentions.”

Ramifications from the “AI Bill of Rights”

On October 4, 2022, the White House Office of Science and Technology Policy (OSTP) published “Blueprint for an AI Bill of Rights,” a set of five principles and associated practices to help guide the design, use, and deployment of artificial intelligence (AI) technologies to protect the rights of the American public in the age of artificial intelligence.

To quote the preface of the extensive paper:

“Among the great challenges posed to democracy today is the use of technology, data, and automated systems in ways that threaten the rights of the American public. Too often, these tools are used to limit our opportunities and prevent our access to critical resources or services. These problems are well documented. In America and around the world, systems supposed to help with patient care have proven unsafe, ineffective, or biased. Algorithms used in hiring and credit decisions have been found to reflect and reproduce existing unwanted inequities or embed new harmful bias and discrimination. Unchecked social media data collection has been used to threaten people’s opportunities, undermine their privacy, or pervasively track their activity—often without their knowledge or consent”

The paper articulates an AI “Bill of Rights” that outlines how AI should be used (Figure 3):

- You should be protected from unsafe or ineffective systems.

- You should not face discrimination by algorithms and systems should be used and designed in an equitable way.

- You should be protected from abusive data practices via built-in protections and you should have agency over how data about you is used.

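- You should know that an automated system is being used and understand how and why it contributes to outcomes that impact you.

- You should be able to opt out, where appropriate, and have access to a person who can quickly consider and remedy problems you encounter.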

Figure 3: The AI Bill of Rights

This is a great start. The AI Bill of Rights increases awareness of the challenges with AI and represents a big step for the government in getting involved in ensuring the proper and ethical use of AI. But it is only a start. The next step would be for the White House Office of Science and Technology Policy to start identifying, and gaining cross-industry and cross-discipline alignment on, the economic measures against which adherence to the AI Bill of Rights will be measured. Once we have identified those measures, we can create AI-related laws and regulations – with the appropriate incentives and penalties – to enforce adherence to this AI Bill of Rights.

Economics of Ethics Summary – Part II

If we are going to use economics to create a framework around which we can define and measure ethical behaviors and decisions, then we must understand the differences between financial metrics – which tend to be short-term and narrow in definition – and economic metrics – which tend to be longer-term and more holistic in definition.

Understanding economics, and broadening both the measures we track and the timeframe over which we track them, can help us design laws and regulations that incentivize ethical behaviors and penalize unethical ones. Those laws and regulations must be constructed carefully to avoid the unintended consequences that gave rise to the phrase “The road to hell is paved with good intentions.”

In Part III, I will discuss what organizations can do to identify and avoid unintended unethical consequences. Finally, I’ll introduce the “Economics of Ethics” worksheet that organizations can use to formalize the process of identifying the variables and metrics that we can use not only to measure ethical behaviors but also to minimize the impact of unintended consequences.

[1] “Bottle Bill is good for our state’s environment and economy” https://www.desmoinesregister.com/story/opinion/columnists/iowa-view/2018/03/20/bottle-bill-good-our-states-environment-and-economy/441535002/