This is part 1 of a three-part series on the Economics of Ethics.

Here’s the problem with the data and AI ethics conversation: if we can’t measure it, then we can’t monitor it, judge it, or change it. We must find a way to transparently instrument and measure ethics. That will become even more important as AI becomes further infused into the fabric of societal decisions around employment, credit, financing, housing, healthcare, education, taxes, law enforcement, legal representation, digital marketing and social media, news and content distribution, and more. We must solve this AI codification and measurement challenge today, and that is the objective of the “Economics of Ethics” blog series.

Take heed, the day of judgment draweth nigh. Artificial Intelligence (AI) is going to force our hand with respect to how we, as a society, define and measure ethical behaviors. No longer can we allow short-term pursuits to override long-term benefits. The larger benefits of society must be prioritized over the exploitation for individual instant gratification that is so prevalent today. With AI’s ability to learn and adapt billions of times faster than humans (thanks to Moore’s Law), society must get the definition and codification of ethical behaviors right. Otherwise, we will end up working for AI rather than AI working for us.

The warnings are everywhere about the dangers of poorly constructed, inadequately defined AI models that could run amok over humankind.

“AI, by mastering certain competencies more rapidly and definitively than humans, could over time diminish human competence and the human condition itself as it turns it into data. Philosophically, intellectually — in every way — human society is unprepared for the rise of artificial intelligence.” – Henry Kissinger, MIT Speech, February 28, 2019

“The development of full artificial intelligence (AI) could spell the end of the human race. It would take off on its own, and re-design itself at an ever-increasing rate. Humans, limited by slow biological evolution, couldn’t compete and would be superseded.” – Stephen Hawking, BBC Interview on Dec 2, 2014

And don’t expect Asimov’s Three Laws of Robotics (AI) to protect us:

- Law #1: A robot (AI) may not injure a human being or, through inaction, allow a human being to come to harm.

- Law #2: A robot (AI) must obey orders given it by human beings except where such orders would conflict with the First Law.

- Law #3: A robot (AI) must protect its own existence as long as such protection does not conflict with the First or Second Law.

These laws may make for good books and movies, but they are insufficient for guiding the AI models used to make employment, credit, financing, housing, healthcare, education, taxes, law enforcement, legal representation, digital marketing and social media, content and news distribution, and many other decisions that directly impact society.

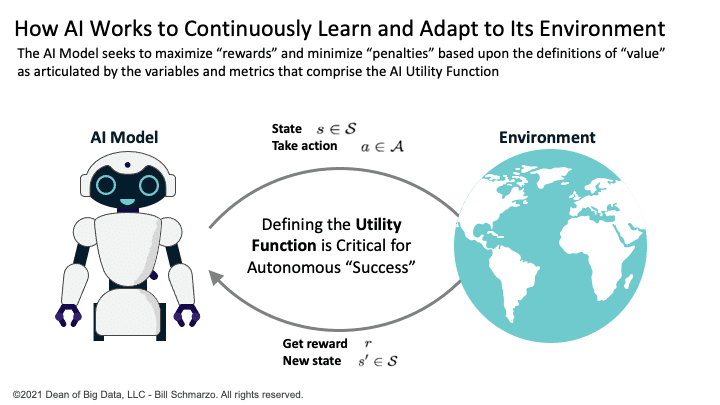

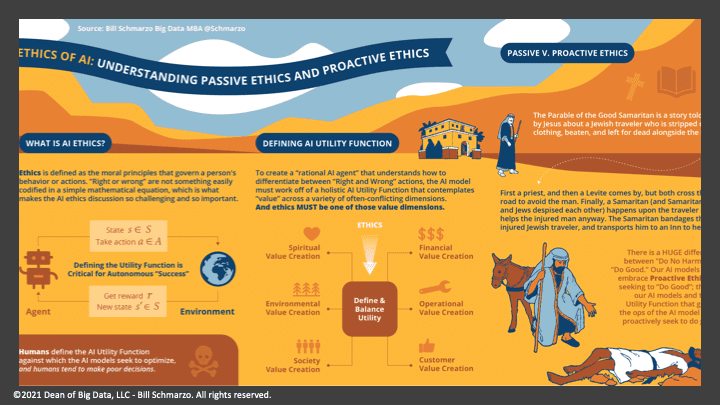

Consequently, codifying “ethics” is a major challenge in getting Artificial Intelligence (AI) models to do the “right” things when making ethical decisions. Codifying ethics as variables and metrics is especially important because AI models are continuously learning and adapting, at speeds unfathomable to humans, to maximize “rewards” and minimize “penalties” based upon the variables and metrics that comprise the AI Utility Function (Figure 1).

Figure 1: Understanding How AI Models Work
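To make the idea of a utility function a bit more concrete, here is a minimal, hypothetical sketch in Python. It assumes the utility is simply a weighted sum of metric values, where positive weights act as “rewards” and negative weights act as “penalties.” The metric names, weights, and loan-approval scenario are invented for illustration and do not come from any real model. The point is that the model optimizes only what is encoded: a value such as fairness that never appears as a metric cannot influence the decision.

```python
from collections.abc import Mapping
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    """One variable in a hypothetical AI utility function."""
    name: str
    weight: float  # positive weight = reward, negative weight = penalty


def utility(metrics: list[Metric], observed: Mapping[str, float]) -> float:
    """Weighted sum of observed metric values; unmeasured metrics count as 0."""
    return sum(m.weight * observed.get(m.name, 0.0) for m in metrics)


def best_action(metrics: list[Metric],
                candidates: Mapping[str, Mapping[str, float]]) -> str:
    """Pick the candidate action whose predicted outcomes score highest."""
    return max(candidates, key=lambda action: utility(metrics, candidates[action]))


# Hypothetical loan-approval example: the model "values" only what is listed here.
metrics = [
    Metric("expected_profit", 1.0),
    Metric("default_risk", -2.0),
]
candidates = {
    "approve": {"expected_profit": 5.0, "default_risk": 1.0},
    "deny": {"expected_profit": 0.0, "default_risk": 0.0},
}

print(best_action(metrics, candidates))  # approve (5 - 2 = 3 beats 0)
```

Adding or reweighting a metric changes which action wins, which is why deciding what goes into the utility function is itself an ethical choice.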

In my blog “Is God an Economist?”, I suggested that society can achieve positive economic outcomes driven by the ethics of fairness, equality, justice, and generosity taught by the Bible. The lessons in the Bible are really economic lessons: lessons about the creation and distribution of a more holistic definition of wealth or “value.”

Economics is a framework for the creation and distribution of wealth or value

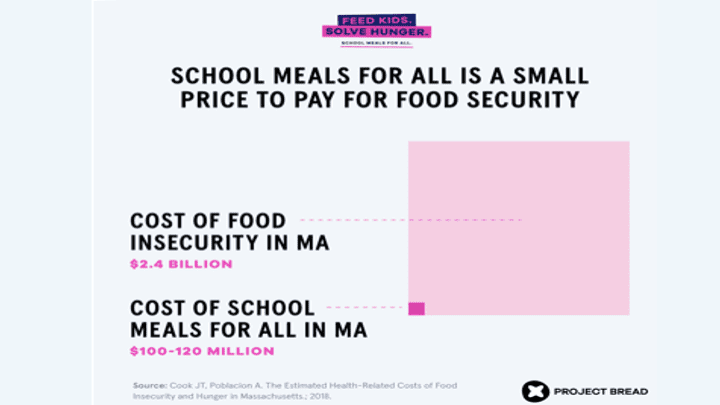

In one example, a Massachusetts study shows the value potential of a fairness program called “School Meals for All.” Massachusetts spends $2.4B annually on student mental health issues, diabetes, obesity, and cognitive development issues. Implementing School Meals for All would cost $100–120M annually, an investment that pays 10x dividends in improved health and education outcomes while creating humans who contribute to society (Figure 2).

Figure 2: “It’s Just Good Economics: The Case for School Meals for All”

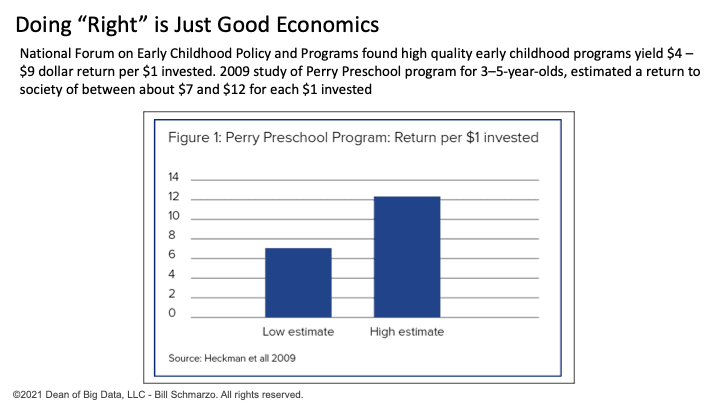

In another example, the National Forum on Early Childhood Policy and Programs found that early childhood programs can yield a return of $4 to $9 per $1 invested. In another study (a 2009 study of the Perry Preschool program), an early childhood program for 3- to 5-year-olds delivered an estimated $7 to $12 per $1 invested (Figure 3)[1].

Figure 3: Ethical Behaviors Just Make Good Economic Sense

Yes, ethical behaviors are just good economics. But before we try to “codify” ethical behaviors, we first need to get alignment on what we mean by the word “ethics”.

What are Ethics? Ethics are Proactive.

Ethics are the moral principles that govern a person’s behavior or actions, where moral principles are the principles of “right and wrong” that are generally accepted by an individual or a social group.

Or as my mom used to say, “Ethics is what you do when no one is watching.”

An important characteristic of ethics is that it is proactive, not passive. Ethics is a proactive endeavor: one must be held responsible for taking the appropriate action. And if you don’t know the difference between passive and proactive ethics, then it’s time for a Bible lesson refresher: the Parable of the Good Samaritan.

In the Parable of the Good Samaritan, Jesus tells of a Jewish traveler who is stripped of his clothing, beaten, and left for dead alongside the road. First a priest and then a Levite come by, but both cross to the other side of the road to avoid the man. Finally, a Samaritan (Samaritans and Jews despised each other) happens upon the traveler and helps the battered man anyway. The Samaritan bandages his wounds, carries him on his beast of burden to an inn to rest and heal, and pays for the traveler’s care and accommodations at the inn (Figure 4).

Figure 4: “AI Ethics Challenge: Understanding Passive versus Proactive Ethics”

The priest and the Levite operated under the Passive Ethics of “Do No Harm”. Technically, they did nothing wrong. But that mindset is insufficient in a world driven by AI models. Our AI models must embrace Proactive Ethics by seeking to “Do Good”; that is, the AI Utility Function that guides the continuous learning and adapting aspects of the AI model must proactively seek to do good.
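One hedged way to picture the difference between passive and proactive ethics inside a utility function: a “Do No Harm” function only subtracts penalties for harm caused, while a “Do Good” function adds a reward for help provided. The Python sketch below replays the Good Samaritan scene under that assumption. The action names, the harm/help/cost scores, and the weights are all hypothetical illustrations, not a real scoring scheme.

```python
from collections.abc import Callable, Mapping

Outcome = Mapping[str, float]

# Hypothetical outcomes for each action a passer-by could take.
# harm: harm the actor causes; help: benefit delivered to the traveler;
# cost: the actor's own cost (a negative cost means the actor gains).
ACTIONS: dict[str, Outcome] = {
    "cross_the_road": {"harm": 0.0, "help": 0.0, "cost": 0.0},
    "rob_the_traveler": {"harm": 10.0, "help": 0.0, "cost": -1.0},
    "bandage_and_carry_to_inn": {"harm": 0.0, "help": 8.0, "cost": 3.0},
}


def passive_utility(o: Outcome) -> float:
    """'Do No Harm': heavily penalize harm and count the actor's cost; ignore help."""
    return -10.0 * o["harm"] - o["cost"]


def proactive_utility(o: Outcome) -> float:
    """'Do Good': the same harm penalty, plus a reward for help provided."""
    return passive_utility(o) + 1.0 * o["help"]


def choose(utility: Callable[[Outcome], float]) -> str:
    """Return the action that maximizes the given utility function."""
    return max(ACTIONS, key=lambda action: utility(ACTIONS[action]))


print(choose(passive_utility))    # cross_the_road
print(choose(proactive_utility))  # bandage_and_carry_to_inn
```

Under the passive function, crossing the road scores best because helping only costs the actor, just as it did for the priest and the Levite. Adding a reward term for help is what flips the choice. The weights here are arbitrary; choosing them well is exactly the codification problem this series is about.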

Economics of Ethics Summary – Part I

In Part I of the Economics of Ethics series, we talked about economics as a framework for the creation and distribution of societal value. With AI’s ability to learn and adapt billions of times faster than humans, society must get the definition and codification of ethical behaviors right. Otherwise, we will end up working for AI rather than AI working for us.

In Part II, we will discuss the difference between financial and economic measures, and the role of laws and regulations in attempting to establish societal moral and ethical codes.

[1] “High Return on Investment (ROI)” https://www.impact.upenn.edu/early-childhood-toolkit/why-invest/what-is-the-return-on-investment/