It is easy, when dealing with data, to think of it as something tangible. More precisely, the metaphor of data as water (or as oil) has become so powerful that many managers, and more than a few technically oriented people, have come to accept the idea that data engineering is largely about moving data from one database to another, potentially larger one.

However, the metaphor leaks … badly. A health insurance record, a DNA transcription, and a collection of Twitter messages are all data: that is to say, they are fossilized imprints of specific processes that occurred in the past. Yet what they describe, and how they describe it, are structurally very different. The wonder of digitization is that we can in fact send this content across the wires (or the ether) regardless of its form, but that does not in the least obviate the fact that the data being sent varies wildly from one scenario to the next.

This is one reason why data warehouses, in general, provide only moderate benefit at a potentially high cost. In most data warehouses, information is stored in what amounts to a single common data store partitioned into distinct virtualized databases, so that queries can be run without having to work with multiple connections simultaneously. However, this does nothing about the semantics of the data. The data in these stores are still bound to specific keys; the boundaries between datasets, while softer than they were when the data sat on separate machines, are still significant; and the semantics of how that data is stored remain wildly divergent from one package to the next.
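To make the point about keys concrete, here is a minimal sketch that uses Python's built-in `sqlite3` module as a stand-in for a warehouse. Two hypothetical datasets (a claims table keyed by `member_id` and a patients table keyed by `patient_no`) share one store and one connection, yet nothing in the store knows that `'M-0042'` and `42` identify the same person. The table names, keys, and ID convention are invented for illustration.

```python
import sqlite3

# An in-memory SQLite database stands in for the warehouse: one store, one connection.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE claims (member_id TEXT, amount REAL);     -- hypothetical insurer export
    CREATE TABLE patients (patient_no INTEGER, name TEXT); -- hypothetical clinic export
    INSERT INTO claims VALUES ('M-0042', 125.0), ('M-0007', 80.0);
    INSERT INTO patients VALUES (42, 'Ada'), (7, 'Grace');
""")

# Co-location alone does not reconcile the keys: a direct join finds nothing.
naive_matches = conn.execute(
    "SELECT COUNT(*) FROM claims c JOIN patients p ON c.member_id = p.patient_no"
).fetchone()[0]

# The semantic mapping ('M-0042' means patient 42) has to be hand-written for this pair.
rows = conn.execute("""
    SELECT p.name, c.amount
    FROM claims AS c
    JOIN patients AS p
      ON CAST(substr(c.member_id, 3) AS INTEGER) = p.patient_no
    ORDER BY p.name
""").fetchall()

print(naive_matches)  # 0
print(rows)           # [('Ada', 125.0), ('Grace', 80.0)]
conn.close()
```

Every new pair of datasets needs another mapping like the `substr`/`CAST` join above, which is exactly the semantic work the shared store leaves undone.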

This is about the point where machine learning and deep learning advocates will argue that this can be solved via some kind of kernel-based neural network algorithm. For some classes of operations, especially those involving classification based upon re-entrant learning such as image, voice, and sound recognition, this is certainly the case.

Semantic systems, such as RDF triple stores, make the barriers between data even thinner, first by creating virtualized graphs that act as collections of information, then by handling both queries and updates through a comprehensive language (SPARQL or, possibly, GQL). This makes it possible to query both the data and its associated metadata regardless of which graph the information is in. The semantic approach doesn’t solve the underlying semantic problem completely, but it takes you much farther down the path.
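The idea of querying data and metadata across named graphs can be sketched with a toy in-memory quad store. This is plain Python, not an RDF library or SPARQL engine: each statement carries the name of the graph it lives in, and a hypothetical `g:meta` graph holds statements *about* the other graphs. All identifiers are invented for illustration.

```python
from typing import NamedTuple, Optional


class Quad(NamedTuple):
    graph: str
    subject: str
    predicate: str
    obj: str


# Hypothetical data: two named graphs plus a "meta" graph that describes them.
QUADS = {
    Quad("g:claims", "ex:member42", "ex:name", "Ada"),
    Quad("g:genomics", "ex:sample9", "ex:donor", "ex:member42"),
    Quad("g:meta", "g:claims", "ex:source", "insurer-export"),
    Quad("g:meta", "g:genomics", "ex:source", "lab-pipeline"),
}


def match(subject: Optional[str] = None, predicate: Optional[str] = None,
          obj: Optional[str] = None, graph: Optional[str] = None) -> list[Quad]:
    """Return matching quads, sorted; None acts as a wildcard, much like a SPARQL variable."""
    return sorted(
        q for q in QUADS
        if (graph is None or q.graph == graph)
        and (subject is None or q.subject == subject)
        and (predicate is None or q.predicate == predicate)
        and (obj is None or q.obj == obj)
    )


# Find every statement mentioning ex:member42 in any graph, then look up each
# graph's source in the metadata graph. SPARQL expresses the same idea with
# GRAPH ?g { ... } patterns; this toy just filters tuples.
mentions = match(subject="ex:member42") + match(obj="ex:member42")
report = [(q.graph, match(subject=q.graph, predicate="ex:source", graph="g:meta")[0].obj)
          for q in mentions]
print(report)  # [('g:claims', 'insurer-export'), ('g:genomics', 'lab-pipeline')]
```

Because graph names are themselves ordinary identifiers, the same matching function answers questions about the data and about where the data came from.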

Something else emerging out of the RDF world is the notion of Data Shapes, which echoes the XML Schematron language of the early 2000s. It is possible to create a schema with data shapes, since shapes can express what most schema languages specify (relationships, cardinality, and pattern restrictions). However, a data shape both generalizes this concept and, unlike a schema, can be applied to specific instances, even when the types of those instances are unknown.
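A toy, plain-Python sketch (not a SHACL engine) of what "applying a shape to instances whose types are unknown" means. The shape is a hypothetical dictionary loosely modeled on a SHACL node shape that uses `sh:targetSubjectsOf`, so it selects focus nodes by the presence of a property rather than by class, with constraints resembling `sh:minCount`, `sh:maxCount`, and `sh:pattern`. The node data and the `validate` helper are invented for illustration.

```python
import re
from dataclasses import dataclass

# Hypothetical shape: applies to ANY node that has an ex:email property,
# whatever its type (or even if it has no declared type at all).
SHAPE = {
    "target_subjects_of": "ex:email",
    "properties": {
        "ex:email": {"min_count": 1, "max_count": 1, "pattern": r"^[^@\s]+@[^@\s]+$"},
        "ex:name": {"min_count": 1},
    },
}


@dataclass
class Result:
    focus_node: str
    path: str
    message: str


def validate(nodes: dict[str, dict[str, list[str]]], shape: dict) -> list[Result]:
    """Check every targeted node against the shape and explain each violation."""
    results = []
    for node_id, props in sorted(nodes.items()):
        if shape["target_subjects_of"] not in props:
            continue  # not a focus node for this shape
        for path, c in shape["properties"].items():
            values = props.get(path, [])
            if len(values) < c.get("min_count", 0):
                results.append(Result(node_id, path,
                    f"expected at least {c['min_count']} value(s), found {len(values)}"))
            if "max_count" in c and len(values) > c["max_count"]:
                results.append(Result(node_id, path,
                    f"expected at most {c['max_count']} value(s), found {len(values)}"))
            for v in values:
                if "pattern" in c and not re.search(c["pattern"], v):
                    results.append(Result(node_id, path,
                        f"value {v!r} does not match pattern {c['pattern']}"))
    return results


nodes = {
    "ex:a": {"ex:email": ["ada@example.org"], "ex:name": ["Ada"]},
    "ex:b": {"ex:email": ["not-an-email"]},  # untyped, and missing a name
    "ex:c": {"ex:name": ["Untargeted"]},     # no ex:email, so the shape ignores it
}
results = validate(nodes, SHAPE)
for r in results:
    print(r.focus_node, r.path, r.message)
```

Note that no node declares a type; the shape finds its targets from the data itself.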

The W3C Shapes Constraint Language (SHACL) provides a framework for defining such shapes, and opens up a very different view on data, especially data expressed in RDF, than is available in most other schema languages. This marriage of validation, constraint management, and reporting can in turn drive additional actions, define user interfaces, and even change the interfaces (relationships) that apply to given objects.
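One way shapes can define user interfaces is by generating form descriptions from the same constraints used for validation. Below is a minimal, hypothetical sketch: the property-shape dictionaries loosely mirror SHACL's `sh:path`, `sh:minCount`, `sh:maxCount`, and `sh:datatype`, while the widget mapping and the `form_fields` helper are invented for illustration and are not part of any SHACL tooling.

```python
# Hypothetical property constraints, loosely modeled on SHACL property shapes.
PROPERTY_SHAPES = [
    {"path": "ex:name", "min_count": 1, "max_count": 1, "datatype": "xsd:string"},
    {"path": "ex:birthDate", "max_count": 1, "datatype": "xsd:date"},
    {"path": "ex:alias", "datatype": "xsd:string"},
]

# Hypothetical mapping from datatypes to input widgets; unknown types fall back to text.
WIDGETS = {"xsd:string": "text", "xsd:date": "date"}


def form_fields(property_shapes: list[dict]) -> list[dict]:
    """Derive form-field descriptors from the constraints used for validation."""
    fields = []
    for ps in property_shapes:
        fields.append({
            "name": ps["path"],
            "widget": WIDGETS.get(ps.get("datatype"), "text"),
            "required": ps.get("min_count", 0) >= 1,   # minCount >= 1 means mandatory
            "repeatable": ps.get("max_count") != 1,    # no maxCount of 1 means multi-valued
        })
    return fields


fields = form_fields(PROPERTY_SHAPES)
for f in fields:
    print(f)
```

The design choice is simply to keep one source of truth: if a constraint changes, the form and the validator change together.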

This becomes especially powerful when SHACL makes use of SPARQL to identify especially rich patterns (is an enzyme created from a laboratory organism in a laboratory in Prague, and genetically engineered using tools developed in France, compliant with regulations in Germany?). The difference between this and a query, however, is that SHACL can tell you not only what is not compliant but, more importantly, why it is not. In many cases, this is far more valuable information to a company looking to do business in Germany than knowing what is compliant, because it offers insights into bringing those non-compliant products to market.
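The sketch below is a plain-Python analogy, not SHACL: each hypothetical rule pairs a check with an explanation, much as a SHACL-SPARQL constraint can attach an `sh:message` to the validation results it produces, so the output lists *why* a product fails rather than a bare yes/no. The rules, approved lists, and attestation names are invented for illustration and do not reflect actual German regulations.

```python
from dataclasses import dataclass, field


@dataclass
class Product:
    name: str
    organism_source: str  # e.g. "laboratory"
    produced_in: str      # city of production
    tools_from: str       # country code where the engineering tools were developed
    attestations: set[str] = field(default_factory=set)


# Hypothetical approved lists; NOT real regulatory data.
APPROVED_SITES = {"Prague", "Berlin"}
APPROVED_TOOL_ORIGINS = {"DE"}

# Each rule: (check that must hold, explanation reported when it does not).
RULES = [
    (lambda p: p.organism_source != "laboratory" or "biosafety-cert" in p.attestations,
     "laboratory-derived organisms need a biosafety certificate"),
    (lambda p: p.produced_in in APPROVED_SITES or "import-license" in p.attestations,
     "production outside approved sites needs an import license"),
    (lambda p: p.tools_from in APPROVED_TOOL_ORIGINS or "tool-equivalence" in p.attestations,
     "engineering tools of non-approved origin need an equivalence attestation"),
]


def explain(product: Product) -> list[str]:
    """Return every reason the product fails; an empty list means compliant."""
    return [reason for check, reason in RULES if not check(product)]


enzyme = Product("enzyme-x", "laboratory", "Prague", "FR")
certified = Product("enzyme-x", "laboratory", "Prague", "FR",
                    {"biosafety-cert", "tool-equivalence"})
print(explain(enzyme))
print(explain(certified))  # []
```

The reasons double as a to-do list: adding the two missing attestations is what moves the product from non-compliant to compliant.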

The power of SHACL is compelling enough that this particular RDF standard is increasingly being integrated into JavaScript and JSON toolchains, something RDF has to date had difficulty achieving. It is also, surprisingly, providing a proving ground for combining JavaScript, RDF, JSON, and SPARQL, pointing to a growing realization that the RDF stack may be important in a graph-oriented future.

Kurt Cagle

Community Editor,

Data Science Central

To subscribe to the DSC Newsletter, go to Data Science Central and become a member today. It’s free!

Data Science Central Editorial Calendar

DSC is looking for editorial content specifically in these areas for April 2022, with these topics having higher priority than other incoming articles.

- Autonomous Drones

- Knowledge Graphs and Modeling

- Military AI

- Cloud and GPU

- Data Agility

- Metaverse and Shared Worlds

- Astronomical AI

- Intelligent User Interfaces

- Verifiable Credentials

- Automotive 3D Printing

DSC Featured Articles

- “Avoid RegTech myopia with a data-centric approach” by Alan Morrison, 23 Mar 2022

- “The long game: Feedback loops and desiloed systems by design (Part II of II)” by Alan Morrison, 23 Mar 2022

- “How Metadata Improves Security, Quality, and Transparency” by Lewis Wynne-Jones, 22 Mar 2022

- “Business Analytics from Application Logs and SQL Server Database using Splunk” by Roopesh Uniyal, 22 Mar 2022

- “Is the Data Scientist the Weak Link in Data-driven Value Creation?” by Bill Schmarzo, 22 Mar 2022

- “Tips for Weaving and Implementing a Successful Data Mesh” by Alberto Pan, 22 Mar 2022

- “How to Modernize Enterprise Data and Analytics Platform (Part 2 of 4)” by Alaa Mahjoub, M.Sc. Eng., 22 Mar 2022

- “AI SEO: How AI Helps You Optimize Content for Search Results” by Karen Anthony, 22 Mar 2022

- “Understanding the Role of Augmented Data Catalogs in Data Governance” by Indhu, 22 Mar 2022

- “Security Issues in The Metaverse” by Stephanie Glen, 22 Mar 2022

- “History of the Metaverse in One Picture” by Stephanie Glen, 21 Mar 2022

- “Google Colab to a Ploomber pipeline: ML at scale” by Michael Ido, 20 Mar 2022

- “Biases in CLIP and the Stanford HAI report” by ajitjaokar, 20 Mar 2022

- “How to Modernize Enterprise Data and Analytics Platform (Part 1 of 4)” by Alaa Mahjoub, M.Sc. Eng., 20 Mar 2022

- “DSC Weekly Digest 15 March 2022: Beware the Ides of …” by Kurt Cagle, 20 Mar 2022

- “Think AI Can’t Take Your Job? Think Again” by Zachary Amos, 15 Mar 2022

- “Selling Your Digital Content on Amazon, Without Kindle” by Vincent Granville, 14 Mar 2022

- “Data Augmented Healthcare Part 1” by Scott Thompson, 14 Mar 2022

- “Why is Data Back-Up Necessary? The Benefits of Availing Technical Support” by Karen Anthony, 13 Mar 2022

- “Cloud-Based Mobile App Testing: A Complete Guide” by Ryan Williamson, 13 Mar 2022

- “Opening the Pod Bay Door: Regulating multi-purpose AI” by ajitjaokar, 13 Mar 2022

- “Automotive AR and VR — Prototyping in the Virtual World” by Nikita Godse, 13 Mar 2022

- “Why do you need a metadata management system? Definition and Benefits.” by Indhu, 13 Mar 2022

- “When Good Data Goes Bad” by Sameer Narkhede, 12 Mar 2022

- “Top 5 Fundamental Concepts of Data Engineering” by Karen Anthony, 10 Mar 2022

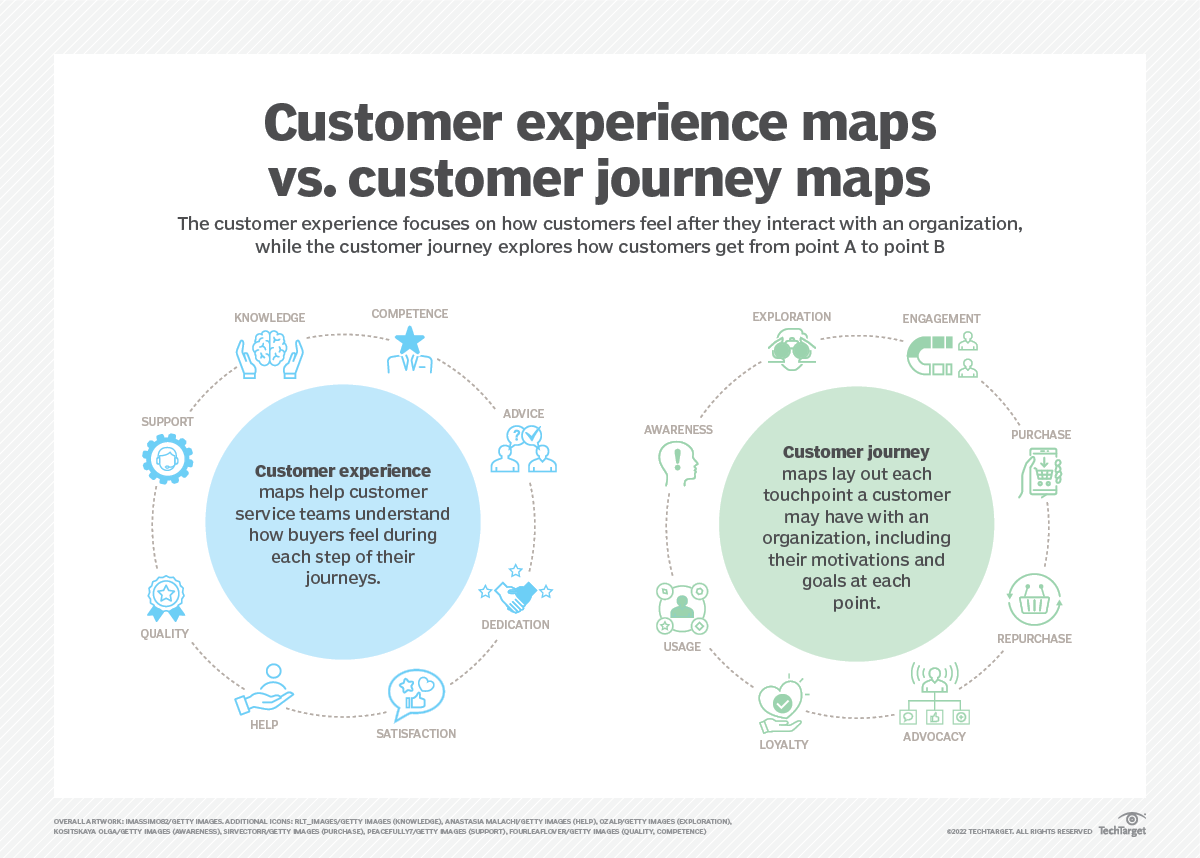

Picture of the Week