Over the last thirty years, two camps have emerged (I’m not sure that calling them schools of thought is even warranted), each taking one of two positions: 1) the advent of robots and computers will take away my job, or 2) the computer and robotics age will herald the rise of all kinds of new jobs. Thirty years in, the answer to whether #1 or #2 is correct is “Yes”, or, put less succinctly, it’s complicated.

The reality is that this is actually not a very useful question to ask. I suspect a better one may be:

“Will the advent of automation reduce my ability to participate in the economy?”

This requires a bit of parsing out. What does “participating in the economy” mean? In essence, an economy is a way to ensure that you have housing, food, clothing, entertainment, education, transportation, and so forth sufficient to your needs (and potentially to your desires). In the U.S. (and most other countries) the way that is done is through capitalism – you sell your labor (physical or mental) to others for tokens (currency) that can then be exchanged for goods and services, or you provide currency in order to facilitate the production of said goods or services (investing). The primary difference between capitalism and communism (from an economic standpoint) is that the number of tokens that you receive for labor or goods is set by a marketplace in the former, and by the government in the latter. In practice, even the largest Communist country, China, uses a mostly capitalistic model for just about everything, with the primary distinction between the two models being the degree of dissent that is tolerated (an important distinction, but not immediately germane here).

Ultimately, the number of tokens that one receives for providing those goods or services can be thought of as the value of those goods and services, and that value is determined by a great number of factors. In the physical world, one of the biggest value determinants is scarcity. Because physical things are unique (they occupy space and time), energy must be expended upon materials to create those things. Labor too is a function of scarcity – the fewer the number of people capable of doing a job, the longer it takes for a given person to accomplish that job, and the more necessary that job is, the more valuable the labor of that person is. Perception too goes into that equation – because a CEO is responsible for the overall direction of a company, the perception is that he or she should be compensated accordingly, even when that CEO personally may have very little impact upon the success of the company. Finally, value is determined by luck, which can be seen as yet another rarefying factor: is the need for that role higher at a particular time than it is at some other time?

One other critical factor involved in economics is the size of any barrier to entry into a given market. The cost of setting up a factory is several orders of magnitude higher than that of setting up a software company, so the barrier to entry is considerably higher, reducing the number of potential investors while simultaneously increasing the degree to which an investor can dictate the direction of a company (and can dictate terms on returns from an investment).

Something changed about twenty-five years ago. The virtual expanse was opened up, and this new “virtual reality” was based upon a very different physics. Specifically, the cost of creating the first book (as an example) is relatively fixed – it is the time that it takes to actually put together a cohesive, interesting narrative, and embed it within a software “package.” The cost to sell the second copy is so close to zero as to be zero in physical terms, as is the cost to produce the ten-thousandth copy. If a book is actively sought after by millions of people, it clearly has value, but for the creator of that book, the lack of uniqueness means that they make no money – they cannot participate in the economy, despite having produced a work of great value.
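To put rough numbers on that cost structure, here is a minimal sketch of how the average cost per copy collapses once a fixed up-front cost is spread across near-free digital copies. The figures and the function name `average_cost_per_copy` are hypothetical, chosen only for illustration.

```python
def average_cost_per_copy(fixed_cost: float, marginal_cost: float, copies: int) -> float:
    """Average cost of each copy when one up-front cost is spread over all copies."""
    if copies < 1:
        raise ValueError("copies must be at least 1")
    return fixed_cost / copies + marginal_cost


# Hypothetical figures: 20,000 tokens of effort to write the book,
# 0.001 tokens to deliver each additional digital copy.
FIXED_COST = 20_000.0
MARGINAL_COST = 0.001

for n in (1, 100, 10_000, 1_000_000):
    cost = average_cost_per_copy(FIXED_COST, MARGINAL_COST, n)
    print(f"{n:>9,} copies -> {cost:,.3f} per copy")
```

The per-copy cost falls toward the marginal cost as the number of copies grows, which is why scarcity-based pricing is so hard to sustain for purely digital goods.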

Over the last ten years, we’ve wrestled with this conundrum of creating scarcity in the virtual world. The Bitcoin / Ethereum / NFT / blockchain efforts are primarily the logical successors of digital rights protection in the CD days, in that they attempt to create uniqueness by replacing space with time – associating value with unique events in time, such as finding a given arbitrary large prime number, or recording when a given atom of uranium decays. There are, of course, numerous problems with such schemes, including who determines which scheme should be used and then ascertaining which group’s ledger becomes the ledger of record. There is also the danger inherent in quantum computers, which could in theory solve most encryption key sets overnight, rendering the whole system moot.
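As a rough illustration of manufacturing scarcity from computation, here is a simplified proof-of-work sketch. Note the swap: instead of searching for a prime, it searches for a number (a nonce) whose SHA-256 hash starts with a set number of zeros, which is closer in spirit to how Bitcoin-style mining works. The function names, the sample data, and the difficulty value are all assumptions made for this example, not any real network's protocol.

```python
import hashlib


def find_nonce(data: str, difficulty: int) -> tuple[int, str]:
    """Search for the first nonce whose hash starts with `difficulty` hex zeros."""
    prefix = "0" * difficulty
    nonce = 0
    while True:
        digest = hashlib.sha256(f"{data}:{nonce}".encode("utf-8")).hexdigest()
        if digest.startswith(prefix):
            return nonce, digest
        nonce += 1


def verify(data: str, nonce: int, difficulty: int) -> bool:
    """Checking a claimed nonce takes one hash, however long the search took."""
    digest = hashlib.sha256(f"{data}:{nonce}".encode("utf-8")).hexdigest()
    return digest.startswith("0" * difficulty)


# Hypothetical ledger entry; difficulty 3 keeps the search to a few thousand hashes.
entry = "hypothetical ledger entry"
nonce, digest = find_nonce(entry, 3)
print(nonce, digest)
print(verify(entry, nonce, 3))
```

The asymmetry is the point: finding a qualifying nonce is expensive, while verifying one is cheap, so the result is hard to produce but easy for a market to agree upon.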

In practice, what ends up happening is that a market emerges, and people determine value by the process of negotiation. Most real-world currencies exist in the same way – their inherent value is determined by many factors, always fluctuating, but typically such currencies hold value by consensus.

Automation changes this somewhat, but ironically not in the way that most people believe. In real life, the cost of manufacturing most goods is well below the final price that those goods end up commanding. In a work-from-home environment (where physical proximity to a job is no longer a determinant), the cost of mental labor goes down a bit where there’s a differential in cost of living. The supply of highly talented people in any given area is still the biggest determinant, however; the difference is that many more companies are now bidding across a labor pool that is only somewhat larger than it had been, especially if that labor is also able to produce goods or provide services itself.

On the flip side, the cost of physical labor also tends to go up, because proximity is still a constraint there. Humans are versatile creatures, and many operations that we tend to look upon as being “easy” to do – such as cleaning a hotel room – actually require a lot of flexibility that most robots will likely not have for decades, if ever. That doesn’t mean that certain tasks couldn’t be automated – vacuuming, for instance, could in theory be accomplished by robots – but you are still talking about vacuuming up pens, string, gum, and all other kinds of things that are likely to turn the average Roomba into a dead Roomba within a few days.

Some jobs, of course, will go away, though surprisingly few. GPT-3 and other natural language generators will likely eliminate the role of copywriters for catalogs or press releases, because that writing is already highly formulaic. For fiction writers, such generators will simply become part of the toolbox – programs that can sit back and correct grammar and style silently, while not being sufficiently complex to build compelling storylines, because few computer applications (especially machine learning ones) handle novel situations well.

The same argument can be made for driverless vehicles. The typical truck driver does far more than steer the vehicle: he or she assesses hazardous situations, deals with emergencies, provides security for the vehicle, and is responsible for the manifest. That driver will still be in the driver’s seat twenty years from now, but won’t have to drive as dangerously to do the job.

Ultimately, I suspect that the negative impact that automation will have on jobs is already past. Most of us are already utilizing computer automation and AI in dozens of different ways daily, and the massive job losses that were characteristic of the early computer era (where the machines DID replace a lot of mechanical rote work) have already happened. Instead, the ability of these machines to act as augmentative helpers will simply mean that people who are already too stressed and overworked may actually gain a measure of control over their lives.

DSC Featured Articles

- Is the GPU the new CPU? Kurt Cagle on 26 Jan 2022

- Tips for Learning Hadoop in a Month of Lunches Karen Anthony on 25 Jan 2022

- Banking Data: The Best Way to Understand Carbon Footprint Evan Morris on 25 Jan 2022

- Wearable Technology Demand Continues to Grow PragatiPa on 25 Jan 2022

- Notes to New and Returning DSC Writers Kurt Cagle on 25 Jan 2022

- The Shape of Data Kurt Cagle on 25 Jan 2022

- What Are Lagrange Points? Kurt Cagle on 24 Jan 2022

- How Cyber Security Consulting Can Prevent Attacks in BFSI’s Digital Era PragatiPa on 24 Jan 2022

- Data Literacy Education Framework – Part 1 Bill Schmarzo on 24 Jan 2022

- Top 10 Most In-Demand Skills in the World of Data Science Karen Anthony on 24 Jan 2022

- DSC Weekly Digest for January 18, 2022: Web 3 Isn’t About Blockchain But Rather Gaming Kurt Cagle on 24 Jan 2022

- Digital Twin Technology: Reconciling the Physical and Digital Nikita Godse on 24 Jan 2022

- Can Digital Twins Prevent a Pandemic? Stephanie Glen on 24 Jan 2022

- Cloud certifications: pros and cons ajitjaokar on 23 Jan 2022

- 5 Alternatives to Search Engine Optimization EdwardNick on 22 Jan 2022

- Quasi A/B test: An overlooked opportunity in company-sponsored research Coveo Data Science Team on 21 Jan 2022

- Deciphering the SQL Fix Recovery Model Karen Anthony on 21 Jan 2022

- A Framework for Understanding Transformation: Part II Howard M. Wiener on 20 Jan 2022

- From Excel to Visualization via Ploomber Rowan Molony on 20 Jan 2022

- Top 12 Software Development Trends in 2022 You Should Watch For Avani Trivedi on 19 Jan 2022

- Internet of Robotic Things : Robotics and Intelligence Evolving to Make the New Era Nikita Godse on 18 Jan 2022

- 5 Features Of Employee Scheduling Software For Your Team Evan Morris on 18 Jan 2022

- Why Microsoft Power BI Is Among The Most Popular Business Analytics Tools ImensoSoftware on 17 Jan 2022

- CDO Challenge: Providing Clear “Line of Sight” from Data to Value Bill Schmarzo on 17 Jan 2022

- Notable enterprise data trendsetters, 2002 – 2022 Alan Morrison on 17 Jan 2022

DSC Editorial Calendar

DSC is looking for editorial content specifically in these areas for February, with these topics having higher priority than other incoming articles.

- Solid and Decentralized Federated Graphs

- GPUs

- Personal Knowledge Graphs

- Universal Augmented Reality

- Javascript and AI

- UI, UX and AI

- GNNs and LNNs

- Digital Twins

- Digital Mesh

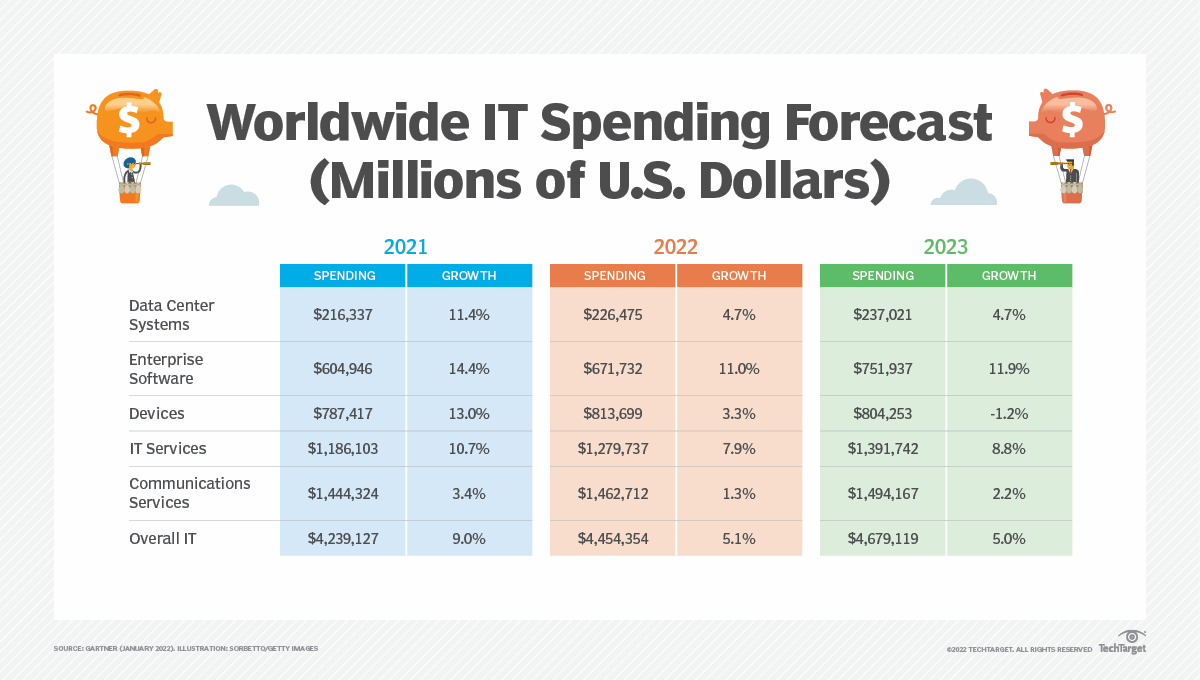

Picture of the Week

Worldwide IT Spending Forecast