

Machine Learning, Indexing and Querying

Programmers, when first learning their trade, spend a few weeks or months working on the basics: the syntax of the language, how to work with strings and numbers, how to assign variables, and how to create basic functions. Around this time, they also encounter two of their first data structures: lists and dictionaries.

Lists can be surprisingly complex structures, but in most cases they consist of sequences of items, either stored contiguously (as in an array) or with pointers (or links) from one item to the next (as in a linked list). While navigation can be handled by walking the list one item at a time, most often this is short-circuited by passing in a numeric index, starting at 0 (or 1 in some languages), that identifies the position of the required item.

A similar structure is known as a dictionary. In this case, a dictionary takes a symbol rather than a position and returns an item. This is frequently referred to as a look-up table. The symbol, or key, can be a number, a word, a phrase, or even a set of phrases, and the value it returns can range from a simple string to a complex object. Almost every database on the planet makes use of a dictionary index of some sort, and most databases actually have indexes that in turn are used to look up other indexes.

One significant way of thinking about indexing is that an index (or a dictionary) is a way of storing computations. For instance, in a document search index, the indexer reads through each document as it is loaded, and every time a word or phrase occurs (barring stop words such as articles or prepositions), that word is recorded as pointing to the document. The indexer also reduces different forms of a word to a common root term (stemming), so that different forms of the same word are treated as one word.
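The indexing step described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the stop-word list is tiny and hypothetical, and `stem` is a toy suffix stripper standing in for a real stemming algorithm such as Porter's; `build_index`, `query_and`, and `query_or` are illustrative names, not any library's API. The expensive pass over the text happens once, in `build_index`; afterwards, keyword lookups, including the AND/OR combinations of keywords discussed next, are just dictionary reads and set operations.

```python
import re
from collections import defaultdict

# Hypothetical, deliberately tiny stop-word list (articles, prepositions, conjunctions).
STOP_WORDS = {"a", "an", "the", "of", "in", "on", "to", "and", "or", "for", "with"}


def stem(word: str) -> str:
    """Toy suffix stripper (an assumption), far cruder than a real stemmer."""
    for suffix in ("ing", "ed", "es", "s"):
        # Keep at least three characters so short words are left alone.
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def build_index(docs: dict[str, str]) -> dict[str, set[str]]:
    """Map each stemmed, non-stop-word term to the ids of the documents containing it."""
    index: dict[str, set[str]] = defaultdict(set)
    for doc_id, text in docs.items():
        for word in re.findall(r"[a-z0-9]+", text.lower()):
            if word not in STOP_WORDS:
                index[stem(word)].add(doc_id)
    return dict(index)


def query_and(index: dict[str, set[str]], *terms: str) -> set[str]:
    """Intersection: documents indexed by every term."""
    sets = [index.get(stem(t.lower()), set()) for t in terms]
    return set.intersection(*sets) if sets else set()


def query_or(index: dict[str, set[str]], *terms: str) -> set[str]:
    """Union: documents indexed by at least one term."""
    return set().union(*(index.get(stem(t.lower()), set()) for t in terms))


docs = {
    "d1": "Indexing documents for search",
    "d2": "The index is used to search the documents",
    "d3": "Training machine learning models",
}
idx = build_index(docs)  # the costly pass, done once up front
print(sorted(query_and(idx, "index", "search")))   # → ['d1', 'd2']
print(sorted(query_or(idx, "indexed", "models")))  # → ['d1', 'd2', 'd3']
```

Because "indexing", "indexed", and "index" all reduce to the same root, a query for any of them hits the same entry; the work of reading the documents has been stored in the index and is reused on every query.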
This is a fairly time-consuming task, even on a fast processor, but the advantage of this approach is that once you have performed it, you only need to do it again when the document itself changes. Instead of searching through every document each time a query is made, you search the index, find which documents are indexed by the relevant keywords, then retrieve those documents within milliseconds. You can even use logical expressions to string keywords together, finding either the union (OR) or the intersection (AND) of the documents that satisfy that expression, and then return pointers to just the corresponding documents.

Machine learning, in this regard, can be seen as another form of index, especially when such learning is done primarily for classification purposes. Training a machine learning model is very much analogous to processing documents into an index for word usage or semantic matching, save that what is being consumed are training vectors, and what is produced is a model configuration that maps input vectors to target vectors.

Additionally, natural language processing is increasingly moving towards models where context is important, such as Word2Vec, BERT, and most recently GPT-2 and GPT-3. These are shifting from statistical modeling and semantic analysis to true neural networks, with context arising in part from the effective use of indexing. By being able to identify, encode, and then reference abstract tokenizations of linguistic patterns in context, the time-consuming part of natural language understanding (NLU) can be done in advance, independently of the use of these models.

A query, in this regard, can also be seen as a key, albeit a more sophisticated one. In RDF semantics, for instance, queries involve finding patterns in linked indexes, then using these to retrieve assertions that can be converted into data objects (either tables or structured content).
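The idea of a query as a key into linked indexes can be sketched with a toy triple store. This is a minimal illustration, assuming plain Python tuples for subject-predicate-object triples; `TripleStore`, `add`, and `match` are hypothetical names, not the API of any RDF library, and a real store would be queried with SPARQL. Each position (subject, predicate, object) gets its own index, so a pattern with wildcards is answered by intersecting index lookups rather than by scanning every triple.

```python
from collections import defaultdict
from typing import Optional

# A triple is (subject, predicate, object); plain strings in this sketch.
Triple = tuple[str, str, str]


class TripleStore:
    """Toy in-memory triple store (hypothetical, not an RDF library API)."""

    def __init__(self) -> None:
        self.triples: set[Triple] = set()
        # One index per position: term -> triples having that term in that slot.
        self.by_pos: list[dict[str, set[Triple]]] = [defaultdict(set) for _ in range(3)]

    def add(self, s: str, p: str, o: str) -> None:
        t = (s, p, o)
        self.triples.add(t)
        for pos, term in enumerate(t):
            self.by_pos[pos][term].add(t)

    def match(
        self, s: Optional[str] = None, p: Optional[str] = None, o: Optional[str] = None
    ) -> set[Triple]:
        """Return the triples matching a pattern; None acts as a wildcard."""
        result: Optional[set[Triple]] = None
        for pos, term in enumerate((s, p, o)):
            if term is None:
                continue
            hits = self.by_pos[pos].get(term, set())
            # Intersect per-position index lookups instead of scanning all triples.
            result = set(hits) if result is None else result & hits
        # An all-wildcard pattern matches everything.
        return set(self.triples) if result is None else result


store = TripleStore()
store.add("doc1", "topic", "indexing")
store.add("doc1", "author", "alice")
store.add("doc2", "topic", "embeddings")
store.add("doc2", "author", "bob")

# "Who wrote something about indexing?" as two pattern lookups joined on the document.
docs = {s for s, _, _ in store.match(p="topic", o="indexing")}
authors = {o for d in docs for _, _, o in store.match(s=d, p="author")}
print(authors)  # → {'alice'}
```

Joining the two lookups on a shared subject is roughly what a SPARQL basic graph pattern with a shared variable does; each resulting binding can then be turned into a row of a table.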
Graph embeddings present another approach to the same problem. It is likely that the next stage of evolution in this realm of search and query will be the creation of dynamically generated queries against machine learning models, in essence turning the corresponding machine learning model into a contextual database that can be mined for inferential insight. In that regard, neural-network-based machine learning systems will increasingly take on the characteristics of indexed databases, in a manner similar to that currently employed by SQL databases, structured document repositories, and n-tuple data stores.

At this point, such query systems are likely still a few years out, but the groundwork increasingly points towards the notion of model as database. This in turn will drive new applications built on such systems, in both the natural language understanding (NLU) and natural language generation (NLG) realms. It is my expectation that these areas, perhaps even more than automated visual recognition, will become the hallmark of artificial intelligence in the future, as the understanding of language is essential to the development of any cognitive social infrastructure.

In media res,
Kurt Cagle

DSC Featured Articles

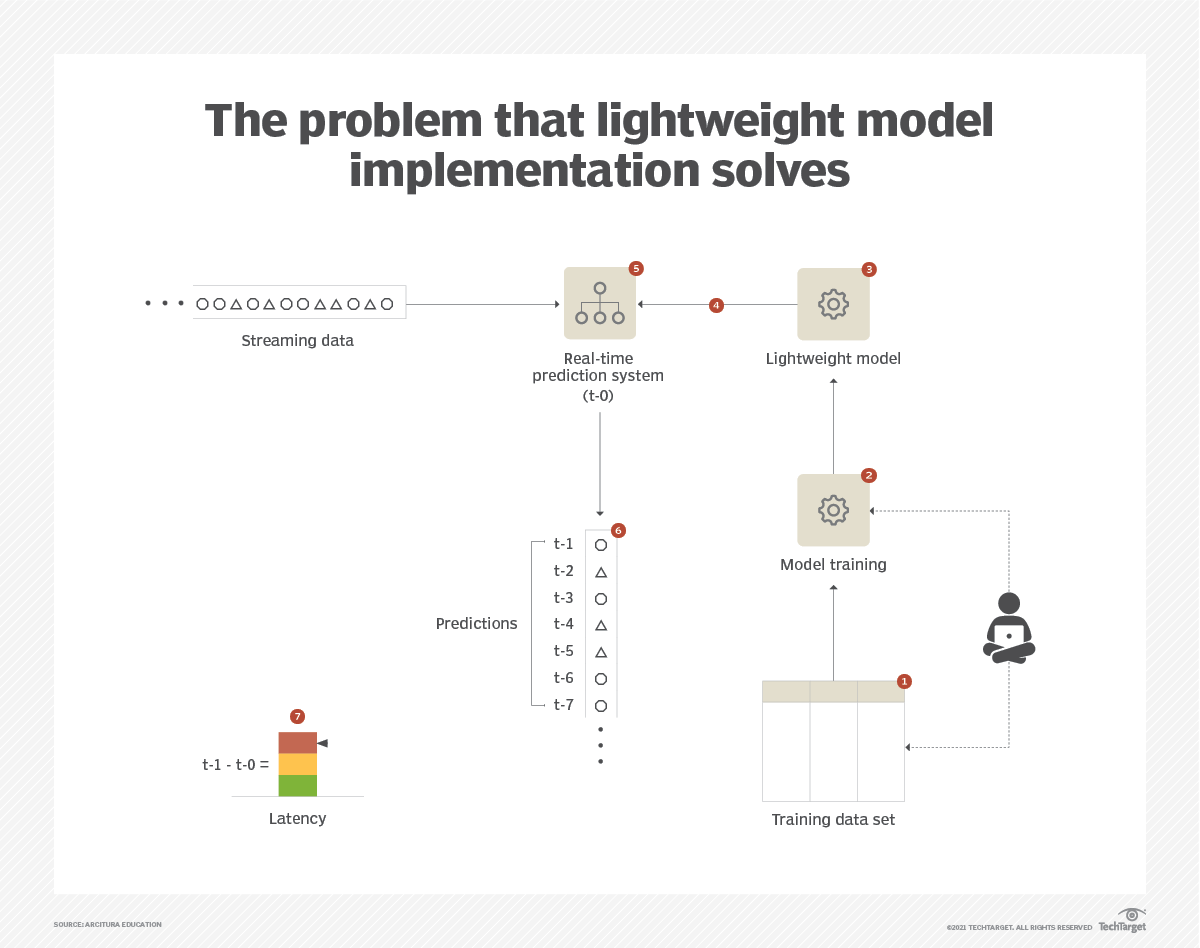

TechTarget ArticlesPicture of the Week

To make sure you keep getting these emails, please add [email protected] to your browser’s address book.

Join Data Science Central | Comprehensive Repository of Data Science and ML Resources

This email, and all related content, is published by Data Science Central, a division of TechTarget, Inc.

Copyright 2021 TechTarget, Inc. All rights reserved.