We’re moving at the Cagle house and we’re discovering that, after eight years of living at the same place, one family can collect a lot of crap. The issue came up, in discussions with my spouse, that my mother-in-law had no sense of organization — which seemed odd because my wife’s mother was the kind of person to organize wrapping paper for holidays by season and family. On the other hand, my wife, a writer, has a particular scheme for organizing the dishes in the dishwasher and for finding her books on educational theory in the house’s myriad bookshelves, but has a largish pile of boxes specifically labeled “Christmas Stuff.” My daughter, an artist, has bookshelves full of manga categorized by Japanese publishers, titles, and issues, has drawers designated for drawing pads, pens, buttons that she produces, and similar content. Yet her clothes are mostly scattered in her room, and games are stacked helter-skelter.

If three genetically related people could have such wildly divergent ways of organizing informational content, why is it surprising that organizations with thousands of people in them have so much difficulty organizing their relevant information space? Organizations are organic. They come into existence at different points in time, and they usually form as smaller groups that then get absorbed into varying (and not always consistent) departments. Every so often this structure gets shaken up in order to become more flexible in the face of change.

In the last couple of decades, two distinct roles have emerged in organizations: the taxonomist and the ontologist. The taxonomist ordinarily works within an existing knowledge representation system to assign content to the appropriate category. One of the roles of a librarian, for instance, is to take a previously unclassified work, such as a textbook, and determine the classification that most closely matches it. The simplest of these schemes creates a direct addressing system such as the Dewey Decimal System or the Library of Congress classification schema, in which a line is divided up into different segments and each segment in turn is assigned a set of related values.
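To make the "segments of a line" idea concrete, here is a minimal sketch that treats the range 0 to 999 as a line and looks up which top-level Dewey segment a call number falls into. The function name `main_class` and the shortened class labels are illustrative choices of mine, not an official encoding of the scheme.

```python
from bisect import bisect_right

# Top-level Dewey classes, each occupying a 100-wide segment of the line 0..999.
# Labels are abbreviated for illustration.
DEWEY_MAIN_CLASSES = [
    (0, "Computer science, information and general works"),
    (100, "Philosophy and psychology"),
    (200, "Religion"),
    (300, "Social sciences"),
    (400, "Language"),
    (500, "Science"),
    (600, "Technology"),
    (700, "Arts and recreation"),
    (800, "Literature"),
    (900, "History and geography"),
]
_SEGMENT_STARTS = [start for start, _ in DEWEY_MAIN_CLASSES]


def main_class(call_number: float) -> str:
    """Return the label of the segment that contains call_number."""
    if not 0 <= call_number < 1000:
        raise ValueError("call number must lie on the line [0, 1000)")
    # Find the last segment whose start is <= call_number.
    index = bisect_right(_SEGMENT_STARTS, call_number) - 1
    return DEWEY_MAIN_CLASSES[index][1]


print(main_class(510))     # a mathematics text sits in the 500s
print(main_class(813.54))  # a novel sits in the 800s
```

The taxonomist's job corresponds to choosing the number; the segment table itself is the part someone had to design beforehand.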

This kind of system makes sense for a library because a library can be seen as a single continuous row of books that are broken down into cases and shelves respectively, with each shelf having a certain amount of space to grow as more items are added into the collection. This ensures that related information is (for the most part) in contiguous parts of the building. Admittedly, eventually the number of items exceeds the shelf space and the position of information in space has to be recalculated within a new library.

What taxonomists generally don’t do is determine the system used for classification in the first place. Melvil Dewey was not the first ontologist – you’d have to go all the way back to Aristotle for that particular distinction – but he was memorable in that he abstracted out an organizational trend (the tendency to place related works in the same rooms and shelves), and combined it with a novel realization (the bookshelves of a library could be thought of as segments of a line) in order to build a classification system that worked well for libraries. This is what an ontologist does – they conceptualize an organizational scheme. In a given company, this scheme may have many names, but the most prominent one is the Enterprise Data Model (EDM).

EDMs started appearing around the turn of the millennium, primarily as an evolution of what analysts call “data dictionaries.” Data dictionaries in turn are direct descendants of contracts. In law, you must first declare the terms that you are going to use in the contract, then you must define what you mean by each term in the context of a legal framework. Note that what contracts generally define are generalities (classes) and instances (things that satisfy the constraints of those classes). The terms thus defined are the variables that define the contract template, and when these variables have been assigned and agreed to (validated), then the contract can be used to direct the stipulations of work.

To go back to our previously discussed roles, the ontologist is the lawyer who draws up the contract template, identifying the various parties, actions, limitations, restrictions, conditions, satisfactions and penalties. The taxonomist is then like a different lawyer who works with the participants to fill out the contract.
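The contract analogy can be sketched in a few lines of code. In this hypothetical example, `Term` and `ContractTemplate` play the part of the ontologist's work (declaring terms and their constraints), while the call to `fill` plays the part of the taxonomist's work (assigning and validating values). All of the names and the sample terms are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, Tuple


@dataclass(frozen=True)
class Term:
    """A declared term: its name, its definition, and its constraint."""
    name: str
    definition: str
    is_valid: Callable[[Any], bool]


@dataclass(frozen=True)
class ContractTemplate:
    """The template: a fixed set of declared terms."""
    terms: Tuple[Term, ...]

    def fill(self, values: Dict[str, Any]) -> Dict[str, Any]:
        """Assign values to every term, rejecting anything undeclared or invalid."""
        declared = {term.name for term in self.terms}
        unknown = set(values) - declared
        if unknown:
            raise ValueError(f"undeclared terms: {sorted(unknown)}")
        for term in self.terms:
            if term.name not in values:
                raise ValueError(f"missing term: {term.name}")
            if not term.is_valid(values[term.name]):
                raise ValueError(f"invalid value for term: {term.name}")
        return dict(values)


# The ontologist drafts the template ...
template = ContractTemplate(terms=(
    Term("contractor", "party performing the work",
         lambda v: isinstance(v, str) and v != ""),
    Term("fee_usd", "amount owed on completion",
         lambda v: isinstance(v, (int, float)) and v > 0),
))

# ... and the taxonomist fills it out for a specific case.
contract = template.fill({"contractor": "Acme Ltd", "fee_usd": 1200})
```

A filled-out contract is only accepted once every declared term has a value that satisfies its constraint, which mirrors the "assigned and agreed to (validated)" step described above.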

This analogy is useful from an automation standpoint. When you create a program, you are in essence doing the same thing as creating a contract template. You are defining the variables, often using pre-existing libraries of terms, then specifying the actions, constraints, exception logic, and so forth. Ideally, you want to eliminate side effects, meaning that when you apply the same input data, you should always get the same output data.

This side-effect-free (aka declarative) approach has subtle implications: things like time, position, and random seeds always have to be external inputs, as do streams. It often means that you are transforming a graph of information into a different graph. It also means that you generally do not destroy data, creating new data rather than overwriting the old, which tends to make the approach computationally heavier. This becomes especially important once you start working at an enterprise scale.
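As a small illustration of what "time and random seeds have to be external inputs" looks like in practice, the sketch below passes both in as arguments rather than reading the system clock or a global random generator. The function `make_ticket` and its fields are hypothetical.

```python
import random
from datetime import datetime, timezone


def make_ticket(order_id: str, now: datetime, seed: int) -> dict:
    """Build a ticket from explicit inputs only; no clock or global state is read."""
    rng = random.Random(seed)  # a local generator, fully determined by the seed
    return {
        "order_id": order_id,
        "issued_at": now.isoformat(),
        "token": rng.randrange(10**6),
    }


fixed_now = datetime(2022, 3, 1, tzinfo=timezone.utc)
first = make_ticket("A-17", fixed_now, seed=42)
second = make_ticket("A-17", fixed_now, seed=42)

# Same inputs, same output: the call can be repeated, cached, or audited.
assert first == second
```

Had the function called `datetime.now()` or the module-level `random` functions internally, two calls with the same arguments could return different results.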

In an enterprise data model, you do not have the luxury of arbitrarily defining variables or even methods. You are instead extracting a graph, transforming that graph, and inserting a new graph into the overall graph set. Put another way, even ten years ago the dominant model of software development was to focus on the application first, with the database subordinate to (and usually hidden by) the application logic, making for very siloed data systems. Increasingly, that’s getting turned on its head, with the data existing before the applications, the applications querying the data system for the data that they need, then returning control back to the context of the database. The application becomes subordinate to the data. Fifty years of proven practices have been rendered moot as a consequence.
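The extract, transform, insert cycle can be sketched with nothing more than sets of subject-predicate-object triples. This is a toy model under my own assumptions (the `reportsTo` and `manages` predicates and the sample data are invented), not a stand-in for a real triple store, but it shows the key property: the original graph is never modified, and the result is a new, larger graph.

```python
from typing import FrozenSet, Tuple

Triple = Tuple[str, str, str]  # (subject, predicate, object)
Graph = FrozenSet[Triple]


def extract(graph: Graph, predicate: str) -> Graph:
    """Pull out the subgraph of statements that use one predicate."""
    return frozenset(t for t in graph if t[1] == predicate)


def transform(subgraph: Graph) -> Graph:
    """Derive inverse 'manages' statements from 'reportsTo' statements."""
    return frozenset((obj, "manages", subj) for subj, _, obj in subgraph)


def insert(graph: Graph, new: Graph) -> Graph:
    """Return a new graph containing both; the inputs are left untouched."""
    return graph | new


enterprise: Graph = frozenset({
    ("alice", "reportsTo", "bob"),
    ("carol", "reportsTo", "bob"),
    ("bob", "worksIn", "finance"),
})

updated = insert(enterprise, transform(extract(enterprise, "reportsTo")))
```

Because `enterprise` is immutable, any application that queried it earlier still sees the same data, while later queries can use `updated`.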

We are finding increasingly sophisticated ways of storing, categorizing, and invoking actions from data, but because that data is a common, trusted resource, the design of that data becomes paramount. At the same time, the design itself assumes a particular bias or viewpoint, and as the beginning of this article showed, everyone (and every organization, and even every department within that organization) has their own particular perspective. The next stage of ontology will be bridging that gap, reducing the need for the specialized ontologist. I believe we are getting close to seeing that next stage become real, making it possible for our systems to learn our preferred mechanisms for organizing.

In Media Res

Kurt Cagle

Community Editor,

Data Science Central

Data Science Central Editorial Calendar

DSC is looking for editorial content specifically in these areas for March, with these topics having higher priority than other incoming articles.

- Military AI

- Knowledge Graphs and Modeling

- Metaverse and Shared Worlds

- Cloud and GPU

- Data Agility

- Astronomical AI

- Intelligent User Interfaces

- Verifiable Credentials

- Digital Twins

- 3D Printing

DSC Featured Articles

- The Hybrid to Give Your AI the Gift of Knowledge Marco Varone on 01 Mar 2022

- Ten years of Google Knowledge Graph Alan Morrison on 01 Mar 2022

- How to Make Glowing Visualizations – Literally Vincent Granville on 01 Mar 2022

- Abundance Mentality is Key to Exploiting the Economics of Data Bill Schmarzo on 28 Feb 2022

- DeFi platforms: What dumb data and dumb code have in common Alan Morrison on 28 Feb 2022

- DSC Weekly Digest 22 February 2022: Graphology Kurt Cagle on 28 Feb 2022

- Application Integration vs Data Integration: A Comparison Ryan Williamson on 28 Feb 2022

- Why We Can’t Trust AI to Run The Metaverse Stephanie Glen on 28 Feb 2022

- Could an explainable model be inherently less secure? ajitjaokar on 28 Feb 2022

- Amazing Benefits of Big Data Analytics Career Aileen Scott on 27 Feb 2022

- Approach to AGI: how can a “synthetic being” learn? Ignacio Marrero Hervas on 27 Feb 2022

- Fuzzy Bootstrap Matching Dale Harrington on 22 Feb 2022

- Data Observability Goes Far Beyond Data Quality Monitoring and Alerts Sameer Narkhede on 22 Feb 2022

- An Overview of the Big Data Engineer Aileen Scott on 22 Feb 2022

- A Framework for Understanding Transformation–Part III Howard M. Wiener on 22 Feb 2022

- How to Ensure Data Quality and Integrity? Indhu on 22 Feb 2022

- IoT in Construction — Construction Technology’s Next Frontier Nikita Godse on 22 Feb 2022

- Scene Graphs and Semantics Kurt Cagle on 22 Feb 2022

- AWS Cloud Security: Best Practices Ryan Williamson on 22 Feb 2022

- Top Best Practices to Keep in Mind for Azure Cloud Migration Ryan Williamson on 22 Feb 2022

- The One Algorithm Change That Could Impact the World ajitjaokar on 22 Feb 2022

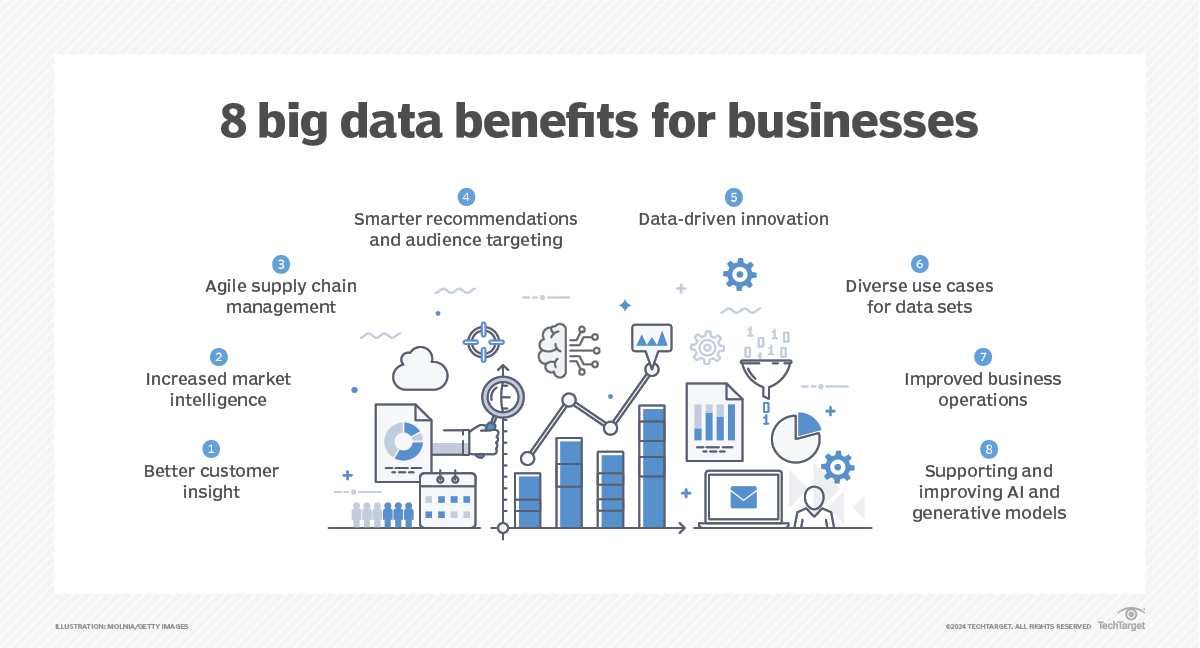

Picture of the Week