It is no coincidence that companies are investing in AI at unprecedented levels at a time when they are under tremendous pressure to innovate. The artificial intelligence models developed by data scientists give enterprises new insights, enable new and more efficient ways of working, and help identify opportunities to reduce costs and introduce profitable new products and services.

The possibilities for AI use grow almost daily, so it's important not to limit innovation. Unfortunately, many organizations do just that by tethering themselves to proprietary tools and solutions. This can handcuff data scientists and IT as new innovations become available, and it results in higher costs than an open environment that supports best-of-breed AI model development and management. This article explains why it is important to avoid proprietary lock-in in enterprise AI and presents guidance for doing so.

AI Evolution Has Similarities to Analytics, But the Differences Are More Important

AI grew out of data analytics, which later evolved into business analytics and business intelligence. Early analytics were dominated by a few proprietary solutions, each with only a limited ecosystem of innovative companies developing complementary tools and technologies to enhance the vendor platform. That slowed analytics adoption.

The AI market is the Wild West in comparison. Hundreds of companies, ranging from some of the best-known names in tech to incredibly innovative startups, are offering solutions for every stage of AI and the AI model life cycle. Enterprises don't have to use the same limited tools and techniques as their competitors, and they are taking advantage. In 2021, 81% of financial services companies were using more than one AI model development tool, and 42% were using at least five, according to the 2021 State of ModelOps report. That doesn't even count the tools and solutions used for other stages of AI and the model life cycle.

Many tools are oriented to developing models for a specific purpose (e.g. fraud detection, assisted shopping) or execution environment (e.g. in-house hardware or AWS and other hyper cloud services). Having multiple purpose-built, best-of-breed model development options available to data scientists has broadened the scope of use cases that are possible and valuable, which has helped AI use grow within organizations.

No Single Tool Is Best for the Entire AI Model Life Cycle

AI lock-in occurs when organizations rely on the same tool they used to create models to also run and manage them in production. That may have been OK in the early days of analytics when models and model operations were more transparent, but models are too sophisticated now. The requirements and tools for model development are very different from those for model operations. Models in production must interact with many more systems, including business applications, risk management frameworks, IT systems, information security controls and more.

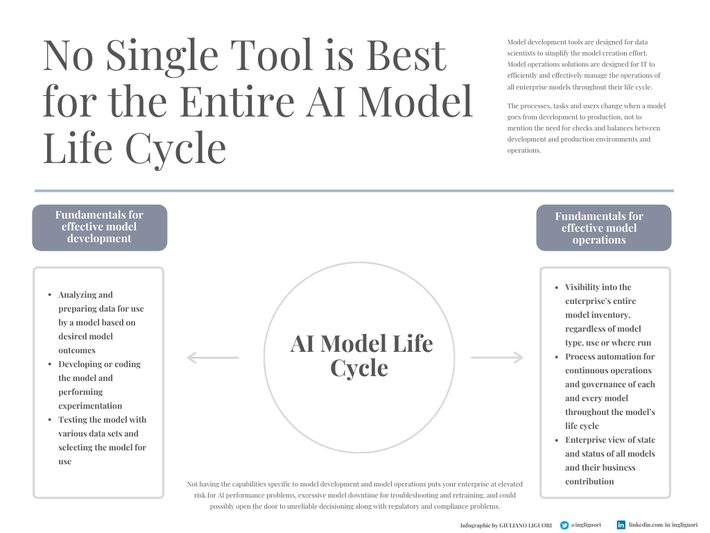

Model development tools are designed for data scientists to simplify the model creation effort. Model operations solutions are designed for IT to efficiently and effectively manage the operations of all enterprise models throughout their life cycle.

The processes, tasks and users change when a model goes from development to production, not to mention the need for checks and balances between development and production environments and operations.

The fundamentals for effective model development are:

- Analyzing and preparing data for use by a model based on desired model outcomes

- Developing or coding the model and performing experimentation

- Testing the model with various data sets and selecting the model for use

The fundamentals for effective model operations are:

- Visibility into the enterprise's entire model inventory, regardless of model type, use or where each model runs

- Process automation for continuous operations and governance of each and every model throughout the model's life cycle

- Enterprise view of state and status of all models and their business contribution
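
As a rough illustration of what a technology-agnostic inventory could look like, the sketch below keeps a single registry of models regardless of the tool that built them or where they run. Every name in it (`ModelRecord`, `ModelInventory`, the example models, tools and environments) is hypothetical and is not drawn from any particular ModelOps product.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelRecord:
    """One entry in the enterprise model inventory (hypothetical schema)."""
    name: str
    use_case: str
    dev_tool: str      # tool used to build the model
    environment: str   # where the model runs in production
    status: str        # e.g. "production", "retraining", "retired"


class ModelInventory:
    """Single registry for all models, independent of tool or environment."""

    def __init__(self) -> None:
        self._records: dict[str, ModelRecord] = {}

    def register(self, record: ModelRecord) -> None:
        # Later registrations with the same name overwrite earlier ones.
        self._records[record.name] = record

    def by_status(self, status: str) -> list[ModelRecord]:
        return [r for r in self._records.values() if r.status == status]

    def count_by(self, field: str) -> Counter:
        """Count models grouped by any record field, e.g. 'environment'."""
        return Counter(getattr(r, field) for r in self._records.values())


# Illustrative entries only; the tools and environments are assumptions.
inventory = ModelInventory()
inventory.register(ModelRecord("fraud-detector", "fraud detection", "scikit-learn", "in-house", "production"))
inventory.register(ModelRecord("shopping-assistant", "assisted shopping", "TensorFlow", "AWS", "production"))
inventory.register(ModelRecord("churn-scorer", "customer retention", "R", "GCP", "retraining"))

print(inventory.count_by("environment"))
print([r.name for r in inventory.by_status("production")])
```

Because the record stores the development tool and execution environment as plain attributes rather than assuming either, the same view covers every model in the enterprise.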

Not having the capabilities specific to model development and model operations puts your enterprise at elevated risk for AI performance problems, excessive model downtime for troubleshooting and retraining, and could possibly open the door to unreliable decisioning along with regulatory and compliance problems.

It doesn't have to be that way. There are model operations (ModelOps) management solutions that are technology-agnostic, so they can support all the development tools and execution environments your data science team might want to use. The fewer management tools you need, the easier your AI program will be to scale, orchestrate and manage.

Flexibility Helps Win the Talent War

It's no secret that data scientists are in high demand. Imagine you're an experienced data scientist mulling over some of the well-paying job opportunities that recruiters present to you each week. Which of the following positions would you be more likely to respond to?

Data scientist with experience in [specific AI programming language] needed to create and maintain models running in [specific IT infrastructure].

or

Use your favoured tools and development environment to break new ground with AI in our industry.

Your organization should not miss out on the chance to hire someone with specialized, coveted skills because you couldn't accommodate the experience that made the person valuable in the first place.

The Cloud Won't Bring More Clarity

There is a school of thought that as AI matures its ecosystem will thin out, and eventually most AI models will run in the cloud. Therefore, the thinking goes, an enterprise can meet its AI model operations management needs through a solution developed for or by its favoured cloud environment. In reality, that isn't the situation today and probably never will be. Hybrid, multi-cloud environments are the norm for AI: 58% of financial services companies run at least some of their AI models in AWS, but 58% also run models in Google Cloud Platform (GCP), 27% run them in Azure, and 48% execute their AI models in-house [1]. These figures total more than 100%, which means enterprises are embracing hybrid infrastructure and running models in different places, choosing the best environment to execute each particular model. That approach should also be taken for managing model operations. Committing to an AI ModelOps solution that is optimized for one cloud platform creates the classic vendor lock-in problems. Lock-in presents problems for any area of business operations; for artificial intelligence, it can place costly, unnecessary limitations on what AI can do for the enterprise.
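
The overlap in those figures can be made concrete with a little arithmetic. The sketch below uses only the four percentages cited above and assumes they all refer to the same set of surveyed companies. The variable names are illustrative, and the calculation says nothing about which combinations of environments are most common.

```python
# Share of financial services companies running at least some models
# in each environment, as cited in the text [1].
usage_share = {"AWS": 0.58, "GCP": 0.58, "Azure": 0.27, "in-house": 0.48}

total_share = sum(usage_share.values())

# Each company is counted once per environment it uses, so the sum of the
# shares equals the average number of these environments per company.
avg_environments = total_share

# Anything above 100% can only come from companies using more than one.
overlap = total_share - 1.0

print(f"Total: {total_share:.0%}")
print(f"Average environments per company: {avg_environments:.2f}")
print(f"Overlap beyond 100%: {overlap:.0%}")
```

Under that assumption the shares sum to 191%, so the average surveyed company runs models in nearly two of the four environments listed.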

Your AI innovations and efforts are too important to trust to a single solution, execution environment or limited set of vendors. You don't manage your IT systems or business applications with the same tools that were used to develop them, so why not take the same approach with AI? Enterprises need to open themselves to take advantage of all the possibilities that AI maturity and advancements are bringing about. Develop an AI model operations platform that can support a wide range of tooling and future advances, give your data science teams the freedom to innovate, and give your IT teams the ability to efficiently and effectively govern and manage all AI models across the enterprise at scale, regardless of how they were developed, what they are used for, or where they are run.

[1] Corinium Intelligence and ModelOp, 2021 State of ModelOps Report, April 14, 2021.