This article was written by Tinniam V Ganesh.

This is the first in a series of posts I intend to write on Deep Learning. This post is inspired by the Deep Learning Specialization by Prof Andrew Ng on Coursera and Neural Networks for Machine Learning by Prof Geoffrey Hinton, also on Coursera. In this post I implement Logistic Regression as a 2-layer Neural Network, i.e. a Neural Network that has just an input layer and an output layer, with no hidden layer. I am certain that any self-respecting Deep Learning/Neural Network practitioner would consider a Neural Network without hidden layers to be no Neural Network at all!

This 2-layer network is implemented in Python, R and Octave. I have included Octave in the mix because it is a close cousin of Matlab. The Python, R and Octave implementations are equivalent and vectorized, so if you are familiar with any one of the languages, you should be able to follow the corresponding code in the other two. You can download this R Markdown file and Octave code from DeepLearning-Part 1.

Check out my video presentations, which discuss the derivations in detail:

1. Elements of Neural Networks and Deep Learning – Part 1

2. Elements of Neural Networks and Deep Learning – Part 2

To start with, Logistic Regression is performed using sklearn’s logistic regression package on the cancer data set, which is also from sklearn.

1. Logistic Regression
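As a minimal sketch of this baseline, the snippet below fits sklearn's `LogisticRegression` on the cancer data set loaded with `load_breast_cancer`. The train/test split, the `random_state` and the raised `max_iter` are assumptions made here for illustration and are not taken from the original code.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

# Load the cancer data set: 30 input features, binary target (0 or 1)
X, y = load_breast_cancer(return_X_y=True)

# Hold out part of the data for testing (split parameters are an assumption)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

# max_iter is raised (an assumption) because the features are unscaled
# and the default solver may need more iterations to converge
clf = LogisticRegression(max_iter=10000)
clf.fit(X_train, y_train)

train_acc = clf.score(X_train, y_train)
test_acc = clf.score(X_test, y_test)
print(f"Training accuracy: {train_acc:.2f}")
print(f"Test accuracy: {test_acc:.2f}")
```

The accuracies reported here give a reference point against which the hand-written 2-layer network can be compared.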

For other classification algorithms, see my post Practical Machine Learning with R and Python – Part 2.

Check out my book ‘Deep Learning from first principles: Second Edition – In vectorized Python, R and Octave’. The book starts with the implementation of a simple 2-layer Neural Network and works its way up to a generic L-Layer Deep Learning Network, with all the bells and whistles. The derivations are discussed in detail. The code is extensively commented and included in its entirety in the Appendix sections. The book is available on Amazon in paperback ($14.99) and Kindle ($9.99/Rs449) versions.

You may also like my companion book “Practical Machine Learning with R and Python: Second Edition – Machine Learning in stereo”, available on Amazon in paperback ($10.99) and Kindle ($7.99/Rs449) versions. This book is ideal as a quick reference for the various ML functions and associated measurements in both R and Python, which are essential before delving into Deep Learning.

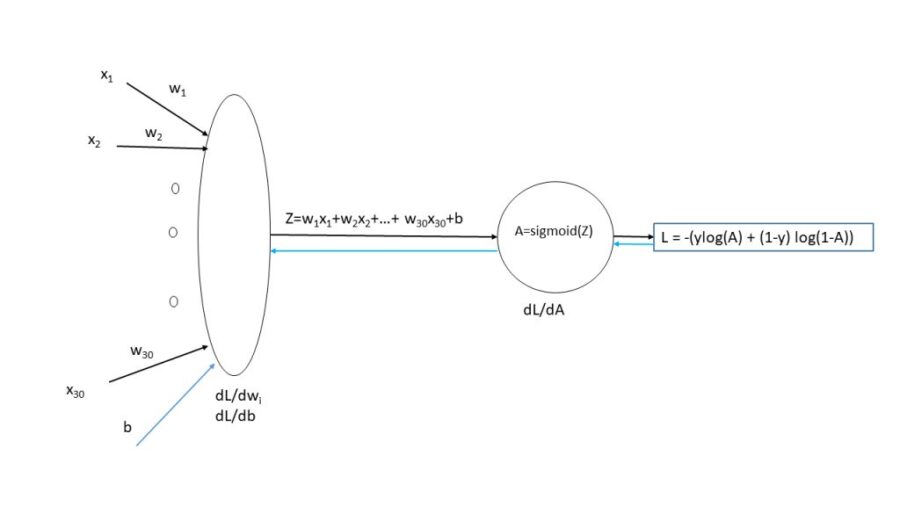

2. Logistic Regression as a 2 layer Neural Network

In the following section, Logistic Regression is implemented as a 2-layer Neural Network in Python, R and Octave. The same cancer data set from sklearn is used to train and test the Neural Network in all three languages.

The cancer data set has 30 input features, and the target variable ‘output’ is either 0 or 1. Hence the sigmoid activation function will be used in the output layer for classification.
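The idea can be sketched in vectorized NumPy as follows. This is an illustrative sketch rather than the article's own code: the function names `sigmoid`, `train_logistic_nn` and `predict`, the hyperparameters, and the synthetic 30-feature data (used in place of the cancer data set so the snippet stays self-contained) are all assumptions.

```python
import numpy as np

def sigmoid(z):
    """Sigmoid activation used in the output layer."""
    return 1.0 / (1.0 + np.exp(-z))

def train_logistic_nn(X, Y, num_iterations=1000, learning_rate=0.1):
    """Train a network with no hidden layer by batch gradient descent.

    X has shape (n_features, m) and Y has shape (1, m), with labels 0 or 1.
    """
    n, m = X.shape
    w = np.zeros((n, 1))  # one weight per input feature
    b = 0.0               # bias of the single output unit
    for _ in range(num_iterations):
        A = sigmoid(w.T @ X + b)   # forward pass, shape (1, m)
        dZ = A - Y                 # gradient of cross-entropy loss w.r.t. Z
        dw = (X @ dZ.T) / m        # gradient w.r.t. weights, shape (n, 1)
        db = np.sum(dZ) / m        # gradient w.r.t. bias
        w -= learning_rate * dw
        b -= learning_rate * db
    return w, b

def predict(w, b, X):
    """Classify as 1 when the sigmoid output exceeds 0.5."""
    return (sigmoid(w.T @ X + b) > 0.5).astype(int)

# Synthetic, linearly separable data with 30 features (an assumption,
# standing in for the cancer data set)
rng = np.random.default_rng(0)
m = 200
X = rng.standard_normal((30, m))
true_w = rng.standard_normal((30, 1))
Y = ((true_w.T @ X) > 0).astype(int)

w, b = train_logistic_nn(X, Y)
accuracy = np.mean(predict(w, b, X) == Y)
print(f"Training accuracy: {accuracy:.2f}")
```

Each training example is stored as a column here, so the forward and backward passes for all examples are computed with a single matrix product and no explicit loop over examples.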

To read the whole article, with formulas and illustrations, click here.