This article was written by Jon Krohn.

At untapt, all of our models involve Natural Language Processing (NLP) in one way or another. Our algorithms consider the natural, written language of our users’ work experience and, based on real-world decisions that hiring managers have made, we can assign a probability that any given job applicant will be invited to interview for a given job opportunity.

With the breadth and nuance of natural language that job-seekers provide, these are computationally complex problems. We have found deep learning approaches to be uniquely well-suited to solving them. Deep learning algorithms:

- can readily include millions of model parameters that are free to interact non-linearly;

- can incorporate learning units designed specifically for use with sequential data, like natural language or financial time series data; and,

- are typically far more efficient in production environments than traditional machine learning approaches for NLP.
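The second point above refers to recurrent learning units. Here is a minimal sketch of the idea, not code from the lessons: a plain-Python function that runs the simplest such unit, a vanilla (Elman-style) recurrent cell, over a short sequence. The weights and inputs are hypothetical toy values, not trained parameters. In practice you would use a library layer such as Keras's `SimpleRNN` or `LSTM` rather than hand-written loops.

```python
import math

def rnn_forward(inputs, W_x, W_h, b):
    """Run a vanilla RNN over a sequence of input vectors.

    h_t = tanh(W_x . x_t + W_h . h_{t-1} + b), starting from h_0 = 0.
    Returns the list of hidden states, one per time step.
    """
    hidden_size = len(b)
    h = [0.0] * hidden_size  # initial hidden state
    states = []
    for x in inputs:
        new_h = []
        for i in range(hidden_size):
            total = b[i]
            total += sum(W_x[i][j] * x[j] for j in range(len(x)))   # input contribution
            total += sum(W_h[i][k] * h[k] for k in range(hidden_size))  # recurrent contribution
            new_h.append(math.tanh(total))
        h = new_h  # carry the state forward to the next time step
        states.append(h)
    return states

# Hypothetical toy weights: 2-dimensional inputs, 2-dimensional hidden state.
W_x = [[0.5, -0.3], [0.1, 0.8]]
W_h = [[0.2, 0.0], [0.0, 0.2]]
b = [0.0, 0.0]

# Stand-ins for three consecutive word embeddings.
sequence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
states = rnn_forward(sequence, W_x, W_h, b)
print(len(states), [round(v, 3) for v in states[-1]])
```

Because `h` is fed back in at every step, the final state depends on word order, and reversing the sequence generally produces a different final state. LSTM and GRU units add gating mechanisms on top of this same recurrence.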

To share my love of deep learning for NLP, I have created five hours of video tutorial content paired with hands-on Jupyter notebooks. Following on from my acclaimed Deep Learning with TensorFlow LiveLessons, which introduced the fundamentals of artificial neural networks, my Deep Learning for Natural Language Processing LiveLessons similarly embrace interactivity and intuition, enabling you to rapidly develop a specialization in state-of-the-art NLP.

These tutorials are for you if you’d like to learn how to:

- preprocess natural language data for use in machine learning applications;

- transform natural language into numerical representations (with word2vec);

- make predictions with deep learning models trained on natural language;

- apply advanced NLP approaches with Keras, the high-level TensorFlow API; or

- improve deep learning model performance by tuning hyperparameters.
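The first two objectives, preprocessing text and turning it into numbers, can be sketched with a small standard-library pipeline. This is an illustrative assumption, not the lessons' own code. It lowercases, strips punctuation, drops stop words from a hypothetical mini stop-word list, builds a frequency-ordered vocabulary, and converts each document to a fixed-length sequence of integer indices.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny stop-word list; real projects usually use a fuller one.
STOP_WORDS = {"a", "an", "the", "and", "of", "to", "in", "is", "was"}

def tokenize(text):
    """Lowercase, replace punctuation with spaces, split, and drop stop words."""
    text = text.lower()
    text = re.sub(r"[^a-z0-9\s]", " ", text)
    return [tok for tok in text.split() if tok not in STOP_WORDS]

def build_vocab(docs, max_words=None):
    """Map tokens to integer indices, most frequent first.

    Index 0 is reserved for padding and index 1 for out-of-vocabulary tokens.
    """
    counts = Counter(tok for doc in docs for tok in tokenize(doc))
    vocab = {"<pad>": 0, "<unk>": 1}
    for tok, _ in counts.most_common(max_words):
        vocab[tok] = len(vocab)
    return vocab

def encode(doc, vocab, maxlen):
    """Convert one document into a list of exactly `maxlen` integer indices."""
    ids = [vocab.get(tok, vocab["<unk>"]) for tok in tokenize(doc)]
    ids = ids[:maxlen]  # keep only the first maxlen tokens of long documents
    return [vocab["<pad>"]] * (maxlen - len(ids)) + ids  # pad short documents at the front

docs = [
    "The movie was brilliant, and the acting was superb!",
    "A dull, boring movie.",
]
vocab = build_vocab(docs)
encoded = [encode(d, vocab, maxlen=6) for d in docs]
print(encoded)
```

Padding at the front (pre-padding) is a common choice for recurrent models, because the real tokens then sit closest to the final hidden state. Reserving a dedicated unknown-token index lets the encoder handle words never seen during vocabulary building.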

Below is a summary of the topics covered in my five Deep Learning for NLP lessons (a full breakdown is available in my GitHub repository):

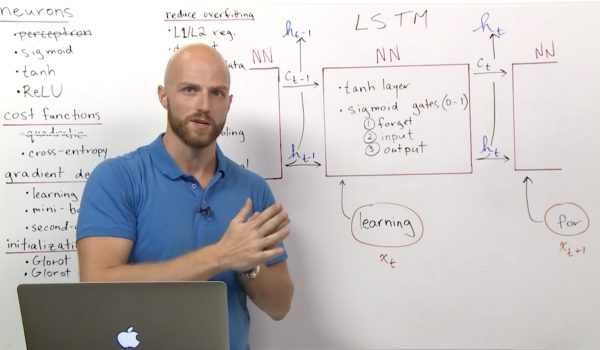

Lesson One: Introduction to Deep Learning for Natural Language Processing

- high-level overview of deep learning as it pertains to NLP specifically

- how to train deep learning models on an Nvidia GPU if you fancy quicker model-training times

- a summary of the key concepts introduced in my Deep Learning with TensorFlow LiveLessons, which serve as the foundation for the material in these NLP-focused LiveLessons

Lesson Two: Word Vectors

- use demos to build an intuitive understanding of vector-space embeddings of words, that is, nuanced, quantitative representations of word meaning

- discover resources for pre-trained word vectors as well as natural language data sets

- create your own vector-space embeddings with word2vec, including bespoke, interactive visualizations of the resulting word vectors
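Two small building blocks can illustrate the word-vector topics above. The plain-Python toy below is a sketch under simplifying assumptions, not a word2vec implementation. It generates the (target, context) training pairs used by word2vec's skip-gram architecture, and it measures how close two word vectors are with cosine similarity. The three-dimensional vectors are invented for illustration. Full word2vec training adds refinements such as negative sampling and subsampling of frequent words, and libraries such as gensim provide complete implementations.

```python
import math

def skipgram_pairs(tokens, window=2):
    """Generate (target, context) pairs in the style of word2vec's skip-gram setup.

    Every word within `window` positions of the target counts as a context word.
    """
    pairs = []
    for i, target in enumerate(tokens):
        start = max(0, i - window)
        end = min(len(tokens), i + window + 1)
        for j in range(start, end):
            if j != i:  # a word is not its own context
                pairs.append((target, tokens[j]))
    return pairs

def cosine_similarity(u, v):
    """Cosine of the angle between two vectors: 1 = same direction, 0 = orthogonal."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

tokens = ["deep", "learning", "for", "natural", "language"]
print(skipgram_pairs(tokens, window=1))

# Hypothetical 3-d "embeddings" for illustration only; trained word2vec
# vectors typically have tens to hundreds of dimensions.
vectors = {
    "king": [0.8, 0.6, 0.1],
    "queen": [0.7, 0.7, 0.2],
    "banana": [0.1, -0.2, 0.9],
}
print(cosine_similarity(vectors["king"], vectors["queen"]))
print(cosine_similarity(vectors["king"], vectors["banana"]))
```

In a trained embedding space, words used in similar contexts end up with high cosine similarity. The toy vectors are chosen to mimic that, with "king" much closer to "queen" than to "banana".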

Lesson Three: Modeling Natural Language Data

- best practices for preprocessing natural language data

- calculating the receiver operating characteristic (ROC) curve to evaluate the performance of classification models

- pair vector-space embeddings with the fundamentals of deep learning introduced in my Deep Learning with TensorFlow LiveLessons to build dense and convolutional neural networks that classify documents by their sentiment
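The ROC evaluation step above can be illustrated with a minimal plain-Python sketch. It sweeps a decision threshold over a classifier's scores to trace the ROC curve, then applies the trapezoidal rule to get the area under the curve (AUC). The labels and scores are hypothetical, for example outputs from a sentiment classifier. In practice, scikit-learn's `sklearn.metrics.roc_curve` and `roc_auc_score` do the same job.

```python
def roc_curve_points(y_true, y_score):
    """Return (false positive rate, true positive rate) points for every threshold.

    Assumes binary labels (1 = positive, 0 = negative) with both classes present.
    """
    pos = sum(y_true)
    neg = len(y_true) - pos
    # Rank examples by predicted score, highest first.
    ranked = sorted(zip(y_score, y_true), key=lambda pair: pair[0], reverse=True)
    points = [(0.0, 0.0)]
    tp = fp = 0
    i = 0
    while i < len(ranked):
        score = ranked[i][0]
        # Consume every example tied at this score before emitting a point.
        while i < len(ranked) and ranked[i][0] == score:
            if ranked[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / neg, tp / pos))
    return points

def auc(points):
    """Area under the ROC curve using the trapezoidal rule."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area

# Hypothetical labels (1 = positive sentiment) and model scores.
y_true = [1, 0, 1, 1, 0, 0]
y_score = [0.9, 0.8, 0.7, 0.4, 0.3, 0.1]
points = roc_curve_points(y_true, y_score)
print(points)
print(auc(points))
```

Here 7 of the 9 positive/negative pairs are ranked correctly, so the AUC is 7/9. An AUC of 0.5 corresponds to random ranking and 1.0 to every positive scoring above every negative.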

