But, because it can come in many different forms and there's so much of it, data is a messy mass to handle.

Modern data analytics requires a high level of automation in order to test validity, monitor the performance and behaviour of data pipelines, track data lineage, detect anomalies that might indicate a quality issue and much more besides.

DataOps is a methodology created to tackle the problem of repeated, mundane data processing tasks, making analytics easier and faster while enabling transparency and quality detection within data pipelines. Medium describes the aim of DataOps as reducing the end-to-end cycle time of data analytics, from the origin of ideas to the literal creation of charts, graphs and models that create value.

So, what are the DataOps principles that can boost your business value?

What's the DataOps Manifesto?

DataOps relies on much more than just automating parts of the data lifecycle and establishing quality procedures. It's as much about the innovative people using it as it is a tool in and of itself. That's where the DataOps Manifesto comes in. It was devised to help businesses facilitate and improve their data analytics processes. The Manifesto lists 18 core components, which can be summarised as the following:

- Enabling end-to-end orchestration and monitoring

- Focusing on quality

- Introducing an Agile way of working

- Building a long-lasting data ecosystem that will continuously deliver and improve at a sustainable pace (based on customer feedback and team input)

- Developing efficient communication between (and throughout) different teams and their customers

- Viewing analytics delivery as lean manufacturing which strives for improvements and facilitates the reuse of components and approaches

- Choosing simplicity over complexity

DataOps enables businesses to transform their data management and data analytics processes. By implementing intelligent DataOps strategies, it's possible to deploy massive disposable data environments where it would have been impossible otherwise. Additionally, following this methodology can have huge benefits for companies in terms of regulatory compliance. For example, DataOps combined with migration to a hybrid cloud allows companies to safeguard the compliance of protected and sensitive data, while taking advantage of cloud cost savings for non-sensitive data.

DataOps and the data pipeline

It is common to imagine a data pipeline as a conveyor belt-style manufacturing process, where the raw data enters one end of the pipeline and is processed into usable forms by the time it reaches the other end. Much like a traditional manufacturing line, there are stringent quality and efficiency management processes in place along the way. In fact, because this analogy is so apt, the data pipeline is often referred to as a data factory.

This refining process delivers quality data in the form of models and reports, which data analysts can use for the myriad reasons mentioned earlier, and far more beyond those. Without the data pipeline, the raw information remains illegible.
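To make the conveyor belt picture a little more concrete, here is a minimal sketch in Python. It is only an illustration: the `Pipeline` class, the stage names and the sample records are all hypothetical, and a real data factory would normally rely on a dedicated orchestration tool. The idea it shows is simply that raw records pass through ordered stages, and that each stage leaves a log entry behind so the run can be monitored.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Tuple

Record = Dict[str, Any]
Stage = Callable[[List[Record]], List[Record]]


@dataclass
class Pipeline:
    """Runs stages in order and keeps a simple run log (hypothetical design)."""
    stages: List[Tuple[str, Stage]]
    log: List[str] = field(default_factory=list)

    def run(self, records: List[Record]) -> List[Record]:
        for name, stage in self.stages:
            before = len(records)
            records = stage(records)
            # Note what each stage did so the run can be monitored and audited.
            self.log.append(f"{name}: {before} -> {len(records)} records")
        return records


def drop_incomplete(records: List[Record]) -> List[Record]:
    """Remove records that are missing an amount."""
    return [r for r in records if r.get("amount") is not None]


def to_pounds(records: List[Record]) -> List[Record]:
    """Convert amounts from pence to pounds."""
    return [{**r, "amount": r["amount"] / 100} for r in records]


# Made-up raw data entering one end of the pipeline.
raw = [
    {"id": 1, "amount": 1250},
    {"id": 2, "amount": None},
    {"id": 3, "amount": 400},
]

pipeline = Pipeline(stages=[("drop_incomplete", drop_incomplete),
                            ("to_pounds", to_pounds)])
clean = pipeline.run(raw)
```

After the run, `clean` holds the usable records and `pipeline.log` holds one line per stage, which is a very small stand-in for the monitoring that DataOps tooling provides.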

The key benefits of DataOps

The benefits of DataOps are many. For a start, it creates a much faster end-to-end analytics process. With the help of Agile development methodologies, the release cycle can occur in a matter of seconds instead of days or weeks. When used within a DataOps environment, Agile methods allow businesses to flex to changing customer requirements (particularly vital nowadays) and deliver more value, quicker.

A few other important benefits are:

- Allows businesses to focus on important issues. With improved data accuracy and less time spent on mundane tasks, analytics teams can focus on more strategic issues.

- Enables instant error detection. Tests can be executed to catch data that's been processed incorrectly, before it's passed downstream.

- Ensures high-quality data. Creating automated, repeatable processes with automatic checks and controlled rollouts reduces the chances that human error will end up being distributed.

- Creates a transparent data model. Tracking data lineage, establishing data ownership and sharing the same set of rules for processing different data sources creates a semantic data model that's easy for all users to understand, so data can be used to its full potential.
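The error-detection benefit above can be sketched in a few lines of Python. This is an illustrative example under stated assumptions rather than a reference implementation: the `CHECKS` rules, the `validate` function and the sample order records are all hypothetical. It shows one simple way to stop incorrectly processed records before they are passed downstream, while keeping a note of which rule each rejected record broke.

```python
from typing import Any, Callable, Dict, List, Tuple

Record = Dict[str, Any]
Check = Tuple[str, Callable[[Record], bool]]

# Hypothetical rules for an orders feed; real rules depend on the data.
CHECKS: List[Check] = [
    ("has customer id", lambda r: bool(r.get("customer_id"))),
    ("quantity is positive",
     lambda r: isinstance(r.get("quantity"), int) and r["quantity"] > 0),
]


def validate(
    records: List[Record],
) -> Tuple[List[Record], List[Tuple[Record, List[str]]]]:
    """Split records into those passing every check and those failing any."""
    passed: List[Record] = []
    rejected: List[Tuple[Record, List[str]]] = []
    for record in records:
        failures = [name for name, check in CHECKS if not check(record)]
        if failures:
            # Keep the reasons so the owning team can trace and fix the issue.
            rejected.append((record, failures))
        else:
            passed.append(record)
    return passed, rejected


# Made-up batch: one good record and two that break a rule each.
batch = [
    {"customer_id": "C1", "quantity": 2},
    {"customer_id": "", "quantity": 5},
    {"customer_id": "C3", "quantity": -1},
]
passed, rejected = validate(batch)
```

Only the records in `passed` would move on to the next stage; those in `rejected` stay behind with the names of the failed checks, so the problem is visible at the point where it occurred.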

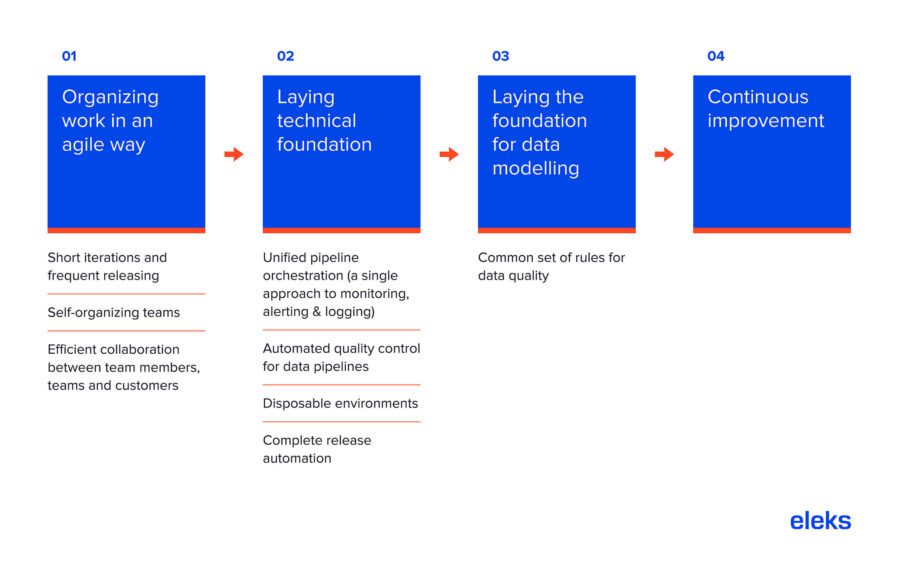

So how is DataOps implemented within an organisation? There are four key stages within the DataOps roadmap, illustrated in simple form below.

Summary

The potential stored within the vast sea of data that companies now have access to is limitless. But without a proper framework for processing all this information, its potential can't be fully exploited. We now have the tools and the human intelligence to be able to harvest our data in a smarter way. DataOps is a pivotal piece of that puzzle and, by employing this methodology alongside Agile practices, it becomes the key to understanding our customers, our own inefficiencies and opportunities and, ultimately, building better businesses.

Have questions on how to apply DataOps principles? Get in touch with us any time!

Originally published at ELEKS blog.