A background knowledge attack uses the quasi-identifier attributes of a dataset to narrow down the possible values of sensitive information. A well-known example is the re-identification of Massachusetts governor William Weld's personal health information from an anonymized data set. To counter these types of attacks, various anonymization methods have been developed, such as k-anonymity, l-diversity, and t-closeness.
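To make the role of quasi-identifiers concrete, here is a minimal sketch in Python. It assumes a hypothetical toy table in which `zip`, `age` and `sex` are already-generalized quasi-identifiers and `diagnosis` is the sensitive attribute. The helper names are illustrative only:

- `is_k_anonymous` checks the k-anonymity condition, which requires every combination of quasi-identifier values to occur at least k times.
- `candidate_values` shows what an attacker who already knows a person's quasi-identifiers can narrow that person's sensitive value down to.

```python
from collections import Counter

# Hypothetical toy table: zip, age and sex are generalized quasi-identifiers,
# diagnosis is the sensitive attribute.
records = [
    {"zip": "0213*", "age": "40-49", "sex": "M", "diagnosis": "flu"},
    {"zip": "0213*", "age": "40-49", "sex": "M", "diagnosis": "cancer"},
    {"zip": "0214*", "age": "50-59", "sex": "F", "diagnosis": "flu"},
    {"zip": "0214*", "age": "50-59", "sex": "F", "diagnosis": "asthma"},
    {"zip": "0214*", "age": "50-59", "sex": "F", "diagnosis": "flu"},
]
QUASI_IDENTIFIERS = ("zip", "age", "sex")


def qi_key(row, qi):
    """Project a row onto its quasi-identifier values."""
    return tuple(row[attr] for attr in qi)


def is_k_anonymous(rows, qi, k):
    """True if every combination of quasi-identifier values occurs at least k times."""
    group_sizes = Counter(qi_key(row, qi) for row in rows)
    return all(size >= k for size in group_sizes.values())


def candidate_values(rows, qi, known, sensitive="diagnosis"):
    """Sensitive values an attacker who knows a person's quasi-identifiers cannot rule out."""
    return {row[sensitive] for row in rows if qi_key(row, qi) == known}


print(is_k_anonymous(records, QUASI_IDENTIFIERS, 2))  # True
print(is_k_anonymous(records, QUASI_IDENTIFIERS, 3))  # False
print(candidate_values(records, QUASI_IDENTIFIERS, ("0213*", "40-49", "M")))
```

The table is 2-anonymous, but an attacker who knows that a 40-49-year-old man from ZIP 0213* is in it still narrows his diagnosis to two values. If both rows in that group shared one diagnosis, it would be revealed outright. That gap is what l-diversity targets.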

Although anonymization methods are applied to data sets to protect sensitive data, an attacker can still gain access to that data in various ways. Moreover, anonymization is not applicable in some cases. For example, consider two or more hospitals that want to analyze patient data through a collaborative process that requires using each other's databases. In such cases, a secure training method is needed that can run jointly on the union of the private databases without revealing or pooling their sensitive data.

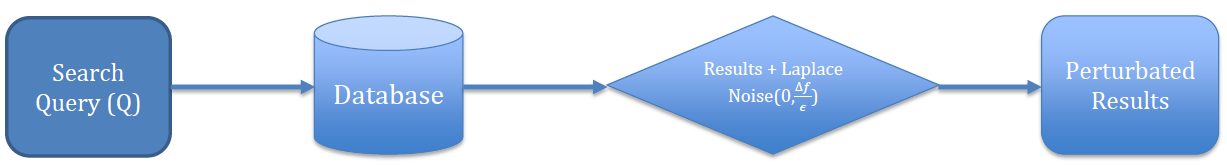

Differential privacy is a mathematical definition of privacy introduced by Cynthia Dwork at Microsoft Research in 2006. It aims to minimize the exposure of personally identifiable information while maximizing the accuracy of queries on a statistical database. A statistical database is used for statistical analysis with OLAP technologies, and the allowed queries are aggregates such as SUM, COUNT, AVG, and STDDEV.

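Steps:

- A trusted server computes a summary statistic (an aggregate query) $Q$ over the database $D$.

- The trusted server creates two neighboring datasets $D_1$ and $D_2$ from $D$ that differ in a single row.

- Then the (randomized) query $Q$ is $\varepsilon$-differentially private if, for every such pair $D_1, D_2$ and every set of possible outputs $S$:

$$
\Pr[Q(D_1) \in S] \le e^{\varepsilon} \, \Pr[Q(D_2) \in S]
$$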
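The first two steps can be sketched in Python on a hypothetical three-row table. The sketch assumes that "differ in a single row" means $D_2$ is $D_1$ with one row removed; some definitions instead change one row. Running the exact, noise-free COUNT on both datasets shows why noise is needed. The two answers differ by exactly the removed person's value. Because the query is deterministic, the inequality above fails for $S = \{2\}$ under every finite $\varepsilon$: the probability is 1 on one side and 0 on the other.

```python
# Hypothetical toy database D: one row per patient, 1 = has the condition.
D = [
    {"name": "alice", "has_condition": 1},
    {"name": "bob", "has_condition": 0},
    {"name": "carol", "has_condition": 1},
]


def count_query(rows):
    """Q without noise: an exact COUNT of patients who have the condition."""
    return sum(row["has_condition"] for row in rows)


# Neighboring datasets: D1 is the full database, D2 drops alice's row.
D1 = D
D2 = [row for row in D if row["name"] != "alice"]

q1, q2 = count_query(D1), count_query(D2)
print(q1, q2)   # 2 1
print(q1 - q2)  # 1 -> exactly alice's has_condition value
```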

Differential privacy achieves this by adding controlled random noise calibrated to the sensitivity of the query. A popular differential privacy mechanism is the Laplace mechanism, which adds random noise drawn from the Laplace distribution. The sensitivity of the query is the largest change in its true (noise-free) answer between any two neighboring datasets:

$$
\Delta Q = \max_{D_1, D_2} \lVert Q(D_1) - Q(D_2) \rVert_1
$$
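A minimal Python sketch of the Laplace mechanism follows, assuming NumPy is available. For a COUNT query the sensitivity is $\Delta Q = 1$, since adding or removing one row changes the count by at most 1. The standard noise scale is $b = \Delta Q / \varepsilon$. The helper names `laplace_mechanism` and `laplace_pdf` are hypothetical. The last lines check the definition numerically for two neighboring COUNT answers. The ratio of the two output densities stays at or below $e^{\varepsilon}$ at every point, which bounds the ratio of probabilities for every output set $S$ as well.

```python
import numpy as np


def laplace_mechanism(true_answer, sensitivity, epsilon, rng):
    """Release true_answer plus Laplace noise with scale b = sensitivity / epsilon."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    scale = sensitivity / epsilon
    return true_answer + rng.laplace(loc=0.0, scale=scale)


def laplace_pdf(x, loc, scale):
    """Density of the Laplace distribution centred at loc."""
    return np.exp(-np.abs(x - loc) / scale) / (2 * scale)


epsilon = 0.5
sensitivity = 1.0  # COUNT changes by at most 1 when one row is added or removed
q1, q2 = 2, 1      # exact COUNT answers on two neighboring datasets

rng = np.random.default_rng(seed=0)
noisy = laplace_mechanism(q1, sensitivity, epsilon, rng)

# Pointwise check of the epsilon-DP inequality on the two output densities.
scale = sensitivity / epsilon
xs = np.linspace(-20, 20, 4001)
ratio = laplace_pdf(xs, q1, scale) / laplace_pdf(xs, q2, scale)
print(bool(ratio.max() <= np.exp(epsilon) * (1 + 1e-9)))  # True
```

A smaller $\varepsilon$ gives a larger noise scale and therefore noisier, more private answers.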

Figure 1: Overall illustration of differential privacy
