This article was written by Ray.

Read an article in Quanta Magazine (New theory cracks open the black box of deep learning) about a talk (see 18: Information Theory of Deep Learning, YouTube video) done a month or so ago given by Professor Naftali (Tali) Tishby on his theory that all deep learning convolutional neural networks (CNN) exhibit an “information bottleneck” during deep learning. This information bottleneck results in compressing the information present, in for example, an image and only working with the relevant information.

The Professor and his researchers used a simple AI problem (like recognizing a dog) and trained a deep learning CNN to perform this task. At the start of the training process the CNN nodes at the top were all connected to the next layer, and those were all connected to the next layer and so on until you got to the output layer.

Essentially, the researchers found that during the deep learning process, the CNN went from recognizing all features of an image to over time just recognizing (processing?) only the relevant features of an image when successfully trained.

In this article, the following topics are discussed:

- Limits of deep learning CNNs

- What happens during deep learning

- Statistics of deep learning process

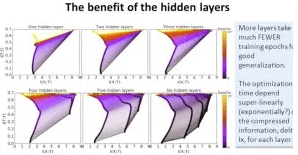

- Do layer counts and sample size matter?

To read the whole article, with illustrations, click here.