Last week, Meta announced Cicero, a potentially game-changing AI system that points to a possible new future for AI.

Cicero is an AI that can play the game of Diplomacy at a level comparable to human players.

The game of Diplomacy presents a different set of challenges than Go.

Go rewards a balance of exploration and exploitation, which makes it well suited to reinforcement learning.

To master Go, AlphaGo used reinforcement learning and learnt by extensively playing against itself until it could anticipate its own moves and how those moves would affect the game’s outcome.
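To make the idea of learning through self-play concrete, here is a minimal sketch on a toy game rather than Go. It is not AlphaGo's method (which combined deep neural networks with tree search); it assumes a simple Nim variant where players alternately take 1 to 3 stones and whoever takes the last stone wins. The function names, the value table, and the hyperparameters are all illustrative choices.

```python
import random


def legal_moves(stones):
    """Moves available in the toy Nim game: take 1, 2, or 3 stones."""
    return [m for m in (1, 2, 3) if m <= stones]


def train_self_play(max_stones=10, episodes=5000, alpha=0.5, epsilon=0.3, seed=0):
    """Learn move values by letting one value table play both sides."""
    rng = random.Random(seed)
    # q[(stones, move)] estimates the outcome for the player about to move:
    # +1 means a forced win, -1 a forced loss.
    q = {(s, m): 0.0 for s in range(1, max_stones + 1) for m in legal_moves(s)}

    def best_value(stones):
        return max(q[(stones, m)] for m in legal_moves(stones))

    for _ in range(episodes):
        stones = rng.randint(1, max_stones)
        while stones > 0:
            moves = legal_moves(stones)
            if rng.random() < epsilon:
                move = rng.choice(moves)  # explore: try a random move
            else:
                move = max(moves, key=lambda m: q[(stones, m)])  # exploit
            remaining = stones - move
            # Taking the last stone wins. Otherwise the opponent moves next,
            # so their best outcome is our worst (hence the minus sign).
            target = 1.0 if remaining == 0 else -best_value(remaining)
            q[(stones, move)] += alpha * (target - q[(stones, move)])
            stones = remaining
    return q


def greedy_move(q, stones):
    """Best known move for the given pile size."""
    return max(legal_moves(stones), key=lambda m: q[(stones, m)])


q = train_self_play()
print({s: greedy_move(q, s) for s in (5, 6, 7)})
```

After training, the table should favour moves that leave the opponent with a multiple of four stones, which is the known winning strategy for this toy game. The agent is never told that rule; it emerges purely from playing against itself.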

Diplomacy, on the other hand, requires players to communicate extensively, negotiate, form alliances, and deceive other players.

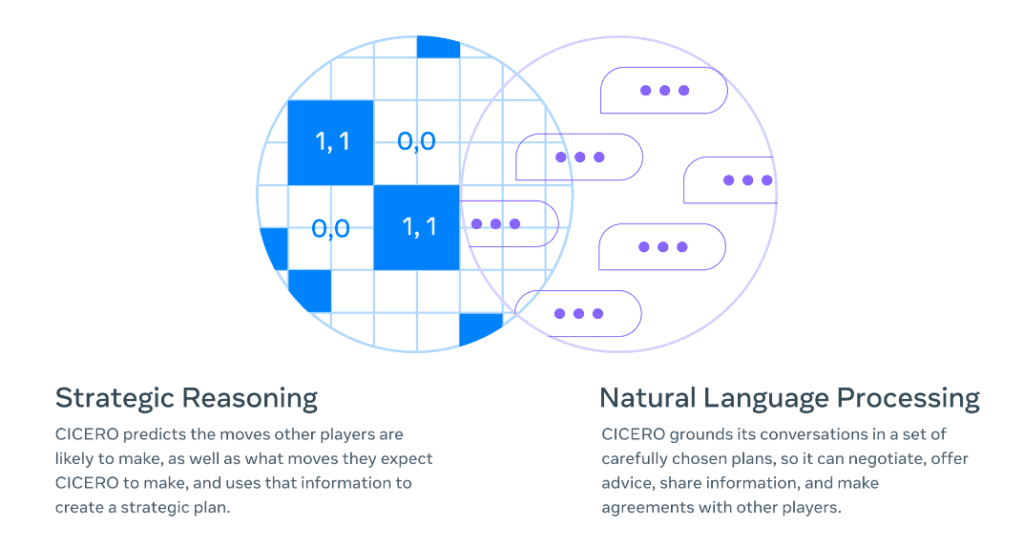

To succeed at Diplomacy, Cicero needs to integrate both language and gameplay.

Cicero's code and control flow are complex because every decision must account for the current state of play, the history of the game, and what other players say and do. On top of that, the actions of other players depend on what they think you will do and, conversely, your actions depend on what you think others will do.
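The mutual dependence described above can be illustrated with a small sketch. This is not Cicero's algorithm; it assumes a hypothetical two-player, two-action game with made-up payoffs, where each player repeatedly picks the best response to what it expects the other to do until neither wants to change.

```python
ACTIONS = ("support", "attack")

# Hypothetical payoffs: PAYOFF[(mine, theirs)] is my reward.
# The game is symmetric, so both players use the same table.
PAYOFF = {
    ("support", "support"): 3,
    ("support", "attack"): 0,
    ("attack", "support"): 2,
    ("attack", "attack"): 1,
}


def best_response(belief):
    """Pick the action with the highest expected payoff.

    belief maps each action to the probability that the other player takes it.
    """
    def expected(mine):
        return sum(p * PAYOFF[(mine, theirs)] for theirs, p in belief.items())

    return max(ACTIONS, key=expected)


def settle(my_belief_about_them, max_rounds=10):
    """Alternate best responses until both players' choices stop changing."""
    mine = best_response(my_belief_about_them)
    theirs = best_response({mine: 1.0})  # they anticipate my choice
    for _ in range(max_rounds):
        new_mine = best_response({theirs: 1.0})
        new_theirs = best_response({new_mine: 1.0})
        if (new_mine, new_theirs) == (mine, theirs):
            break
        mine, theirs = new_mine, new_theirs
    return mine, theirs


print(settle({"support": 0.8, "attack": 0.2}))
print(settle({"support": 0.3, "attack": 0.7}))
```

With these payoffs, a player who expects cooperation ends up in mutual support, while one who expects betrayal ends up in mutual attack. The same game produces different outcomes depending on what each side believes about the other, which is why conversation matters so much in Diplomacy.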

Cicero draws on a corpus of human games, a large language model, thousands of expert-generated annotations, and a large collection of synthetic data, some of which was handcrafted.

So, why does this appear any different from current AI?

According to Gary Marcus, a well-known proponent of symbolic/hybrid AI:

a) some aspects of Cicero use a neurosymbolic approach to AI, and

b) Cicero uses handcrafted modules created by experts.

This suggests that systems handling operations as complex as Cicero's may move toward symbolic or hybrid architectures in the future.

More analysis is needed to confirm this, but if true, it could point to a new direction in the evolution of AI.

Image source: Meta

References

https://garymarcus.substack.com/p/what-does-meta-ais-diplomacy-winning?utm_source=substack&utm_medium=email&utm_content=share