Data democracy has been a hot topic of late. But what does this really mean? Further, what do we actually need to DO to build a culture of data democracy?

This is a very important topic to me. Over the past few years, my team has been on a mission to build a simplified platform for self-serve data analytics: our field of dreams. Unexpectedly, the work is not strictly technical. It boils down to what I call the four cornerstones of data democracy, which span technical, cultural and organizational influences.

To understand the need for change, let’s take a moment to discuss the shifting business landscape and increased expectations for data intelligence. As always, to be successful at your job, you need to understand your business. However, due to the sheer volume at which businesses operate today, most people can no longer understand their performance by looking at individual records or point-to-point interactions. The reach of the average business is so large that a high-level view can only be computed with data. This is why data has become the new language of business management. Hence, to understand your business, you need to understand your data.

Now, let’s talk about business management expectations. At a fundamental level, you need to monitor the “What” questions, such as “What is the revenue trend?” or “What was the most popular product set purchased last month?” Answering the “What” questions is a relatively straightforward task. But to truly excel at your job, you need to dig into the “Why” behind the “What” questions. For example: “Why are quarter-over-quarter sales for product X declining?” Unfortunately, the “Why” questions are often much more difficult to tackle, and they typically require a data investigation of sorts.

By this point you may buy into the idea that we need to answer both the “What” and the “Why” questions. Now how do we achieve the goal without hiring a massive data staff or expecting all business employees to become data scientists? Before we dive too far into prescribing how to build a data democracy, let’s review one definition that resonates with me:

Data democratization is the ability for information in a digital format to be accessible to the average end user. The goal of data democratization is to allow non-specialists to be able to gather and analyze data without requiring outside help. – Margaret Rouse, TechTarget.com

This is a very compact statement, so let’s unpack it a little.

“…the ability for information … to be accessible to the average end user.”

One must-have is to make the data easily accessible to those who need it. Gone are the days when access should routinely require a long business justification and second-line manager approval. We need to lower the barriers to accessing standard, non-sensitive business data.

“… to allow non-specialists to be able to gather and analyze data without requiring outside help”

This statement is the real kicker and where I think most companies are getting lost. Yes, many companies are lowering the barrier to access, but what does this mean? Is the user given permission to access a set of pre-canned dashboards? Is the user given permission to access the raw data? How does a non-data expert gather and analyze the data in a self-serve manner? To answer this question, we need to explore a number of possible solutions.

THE DASHBOARD DILEMMA

Alright dashboard fans, you may be feeling like you have this in the bag! You’ve got dashboards covering most key performance metrics. And you’ve got a data team ready and willing to assist with anything outside the scope of your dashboards. First things first, great job! You are clearly making strides towards having data that is publicly available and easily consumable.

Dashboards are great at presenting answers to the “What” questions. They are efficient at giving everyone a sense of the current and historical state of affairs. They provide an excellent vehicle to succinctly inform an audience and they typically inspire action.

It’s at the action step where we start to stumble with the dashboard approach. To take action, you have to understand the reasons behind the numbers. As I alluded to previously, dashboards are great at displaying what happened, but why it happened is a whole different matter. Trust me, if you have the right folks looking at the “What’s” they will want to know the “Why”. Understanding the why is almost always a more complicated question than a set of pre-canned dashboards can answer. It typically involves a data deep dive that needs to be tackled from a variety of angles which have not been planned for.
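To make the gap between the “What” and the “Why” concrete, here is a minimal sketch in Python. The sales records, the dimension names and the `total_revenue` helper are all made up for illustration; they do not come from any particular dashboard or BI tool. The first call produces the kind of number a dashboard shows. The second call is the kind of unplanned slice an investigation needs.

```python
from collections import defaultdict

# Hypothetical sales records, invented purely for illustration.
sales = [
    {"quarter": "Q1", "region": "North", "revenue": 100},
    {"quarter": "Q1", "region": "South", "revenue": 100},
    {"quarter": "Q2", "region": "North", "revenue": 130},
    {"quarter": "Q2", "region": "South", "revenue": 60},
]


def total_revenue(records, *dimensions):
    """Sum revenue grouped by the given dimension names."""
    totals = defaultdict(int)
    for row in records:
        key = tuple(row[d] for d in dimensions)
        totals[key] += row["revenue"]
    return dict(totals)


# The "What": a dashboard-style view of revenue per quarter.
what = total_revenue(sales, "quarter")

# The start of the "Why": the same metric sliced by one more dimension.
why = total_revenue(sales, "quarter", "region")

print(what)
print(why)
```

In this toy data the quarterly total only dips from 200 to 190, yet the regional slice shows North growing while South drops sharply. A real investigation repeats this kind of slicing across many dimensions that nobody planned for in advance.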

A DASHBOARD ANALOGY

When describing the limitations of dashboard-only data access to folks, I typically use the following analogy.

Looking at a dashboard is like being in a helicopter and looking down at the ocean. When you look at it from far away, the color appears to be a uniform blue.

Let’s say your job is to monitor the health of a body of water called “Oceana”. You hire an aerial photographer to gather images and report image-based statistics about Oceana’s health. Every week the photographer flies over the ocean, takes aerial photos and reports the statistics gathered.

But what if one week, the color has become slightly lighter than desired and you need to start asking the “Why”. “Why did the color of Oceana change?” How would you answer this question? Who would you involve?

Now we are entering the realm of a data investigation. If you involved the aerial photographer, they would likely fly at a lower altitude and start gathering more detailed photos of Oceana. At that point you would likely notice that the water has not been a uniform blue color this whole time. In fact, Oceana has a variety of colors that change depending on which area you are looking at and at what scale. This level of detail is masked from above.

Now that we know that Oceana is not a uniform shade of blue, we need to explore the possible factors that would affect the color of a large body of water. I’m clearly not an expert in the field of marine biology, but a quick Wikipedia search revealed some common factors for water color:

- The weather for the day, since the surface of the water reflects the color of the sky.

- The depth of the area.

- The color of the ocean floor.

- The temperature and current.

- The marine life and flora in the area.

- External additives such as boats, garbage, spills, etc.

To investigate the impact of these possible factors, would you ask the aerial photographer? No, likely you would be best served to include a variety of marine experts who are adept at interpreting the values collected for weather, depth, temperature, currents etc.

To answer the “Why”, we now understand the need for two things.

1) A custom investigation that the pre-canned dashboards likely didn’t account for.

2) Participation by subject matter experts (SMEs) in the data investigation. Better still, the SMEs could explore the data in a self-serve manner.

To continue, let’s assume that we are ready for a custom investigation (#1) and the SMEs are on board and ready to start looking at the data (#2).

SUBJECT MATTER LED DATA INVESTIGATIONS

We have our SMEs ready to work on this investigation. What is the best way to enable them? From a high level, we have two options: facilitated or self-serve analysis.

Option 1: Facilitated Data Analysis.

Let’s start with the case of facilitated data analysis. This is the type of analysis that is still performed by the data analyst team, but the investigation is led by the SME. The flow of this project typically goes as follows:

- The SME has an idea and wants to see a particular view.

- The analyst creates the view.

- The SME either finds that view to be a dead end or wants to dig in further but needs a new view.

- The analyst creates the new view.

- The cycle repeats.

This process goes on and on in a time-consuming manner until one of the following happens: there is a good enough answer, the investigation loses steam, the analyst has to move on or the SME feels bad for continuing to ask for revisions.

The main issue here is that the SME is fully dependent on the data analyst to look into any aspect of the data. Answering the “Why” needs to be a real investigation with trial and error. The SME can’t be made to feel bad for going down a rabbit hole that ends up providing no answers.

Option 2: Self-Serve Data Analysis.

Let’s imagine a scenario where the non-data expert SME could dive into the data to their heart’s content. This means that they can truly follow the data breadcrumb trail to see where it leads them. If they could do this without wait time, or limitations, wouldn’t it be a beautiful thing?

I think we need to be realistic that this level of freedom is required for a true data investigation. When I investigate a complex problem in my knowledge space, I will often look at 50+ angles to find the possible “Why’s”. We need to give SMEs the same independence.

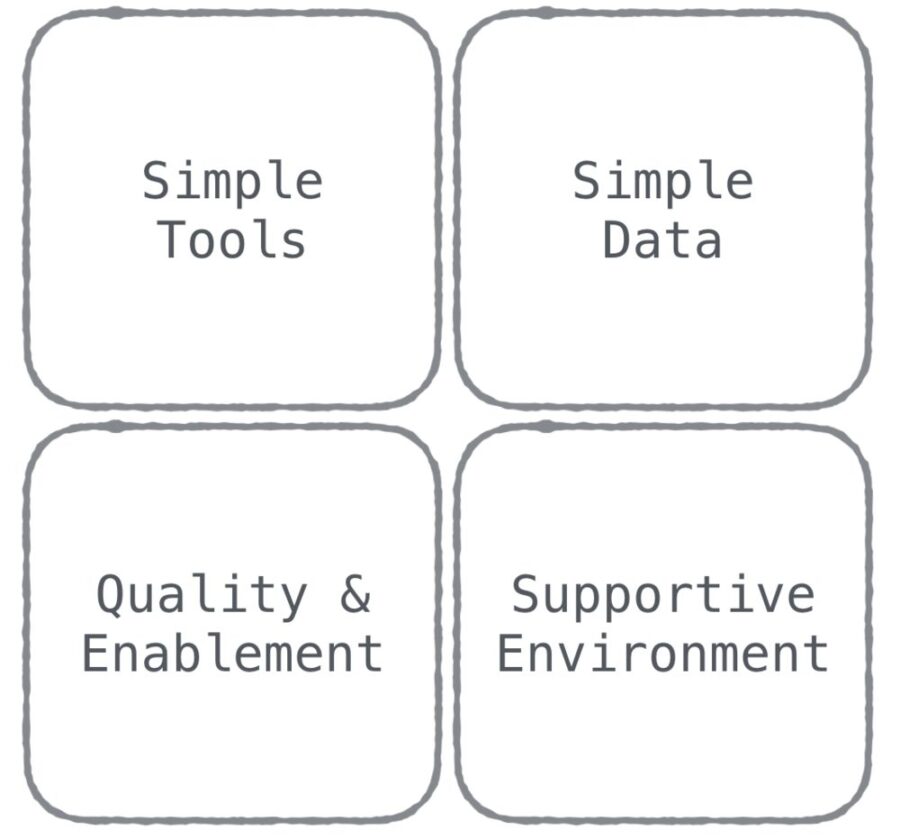

THE FOUR CORNERSTONES OF A DATA DEMOCRACY

Ideally, by now you have bought into the idea that to build a true data democracy we need to enable non-data expert SMEs to perform self-service analytics. To achieve this goal, we need to empower the SMEs with knowledge and support.

Specifically, the SMEs will require some level of knowledge on how to use a simple data tool, and they will require an understanding of the specific data set they are to work with. Further, we need to create a culture that enables and supports SMEs to try out these new skills. Doing this is easier said than done, but certainly not impossible. There are a variety of strategies and tools that can be used to help achieve these goals.

1) Simple tools: This used to be a much more difficult problem to solve. For a long time, business intelligence software vendors have claimed that their tools are usable by the regular business person. However, as much as I have tried, I’ve never seen business users proficiently using traditional BI tools at scale. Just when I started to lose hope in the self-serve analytic model, a new breed of data applications for analytics came onto the market. These data application tools have a stripped-down and consistent interface which allows users to onboard and scale their skills very easily. Users can start slicing and dicing their data in an incredibly intuitive fashion. I’ve reviewed a variety of tools and the top tool I would recommend right now is Amplitude. Walking the line between a full feature set and ease of use is incredibly difficult, but they make it look easy.

2) Simple data: This is often an overlooked problem. You can have strong data skills, but when you are given access to the actual data, you could be facing a warehouse with tens of thousands of tables. How can the user possibly know where to start? Enter analytic integration tooling. I like the tool Segment. Segment acts as a data collection, normalization and transportation tool. It has many benefits, including some outlined in my blog on event-driven data. For the purpose of this discussion, Segment does an amazing job at keeping a simple schema. In the Segment world, we really only track people, accounts and the things they do, such as page views, account creation and any other action you want to model. Keeping such a simple schema is not without its downsides, but it pays dividends in benefits including easier user onboarding and easier tool integration.
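To give a feel for how small such a schema can be, here is a rough sketch in Python. To be clear, this is not Segment’s API or its actual data model; the class names, fields and the `events_per_account` helper are hypothetical and exist only to illustrate the “people, accounts and the things they do” idea.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Account:
    account_id: str
    name: str


@dataclass
class Person:
    person_id: str
    account_id: str  # the account this person belongs to


@dataclass
class Event:
    person_id: str
    name: str  # e.g. "Page Viewed" or "Account Created"
    properties: dict = field(default_factory=dict)


def events_per_account(people, events):
    """Count events per account by joining each event to its person."""
    account_of = {p.person_id: p.account_id for p in people}
    return Counter(
        account_of[e.person_id] for e in events if e.person_id in account_of
    )


# Illustrative data only.
people = [Person("p1", "a1"), Person("p2", "a1"), Person("p3", "a2")]
events = [
    Event("p1", "Page Viewed", {"path": "/pricing"}),
    Event("p2", "Account Created"),
    Event("p3", "Page Viewed", {"path": "/"}),
]

counts = events_per_account(people, events)
print(counts)
```

With only three kinds of records, a new user has one obvious place to start: pick an event, then slice it by person or account. That is a very different experience from hunting through thousands of warehouse tables.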

3) Quality and enablement: Creating quality data systems that users can trust takes constant love and attention. The last thing you need is people running around trying to figure out which data assets can be trusted. Equally important, you need to put the effort into creating appropriate enablement avenues. Ideally, you need to include documentation, training and support interfaces. You don’t want users to feel stuck and alone when they hit a roadblock. If they do, your platform adoption will suffer.

4) Supportive environment: Creating a supportive environment is part of the cultural adaptation that needs to happen. I find that when doing analysis, people are very often worried they are going to do something wrong and look foolish in front of their peers. Let’s remember, our SMEs are pushing themselves out of their comfort zone. We need to create an environment that celebrates all forms of trying, which includes both success and failure. It’s important for everyone to be reminded that it’s ok to make incorrect assumptions or errors, and that this is part of the data investigation journey. All analysis leads to some level of insight. Even if that insight is that you had an incorrect assumption, you have learned something valuable about your business!

FINAL WORDS

Thank you for taking the time to read through this article on building a data democracy, a topic that is near and dear to my heart. As you may remember from the beginning of this article, my team has invested heavily in building our version of this field of dreams. At this point, you may be wondering how it’s going. All I can say is, if you build it, they will come.