During the last few years, the hottest word on everyone’s lips has been “productivity.” In the rapidly evolving Internet world, getting something done fast always gets an upvote. Despite needing to implement real business logic quickly and accurately, as an experienced PHP developer I still spent hundreds of hours on other tasks, such as setting up databases or caches, deploying projects, and monitoring online statistics. Many developers have struggled with these so-called miscellaneous tasks for years, wasting time instead of concentrating on the project logic.

My life changed when a friend mentioned Amazon Web Services (AWS) four years ago. It opened a new door, and led to a tremendous boost in productivity and project quality. For anyone who has not used AWS, please read this article, which I am sure you will find worth your time.

AWS Background

Amazon Web Services was officially launched in 2006. Many people will have heard of it, but probably don’t know what it can offer. So, the first question is: What is AWS?

Amazon Web Services (AWS), is a collection of cloud computing services, also called web services, that make up a cloud-computing platform offered by Amazon.com.

Wikipedia

From this definition, we know two things: AWS is based in the cloud, and AWS is a collection of services, instead of a single service. Since this does not tell you much, in my opinion, it is better for a beginner to understand AWS as:

- AWS is a collection of services in the cloud, as the definition says.

- AWS provides computing resources online quickly (for example, you need only about 10 minutes to set up a Linux server).

- AWS charges affordable fees.

- AWS provides easy-to-use services out of the box, which saves a lot of the time otherwise spent manually setting up databases, caches, storage, networks, and other infrastructure services.

- AWS is always available and is highly scalable.

There are, of course, many more advantages to using AWS, so let’s have a quick overview of how it can boost your productivity.

Create an AWS account for free

To begin using any service, you need to have an account. Creating an account for AWS should take you no more than five minutes. Make sure you have the following information at hand:

- An email address, which is used to receive a confirmation email.

- A credit card, which will not be billed, since the setup process is always free.

- A phone number, which will receive an automated call to verify your identity.

That’s it. Once you have this information ready, visit the AWS website and create an account by following the easy-to-follow instructions.

Note the following:

- Most AWS services offer an abundance of free tier resources on a monthly basis. That is, testing AWS typically costs you little or nothing.

- In my experience, the phone number and other personal information have not been misused.

Get your first EC2 server set up

One of the benefits of a cloud service is the ability to get shared resources on demand. Amazon provides four ways for users to access its services, listed from easiest to hardest:

- Management Console,

- Command-line tools (AWS CLI),

- SDK,

- RESTful API.

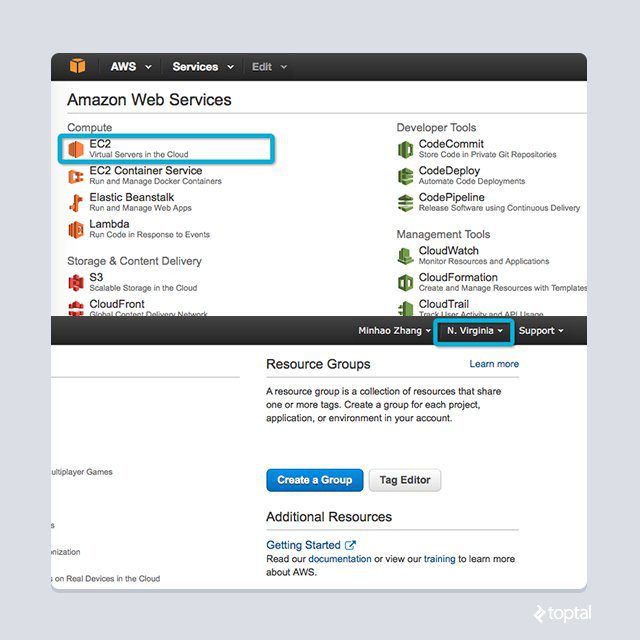

In this article, we will use the Management Console. After you log in to the console, you will see a screen like the one pictured.

There are two areas to note:

- In the top-right corner, you will find the region selector. AWS provides services in 11 regions across the world, and the number is still growing. Choose any region, or keep the default, US East (N. Virginia). Pricing may vary between regions, which you should bear in mind as your usage grows.

- Most of the screen is filled with a list of services. We will cover EC2 in this section, but take a quick look at what else AWS provides. Don’t worry if not all of them make sense yet: each service works on its own, although you will get the greatest productivity by combining them.

The most fundamental cloud resource is the virtual server. EC2, or Elastic Compute Cloud, is Amazon’s name for its virtual server service. Let’s see how easy it is to get a Linux server online.

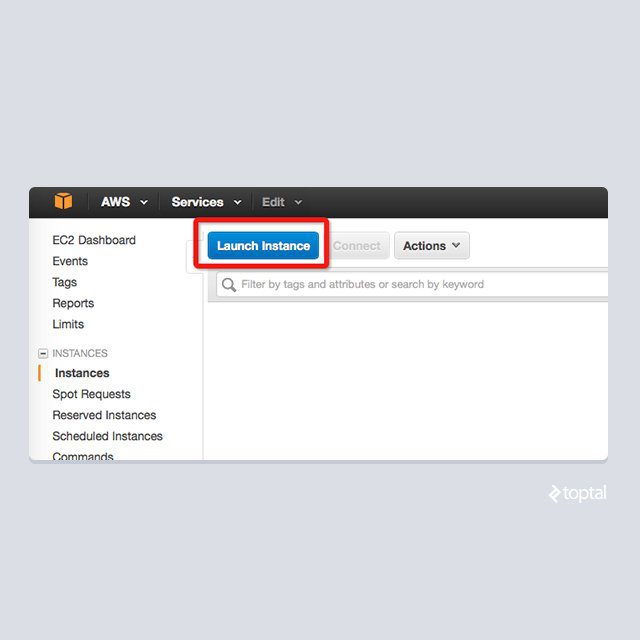

- In the EC2 management console, start the launch process as shown below:

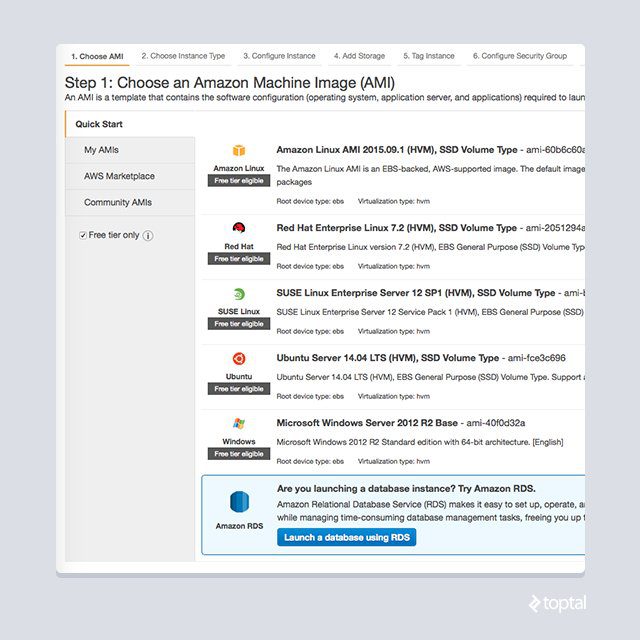

- Choose a machine image (AMI for short) to begin. This is the operating system your machine will run. Pick any system you prefer; I recommend starting with Amazon Linux, which uses yum to manage packages:

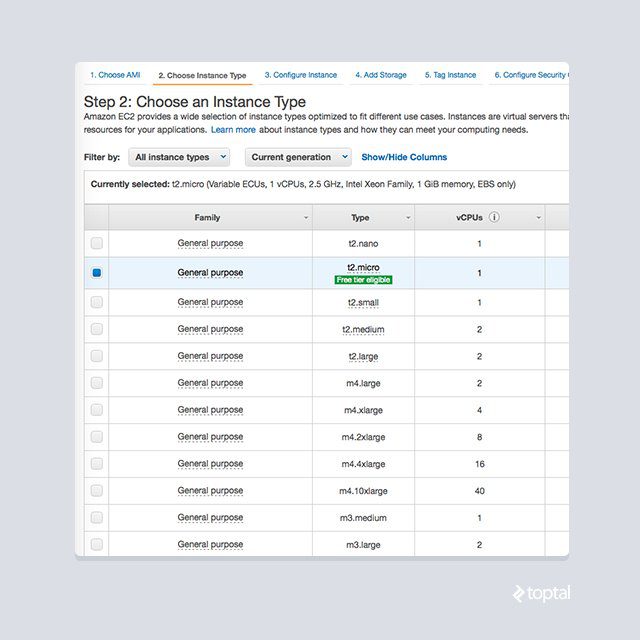

- Next, select an instance type. Think of this as the hardware specification of your virtual server. You can start with t2.micro, because you get 750 hours of free usage every month with this instance type for the first year. Note that this offer is valid only for the first year after you sign up, and only for the t2.micro instance type. It’s a good deal if you just want a taste of AWS.

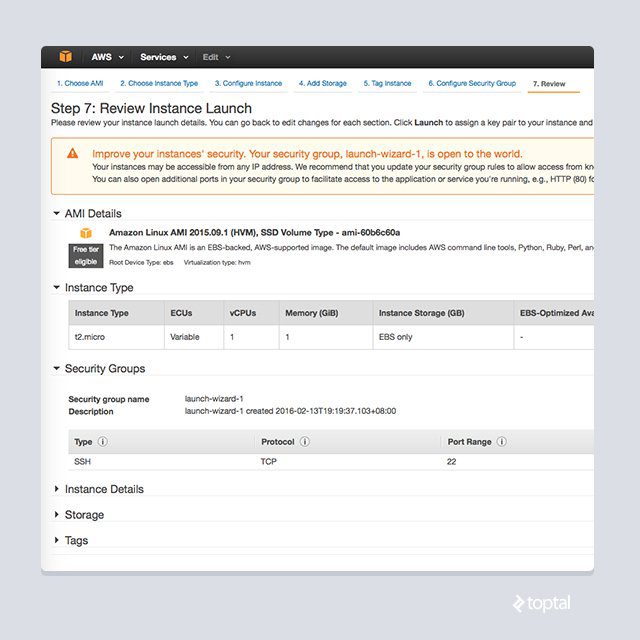

- You can configure the server in more detail, or go straight to launching it. The first time you use EC2, you will see a screen similar to the one below. The security warning shows how much emphasis Amazon places on security; however, we can ignore it until we reach the section on managed services.

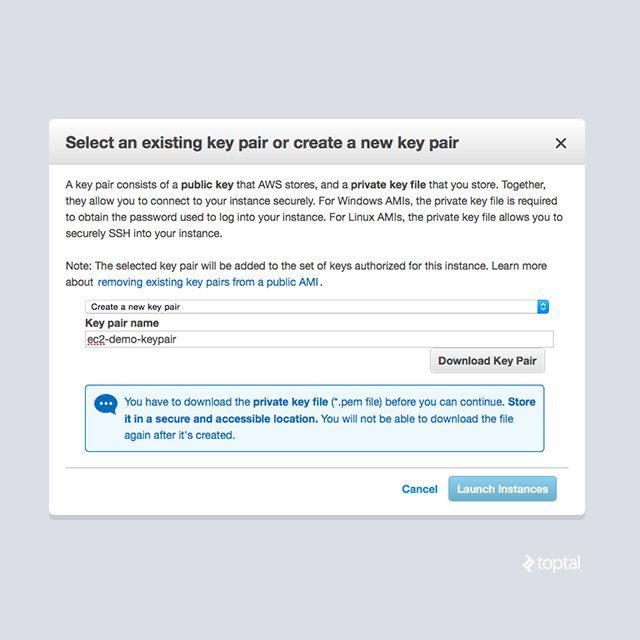

- Finally, to access a remote server, we need an identity. AWS will prompt us to choose an SSH key pair, as in the image below. Download the private key file and click the launch button. And yes, we are done: a new virtual server is being configured and will be ready in a few minutes.

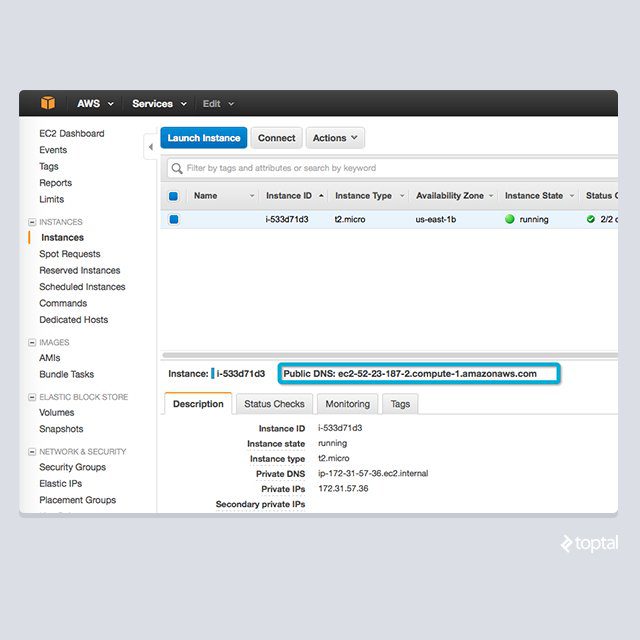

- Once the instance is ready, you can log in to the system as the default user, ec2-user, with your private key. ec2-user is the AWS default user and has sudo privileges. Although you cannot change the default username, you can create other users and assign them whatever privileges you need. The address of your server is shown in the instance details in the EC2 console.

The process above should take less than five minutes, and that is how easily we get a virtual server up and running. In the next section, we will learn how AWS helps us manage the instance we just created.
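The t2.micro free tier from the instance-type step can be checked with a little arithmetic. This Python sketch assumes two things: the 750 free hours are pooled across all t2.micro instances on the account, and the instances run around the clock. `instance_hours` and `billable_hours` are hypothetical helper names.

```python
# Hypothetical helper: how much of a month's t2.micro usage falls outside the
# free tier? Assumes the 750 free hours are shared by all t2.micro instances
# on the account and that the instances run 24/7.
FREE_TIER_HOURS = 750


def instance_hours(days: int) -> int:
    """Hours one instance accumulates running 24/7 for `days` days."""
    return days * 24


def billable_hours(instances: int, days: int = 31) -> int:
    """Instance-hours billed at the on-demand rate after the free tier."""
    used = instances * instance_hours(days)
    return max(0, used - FREE_TIER_HOURS)


print(instance_hours(31))   # 744: even the longest month fits in 750 hours
print(billable_hours(1))    # 0
print(billable_hours(2))    # 738: a second always-on instance is mostly billed
```

In other words, a single always-on instance stays free for the whole first year, but a second one will show up on the bill.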

On-demand billing

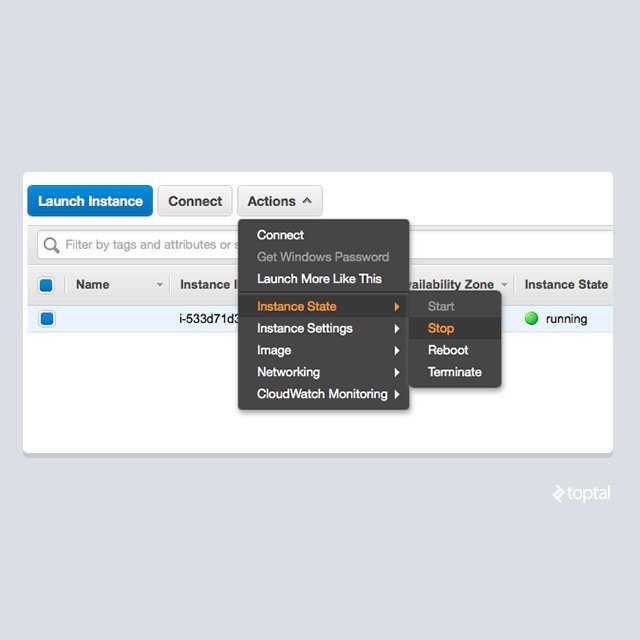

Most AWS resources are billed by the hour, which provides good flexibility. For example, there are two ways to take the EC2 instance we just created out of service: stop it or terminate it. Both actions stop the hourly instance charges, although the EBS storage attached to a stopped instance is still billed. The difference is that a stopped instance can be restarted later with all our work saved. Terminating an instance instead gives it back to AWS for recycling, and there is no way to recover its data. Terminating matters because, by default, AWS limits each account to 20 instances per region, and a stopped instance counts toward that limit until it is terminated.

We can stop an instance quickly from the EC2 console.

When you stop your EC2 instance, your bill stops growing, as well. It’s especially useful in the following scenarios:

- When you want to try something new, it is more cost-friendly to pay for only a couple of hours, and you probably won’t exceed the free tier for some services.

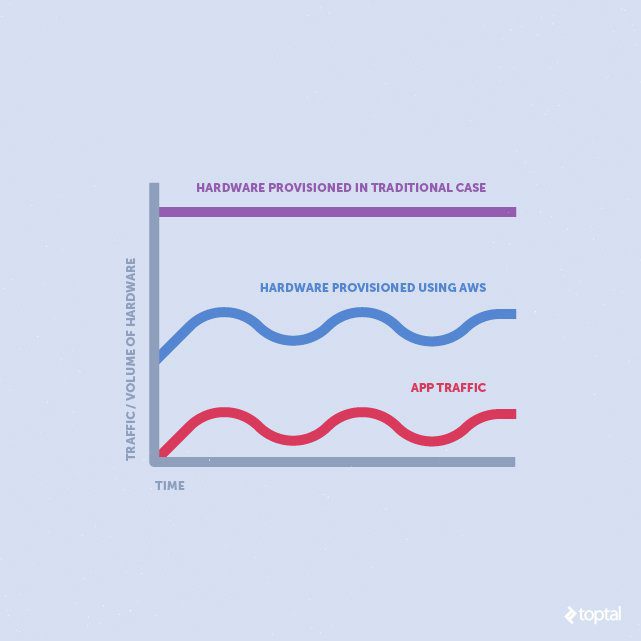

- When your computing needs in production fluctuate. For example, in the past, I had to reserve computing resources that were usually 30 to 50 percent above peak usage. With AWS, I can provision resources far more flexibly, adding capacity only when it is needed.
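The gap between fixed and flexible provisioning is easy to express in server-hours. This sketch makes two assumptions. The 24-hour demand profile is invented. The headroom is 40 percent, the middle of the 30 to 50 percent range mentioned above. The function names are hypothetical.

```python
# Hypothetical daily demand profile: servers needed in each of the 24 hours.
hourly_demand = [2] * 8 + [6] * 10 + [10] * 4 + [4] * 2


def fixed_server_hours(demand, headroom_pct=40):
    """Traditional provisioning: keep peak + headroom running all day.
    Integer arithmetic rounds the server count up without float error."""
    servers = (max(demand) * (100 + headroom_pct) + 99) // 100
    return servers * len(demand)


def elastic_server_hours(demand):
    """On-demand provisioning: pay only for the servers each hour needs."""
    return sum(demand)


fixed = fixed_server_hours(hourly_demand)      # 14 servers x 24 h = 336
elastic = elastic_server_hours(hourly_demand)  # 124
print(fixed, elastic, f"{elastic / fixed:.0%}")  # 336 124 37%
```

With this made-up profile, paying by the hour uses about 37 percent of the server-hours that peak-plus-headroom provisioning would lock in.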

Pricing information for AWS is available online. After making some calculations, you may ask: Is AWS actually cheaper? If you multiply the hourly rate by the number of hours in a month, it does not look competitive at all. The answer is yes and no.

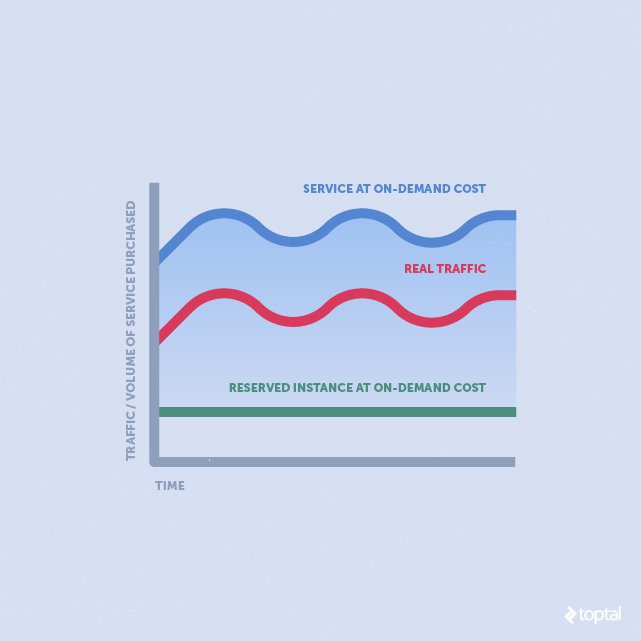

AWS is not cheaper if you simply calculate the hourly rate for an on-demand resource over a month. However, there are also reserved instance billing options, as illustrated in the pricing screenshot.

For our minimum (baseline) resource requirements, reserved instances can give a 30 to 70 percent discount, while the varying part of the load is billed as on-demand instances. In practice, this works out 30 to 40 percent cheaper with a one-year commitment, and even more with a three-year commitment. That is why my answer to the question above is “yes.” And AWS looks even cheaper once you include the security and monitoring benefits.
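Here is a rough sketch of that blended calculation. Reserved instances cover the always-on baseline, and on-demand instances cover the bursts. The $0.10 hourly rate, the 40 percent reserved discount, and the workload sizes are made-up inputs, not AWS prices. 730 is simply 365 x 24 / 12 hours per month.

```python
HOURS_PER_MONTH = 730  # 365 days x 24 hours / 12 months


def monthly_cost(baseline, burst_hours, on_demand_rate, reserved_discount):
    """Blend reserved instances (always-on baseline) with on-demand bursts.

    baseline          -- servers needed around the clock, bought as reserved
    burst_hours       -- extra server-hours per month, billed on demand
    on_demand_rate    -- hourly on-demand price (hypothetical)
    reserved_discount -- fraction saved on reserved hours, e.g. 0.4 for 40%
    """
    reserved_rate = on_demand_rate * (1 - reserved_discount)
    return baseline * HOURS_PER_MONTH * reserved_rate + burst_hours * on_demand_rate


all_on_demand = monthly_cost(4, 1000, 0.10, 0.0)   # everything billed on demand
blended = monthly_cost(4, 1000, 0.10, 0.40)        # baseline reserved, bursts on demand
print(round(all_on_demand, 2), round(blended, 2), f"{1 - blended / all_on_demand:.0%}")
# 392.0 275.2 30%
```

The saving grows with the share of the load that is truly constant. That is why the reserved portion should match only the minimum requirement.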

Managed services

One aim of AWS is to eliminate as much operational cost as possible. Traditionally, we needed a large team of system engineers to maintain the security and health of our infrastructure, either online or onsite. Experienced teams write and deploy their own automated tools to simplify the process, but in practice, managing those tools becomes a complicated project in its own right. AWS acts as a lifesaver in helping us manage our resources. Below are some of the most used AWS management services:

- AWS Security Group,

- IAM (Identity and Access Management),

- CloudWatch,

- And a list of automated deployment services, such as OpsWorks (which will not be covered in this article).

AWS Security Group

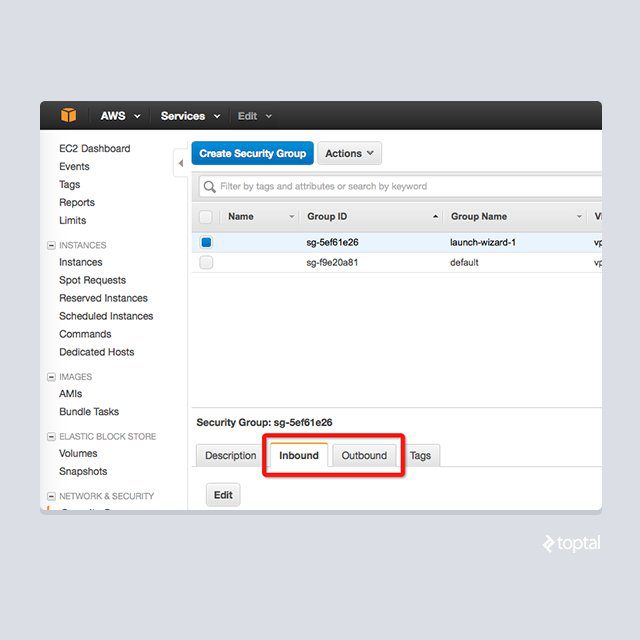

AWS handles access control in two separate layers. At the network level, it uses a mechanism known as “security groups.” Resources such as EC2 instances are placed in security groups, and a security group determines which traffic is allowed through. For our EC2 instance, AWS automatically created a security group for us.

We can decide what comes in and what goes out by configuring inbound and outbound rules. The EC2 service supports TCP, UDP, and ICMP rules. The security group acts like an external, hardware-level firewall, and we never need to think about patching it.

One more advantage of security groups is that they are reusable: one security group can be shared among many resources. We configure the security policy in a single place and apply it to any number of resources, instead of setting it up manually for each one. In practice, this greatly improves maintenance efficiency.
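At its core, a security group is a list of allow rules matched against protocol, port range, and source address. The Python sketch below models only that inbound matching. It ignores outbound rules and ICMP type/code matching. It also ignores the fact that real security groups are stateful, meaning return traffic for an allowed connection is let back in automatically. The `InboundRule` type and `is_allowed` function are hypothetical, and the addresses come from the reserved documentation ranges.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class InboundRule:
    protocol: str   # "tcp" or "udp" in this sketch
    from_port: int
    to_port: int
    cidr: str       # allowed source range, e.g. "0.0.0.0/0" for anywhere


def is_allowed(rules, protocol, port, source_ip):
    """Allow-list semantics: traffic passes if any rule matches; otherwise it is dropped."""
    addr = ipaddress.ip_address(source_ip)
    return any(
        rule.protocol == protocol
        and rule.from_port <= port <= rule.to_port
        and addr in ipaddress.ip_network(rule.cidr)
        for rule in rules
    )


# One rule set, reusable for every web server that should share the same policy.
web_sg = [
    InboundRule("tcp", 22, 22, "203.0.113.0/24"),  # SSH only from an office range
    InboundRule("tcp", 80, 80, "0.0.0.0/0"),       # HTTP from anywhere
    InboundRule("tcp", 443, 443, "0.0.0.0/0"),     # HTTPS from anywhere
]

print(is_allowed(web_sg, "tcp", 443, "198.51.100.7"))  # True
print(is_allowed(web_sg, "tcp", 22, "198.51.100.7"))   # False: SSH is not open to this address
print(is_allowed(web_sg, "tcp", 22, "203.0.113.5"))    # True
```

Because `web_sg` is just a value, the same rule list can be checked for any number of instances, which mirrors the reuse described above.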

Identity and Access Management

AWS provides a second layer of access control through IAM. This is an application-level security control that applies when you access the RESTful interfaces. Each REST request must be signed so that AWS knows who is accessing the service. AWS then checks the request against a preconfigured list of policies to determine whether the action should be allowed or denied.
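The policy check can be sketched as follows. This is a simplified model of IAM’s documented evaluation order, not the real engine. An explicit Deny always wins, an Allow grants access, and anything not explicitly allowed is implicitly denied. Conditions, principals, and case-insensitive action matching are left out. Wildcards are approximated with Python’s `fnmatch`, and the bucket name is hypothetical.

```python
from fnmatch import fnmatchcase


def matches(value, patterns):
    """True if `value` matches any pattern ('*' acts as a wildcard)."""
    return any(fnmatchcase(value, p) for p in patterns)


def evaluate(statements, action, resource):
    """Simplified IAM-style decision for one request."""
    allowed = False
    for st in statements:
        if matches(action, st["Action"]) and matches(resource, st["Resource"]):
            if st["Effect"] == "Deny":
                return "Deny"      # an explicit Deny overrides every Allow
            allowed = True
    return "Allow" if allowed else "Deny"  # no matching Allow means implicit deny


# Hypothetical policy for an application's bucket.
policy = [
    {"Effect": "Allow", "Action": ["s3:GetObject", "s3:PutObject"],
     "Resource": ["arn:aws:s3:::my-app-bucket/*"]},
    {"Effect": "Deny", "Action": ["s3:*"],
     "Resource": ["arn:aws:s3:::my-app-bucket/secrets/*"]},
]

print(evaluate(policy, "s3:GetObject", "arn:aws:s3:::my-app-bucket/uploads/a.png"))     # Allow
print(evaluate(policy, "s3:GetObject", "arn:aws:s3:::my-app-bucket/secrets/db.txt"))    # Deny (explicit)
print(evaluate(policy, "s3:DeleteObject", "arn:aws:s3:::my-app-bucket/uploads/a.png"))  # Deny (implicit)
```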

We will not cover IAM in detail in this article. However, note that AWS puts a lot of thought into security, so with properly configured policies you can be confident that unauthorized visitors cannot access your confidential data.

CloudWatch

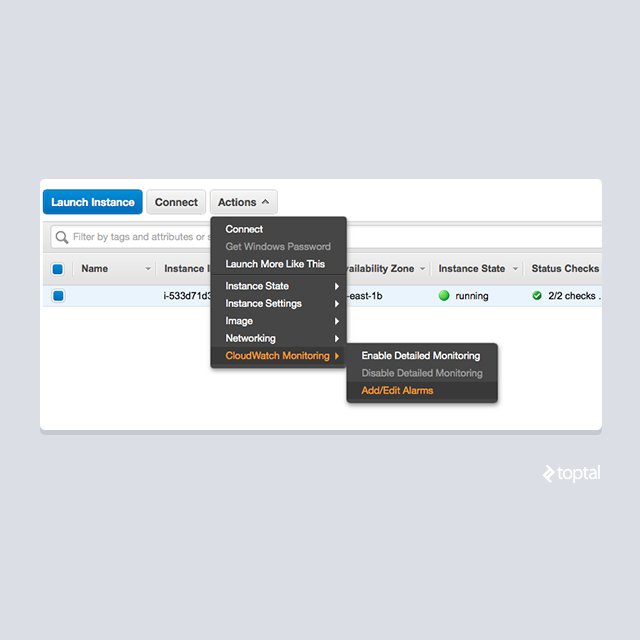

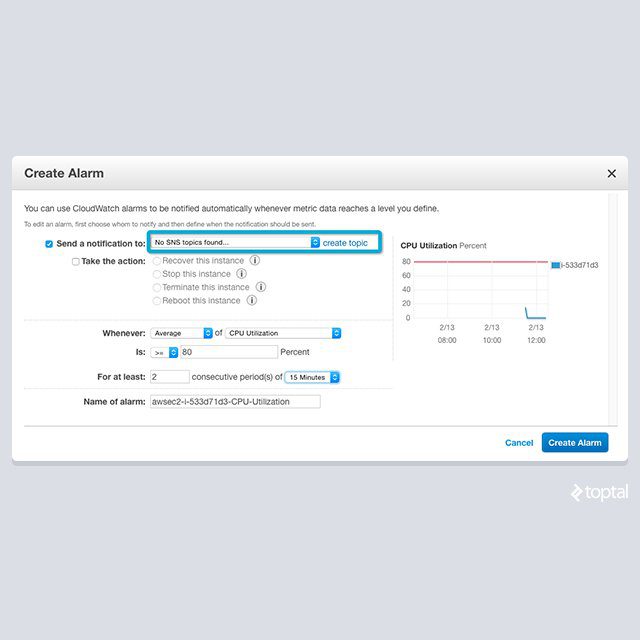

CloudWatch is an AWS service that collects and tracks all kinds of metrics from your AWS resources. What makes it powerful is its ability to react to certain events (alarms). With the help of CloudWatch, we can monitor the health of our newly created EC2 instance.

- We can quickly add alarms to our EC2 instance.

- Alarms can be created by specifying criteria for many different purposes, and can send notifications when they fire.

NOTE: SNS is a topic-based service provided by AWS for sending notifications. Notifications can be sent by email, SMS, iOS/Android push notification, and many other formats.

From monitoring to notifying, CloudWatch lets you automate your monitoring in a few clicks. There are tons of predefined metrics for various AWS services, and advanced users can even create custom metrics for their applications.
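To make the alarm idea concrete, the sketch below imitates how an alarm with the `GreaterThanThreshold` comparison moves between CloudWatch’s `OK`, `ALARM`, and `INSUFFICIENT_DATA` states. It is a simplification that assumes every one of the last N datapoints must breach. Real alarms also support an "M out of N" setting and configurable treatment of missing data. The CPU figures are invented.

```python
def alarm_state(datapoints, threshold, evaluation_periods):
    """Simplified alarm using GreaterThanThreshold.

    datapoints         -- one aggregated metric value per period, oldest first
    threshold          -- value that must be exceeded to count as breaching
    evaluation_periods -- how many of the most recent periods must all breach
    """
    if evaluation_periods < 1:
        raise ValueError("evaluation_periods must be at least 1")
    if len(datapoints) < evaluation_periods:
        return "INSUFFICIENT_DATA"
    recent = datapoints[-evaluation_periods:]
    return "ALARM" if all(v > threshold for v in recent) else "OK"


cpu = [35.0, 42.5, 88.0, 91.2, 95.4]  # hypothetical CPUUtilization averages, in percent
print(alarm_state(cpu, threshold=80, evaluation_periods=3))      # ALARM
print(alarm_state(cpu[:4], threshold=80, evaluation_periods=3))  # OK (42.5 is below 80)
print(alarm_state(cpu[:2], threshold=80, evaluation_periods=3))  # INSUFFICIENT_DATA
```

In the real service, the transition into `ALARM` is what triggers an alarm action, such as publishing to an SNS topic.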

Regarding cost, the CloudWatch free tier is usually plenty for start-up projects. Even as your business grows, the added cost is usually less than 1 percent of your overall service cost; check the detailed pricing for further information. Considering how easy it is to set up a monitoring system with CloudWatch, it is no surprise that it has become one of the most widely used monitoring tools.

As developers, we have likely experienced the following scenarios:

- Our application needs a database component, which means that we have to:
  - Get a server for the database.
  - Install the database software.
  - Configure monitoring for the server and the database.
  - Plan backup schemes.
  - Patch the software as needed.
  - Handle many other tasks not listed here.

- Our application needs distributed file storage, which means that we have to:
  - Find an existing open source (or commercial) solution for distributed file storage.
  - Prepare the needed servers.
  - Install and configure the chosen solution, which is usually not straightforward.
  - Configure monitoring for the servers and the storage cluster.
  - Handle many other tasks not listed here.

- Our application needs a cache.

- Our application needs a message queue.

- And there are many other problems to solve, each needing its own setup and configuration work up front and monitoring afterwards.

As you may have already guessed, this is another significant area where AWS helps. There are many application-level services available, so in many cases you won’t need to build these components yourself.

Let’s cover some of them to give you a quick picture.

RDS, database managed for you but not by you

Relational databases (RDBMS) have been widely adopted by a lot of applications. In the production environment, special attention is always needed when deploying applications using an RDBMS, beginning with how to set up and configure the database, followed by when and how backups are made and restored.

In our team, our database administrator (DBA) used to spend at least 30 percent of his time writing setup and maintenance scripts. After we introduced AWS RDS, our DBA gained more time for SQL performance tuning, which is a much better use of a DBA’s time.

So, what does RDS offer you? In short:

- RDS supports most of the popular database engines, including MySQL, SQL Server, and PostgreSQL.

- A database, either a single node or a cluster, can be created in a few clicks.

- RDS offers built-in support for shared database parameters through a feature called “Parameter Group.”

- RDS provides built-in access management through Security Groups, which are quite similar to the ones we covered for EC2.

- RDS can enable a Multi-AZ deployment in a single click. Monitoring, standby, and failover switching are then handled automatically.

- Maintenance and backup of RDS are automated.

To conclude, RDS saves a considerable amount of time when it comes to setting up and maintaining database services. In exchange, you will pay about 40 percent more than for the corresponding EC2 server. So, whether to opt for RDS or run the database on your own server is a business decision. However, RDS does let you invest more time in work related to your real business rather than in infrastructure stability and scalability. You will soon notice that this is the way of doing business that AWS advocates.

DynamoDB, a key-value store that scales to billions of records

NoSQL has become a favorite topic in recent years. Since many real-life projects do not need the features of a relational DBMS, a number of NoSQL databases have been introduced to the market, and Amazon has not fallen behind. DynamoDB (https://aws.amazon.com/dynamodb) is the key-value store announced by Amazon in 2012, and the core contributor to this service is Werner Vogels, CTO of Amazon, one of the world’s top experts on ultra-scalable systems.

It is no secret that Amazon handles massive traffic. DynamoDB is derived from Dynamo, which has been the internal storage engine for many of Amazon’s businesses, including its shopping cart service, which serves billions of requests every Christmas. DynamoDB has no practical limit on scaling up.

Further, compared with other NoSQL solutions such as Cassandra or MongoDB, DynamoDB has an enormous economic advantage. It is billed in units of reserved throughput (how many reads and writes per second are allowed), which can be increased or decreased in real time. Below is a cost comparison between DynamoDB and standalone NoSQL solutions:

| Business need | DynamoDB service | DynamoDB cost | Using another service | Cost when using another service |
|---|---|---|---|---|
| Small business (less than 1,000 DAU, 16 GB data) | 10 write units, 10 read units | $9.07/month • | t1.micro •• with 16 GB EBS ••• | $14.64/month |
| Medium business (less than 100k DAU, 160 GB data) | 100 write units, 100 read units | $101.62/month | m4.xlarge with 160 GB EBS | $190.95/month |
| Large business (up to 1M DAU, 1 TB data) | 1,000 write units, 1,000 read units | $852.58/month | Clustered c4.4xlarge • with 512 GB EBS • | $1,329.24/month |

• To be fair, prices are calculated using on-demand pricing in the US East region.

•• AWS EC2 instances are used to host the other NoSQL services.

••• EBS is the persistent storage service provided by AWS.

As the table shows, DynamoDB provides its service out of the box, usually at a lower price than building your own key-value store. This is because, unless you run your MongoDB or Cassandra cluster at full capacity, you are paying for capacity you never use.
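The relative savings implied by the table can be computed directly from its monthly figures. The sketch below does just that and adds no new data. `saving_pct` is a hypothetical helper name.

```python
def saving_pct(dynamodb_cost, self_hosted_cost):
    """Percentage saved by choosing DynamoDB over the self-hosted option."""
    return (self_hosted_cost - dynamodb_cost) / self_hosted_cost * 100


# Monthly figures taken from the comparison table (on-demand, US East).
rows = {"Small": (9.07, 14.64), "Medium": (101.62, 190.95), "Large": (852.58, 1329.24)}
for tier, (dynamo, self_hosted) in rows.items():
    print(f"{tier}: {saving_pct(dynamo, self_hosted):.1f}% cheaper with DynamoDB")
# Small: 38.0%, Medium: 46.8%, Large: 35.9%
```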

Amazon offers DynamoDB as a fully managed service. This means that you don’t have to worry about how to set up, scale, or monitor DynamoDB; AWS does it all. In fact, reading or writing a DynamoDB item takes roughly constant time, regardless of how much data the table holds. As a result, some applications have dropped their cache layers after switching to DynamoDB. Amazing, indeed.
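Reserved throughput is expressed in capacity units. Under DynamoDB’s provisioned-capacity rules, one write capacity unit covers one write per second of an item up to 1 KB. One read capacity unit covers one strongly consistent read per second, or two eventually consistent reads, of an item up to 4 KB. Larger items round up. The helpers below are a hypothetical sketch of that arithmetic, useful for estimating rows like the table’s “10 write units, 10 read units.”

```python
import math


def write_capacity_units(writes_per_sec, item_size_kb):
    """One WCU = one write per second of an item up to 1 KB (larger items round up)."""
    return writes_per_sec * math.ceil(item_size_kb)


def read_capacity_units(reads_per_sec, item_size_kb, strongly_consistent=True):
    """One RCU = one strongly consistent read per second of up to 4 KB,
    or two eventually consistent reads per second of up to 4 KB."""
    units = reads_per_sec * math.ceil(item_size_kb / 4)
    return units if strongly_consistent else math.ceil(units / 2)


print(write_capacity_units(10, 0.5))                          # 10
print(read_capacity_units(10, 6))                             # 20 (6 KB rounds up to 8 KB)
print(read_capacity_units(10, 6, strongly_consistent=False))  # 10
```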

SQS, distributed queue service

When working with large volumes of data, we often distribute calculations across many computing nodes. When doing business globally, we often need a pipeline to process data collected from nodes spread across a wide geographic area. To meet such requirements, AWS offers SQS, the Simple Queue Service. Like many well-known queue services, SQS offers a way to pass messages or jobs between different logical components in a persistent manner.

As its name indicates, SQS is a basic service, and it has been available since the early days of AWS. However, Amazon has steadily developed SQS, and it can be as simple or as powerful as you need, with many customizable parameters. Some of the advanced features of SQS are:

- Retaining messages for up to 14 days.

- A visibility timeout that prevents message loss when a consumer fails.

- Delivery delay per message.

- A redrive policy to handle failed messages (a so-called dead-letter queue).
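Delivery delay, the visibility timeout, and the redrive policy are easier to grasp in motion. The toy queue below is an in-memory Python imitation, not the SQS API. `ToyQueue`, its methods, and the explicit `now` clock are hypothetical, chosen to keep the behavior deterministic. A received message is hidden for the visibility timeout. If the consumer never deletes it, it reappears, and after `max_receive_count` receives it is moved to a dead-letter list.

```python
from dataclasses import dataclass


@dataclass(eq=False)  # compare messages by identity, not by field values
class Message:
    body: str
    visible_at: float = 0.0  # earliest time the message can be received
    receive_count: int = 0


class ToyQueue:
    """In-memory imitation of delay, visibility timeout, and redrive."""

    def __init__(self, visibility_timeout, max_receive_count):
        self.visibility_timeout = visibility_timeout
        self.max_receive_count = max_receive_count
        self.messages = []
        self.dead_letter = []  # stands in for the dead-letter queue

    def send(self, body, now=0.0, delay=0.0):
        self.messages.append(Message(body, visible_at=now + delay))

    def receive(self, now):
        for msg in list(self.messages):
            if msg.visible_at > now:
                continue  # delayed, or in flight with another consumer
            if msg.receive_count >= self.max_receive_count:
                # Redrive: too many failed attempts, move it aside.
                self.messages.remove(msg)
                self.dead_letter.append(msg)
                continue
            msg.receive_count += 1
            msg.visible_at = now + self.visibility_timeout  # hide while processing
            return msg
        return None

    def delete(self, msg):
        """A consumer deletes the message once it has processed it successfully."""
        self.messages.remove(msg)


q = ToyQueue(visibility_timeout=30, max_receive_count=2)
q.send("resize image 42")
first = q.receive(now=0)     # consumer A takes it, then crashes without deleting
print(q.receive(now=10))     # None: still invisible, nobody else processes it
retry = q.receive(now=31)    # reappears after the timeout: second attempt
print(retry is first, retry.receive_count)     # True 2
print(q.receive(now=62), len(q.dead_letter))   # None 1: moved to the dead-letter list
```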

Queue services shouldn’t be too complicated, so you may wonder why it is worth a whole section just to introduce SQS. Perhaps you have already guessed the reason: like other AWS services, SQS is fully managed, which means:

- The queue is highly scalable: whether you pass tens of messages or millions per second, SQS scales on the fly.

- The queue is persistent and distributed, which means messages will not be lost unless they expire.

- You do not need to set up a server to deploy your queue software. And of course, you do not need to set up complex monitoring for the service, either.

S3, a file storage, but not only a file storage

S3 stands for Simple Storage Service. It is like Dropbox, but for applications instead of end users. By definition, S3 is object-based storage with a simple web interface.
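“Object-based” means a bucket is a flat map from keys to objects; folders are only a naming convention. The sketch below imitates how a listing with a `Prefix` and a `Delimiter` groups keys into contents and common prefixes. Those parameter names come from S3’s ListObjectsV2 operation. The sketch runs locally on a hypothetical list of keys and does not call S3.

```python
def list_objects(keys, prefix="", delimiter=None):
    """Group flat S3-style keys the way a Prefix/Delimiter listing does.
    Returns (contents, common_prefixes)."""
    contents, common_prefixes = [], set()
    for key in sorted(keys):
        if not key.startswith(prefix):
            continue
        rest = key[len(prefix):]
        if delimiter and delimiter in rest:
            # Everything up to the next delimiter collapses into one "folder".
            common_prefixes.add(prefix + rest.split(delimiter, 1)[0] + delimiter)
        else:
            contents.append(key)
    return contents, sorted(common_prefixes)


# Hypothetical bucket contents: just keys, no real directories.
bucket = ["index.html", "img/logo.png", "img/icons/x.svg", "backups/2016-01-01.sql.gz"]
print(list_objects(bucket, delimiter="/"))                 # (['index.html'], ['backups/', 'img/'])
print(list_objects(bucket, prefix="img/", delimiter="/"))  # (['img/logo.png'], ['img/icons/'])
```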

S3 is simple to use, but it also comes with lots of advanced features. S3 has become an industry standard, especially for applications using other AWS services. This is mainly because S3 is so easy to integrate that it has become a popular external storage destination for most AWS services. Also, many services, such as DynamoDB, SQS, and so on, make heavy use of S3 internally.

Understanding S3 should amplify the benefits of using other AWS managed services. This is because most of the services store their backups on S3. Also, S3 is the common export/import destination for services including, but not limited to, DynamoDB, RDS, and Redshift.

Finally, like other AWS services, S3 is fully managed, so we can start using it without setting up any servers or failover mechanisms. Economically, S3 is also a pay-as-you-go service, so you can always try it out without much cost.

More advanced services and SDK

There are many other AWS services also worth noting. Due to limited space, we are just listing some interesting ones here:

- Redshift: A column-based database that can process trillions of rows of data very quickly. You must try it if you are responsible for the ETL of large amounts of data.

- Data Pipeline: Allows you to quickly transfer data between AWS services, and enables periodic processing of data in smaller shards.

- ElastiCache: A managed Memcached server; simple, but it does the job perfectly.

- Lambda: The next generation of cloud computing. Lambda runs uploaded code in an event-driven fashion, which opens a new door for designing distributed applications.

- Route53: A powerful DNS solution that supports weighted and geolocation-based responses on top of industry-standard DNS features.

- SNS: An easy-to-use notification service, designed around the publish/subscribe pattern.

- Many more.
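To illustrate Lambda’s event-driven model, here is a minimal Python handler of the kind Lambda invokes with an `event` dictionary and a `context` object. The sketch assumes the function is triggered by S3 object-created notifications, whose records carry the bucket name and a URL-encoded object key. The processing step is left as a placeholder, and the bucket name is hypothetical.

```python
from urllib.parse import unquote_plus


def lambda_handler(event, context):
    """Runs once per event; here, an S3 'object created' notification."""
    processed = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        key = unquote_plus(record["s3"]["object"]["key"])  # keys arrive URL-encoded
        # Real work (thumbnailing, indexing, ...) would go here.
        processed.append(f"{bucket}/{key}")
    return {"processed": processed}


# Local usage: call the handler with a trimmed-down sample event.
sample = {"Records": [{"s3": {"bucket": {"name": "my-app-bucket"},
                              "object": {"key": "uploads/cat+photo.jpg"}}}]}
print(lambda_handler(sample, None))  # {'processed': ['my-app-bucket/uploads/cat photo.jpg']}
```

Nothing runs until an event arrives, and you pay only for those invocations. That is what makes this model attractive for distributed designs.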

I think it is a good habit to check AWS whenever you are introducing a new component to your application. More often than not, AWS will pleasantly surprise you with a ready-made SaaS alternative.

Furthermore, to make the RESTful interfaces easier to use, Amazon provides SDKs for almost all popular programming languages, so you should have no problem finding one for your favorite.

Summary

We have covered some of the most widely used AWS services in this article. There are surely some areas where AWS can help your business. You might choose to migrate an existing service component to its AWS equivalent, such as moving a MySQL database to RDS. You may well find yourself asking, “Is there an AWS service for this component of my software?” So, get an AWS account today, and get your productivity boost in minutes.

The original article, by Minhao Zhang, a freelance software engineer at Toptal, can be read here.