Summary: There are several things holding back our use of deep learning methods and chief among them is that they are complicated and hard. Now there are three platforms that offer Automated Deep Learning (ADL) so simple that almost anyone can do it.

There are several things holding back our use of deep learning methods and chief among them is that they are complicated and hard.

A small percentage of our data science community has chosen the path of learning these new techniques, but it’s a major departure both in problem type and technique from the predictive and prescriptive modeling that makes up 90% of what we get paid to do.

Artificial intelligence, at least in the true sense of image, video, text, and speech recognition and processing, is on everyone’s lips, but it’s still hard to find a data scientist qualified to execute your project.

Actually when I list image, video, text, and speech applications I’m selling deep learning a little short. While these are the best known and perhaps most obvious applications, deep neural nets (DNNs) are also proving excellent at forecasting time series data, and also in complex traditional consumer propensity problems.

Last December as I was listing my predictions for 2018, I noted that Gartner had said that during 2018, DNNs would become a standard component in the toolbox of 80% of data scientists. My prediction was that while the first provider to accomplish this level of simplicity would certainly be richly rewarded, no way was it going to be 2018. It seems I was wrong.

Here we are and it’s only April and I’ve recently been introduced to three different platforms that have the goal of making deep learning so easy, anyone (well at least any data scientist) can do it.

Minimum Requirements

All of the majors and several smaller companies offer greatly simplified tools for executing CNNs or RNN/LSTMs, but these still require experimental hand tuning of the layer types and number, connectivity, nodes, and all the other hyperparameters that so often defeat initial success.

To be part of this group you need a truly one-click application that allows the average data scientist or even developer to build a successful image or text classifier.

The quickest route to this goal is by transfer learning. In DL, transfer learning means taking a previously built successful, large, complex CNN or RNN/LSTM model and using a new more limited data set to train against it.

Basically transfer learning, most used in image classification, reuses the features the more complex model has already learned and retrains only its final layers on your own categories. It works best when your categories resemble what the original model was trained on, for example subsets or summary categories of what’s there.

The advantage is that the hyperparameter tuning has already been done so you know the model will train. More importantly, you can build a successful transfer model with just a few hundred labeled images in less than an hour.
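As a rough illustration of the idea, here is a minimal sketch in plain Python. Everything in it is a hypothetical stand-in: `make_frozen_extractor` plays the role of a large pretrained network (its weights are fixed and never updated), the "images" are just four numbers each, and only a small logistic-regression head is trained on a few dozen labeled samples. None of this reflects how any of the platforms below actually implement transfer learning.

```python
import math
import random


def make_frozen_extractor(n_inputs, n_features, seed=0):
    """Stand-in for a large pretrained network: fixed weights that are never updated."""
    rng = random.Random(seed)
    weights = [[rng.gauss(0, 1) for _ in range(n_inputs)] for _ in range(n_features)]

    def extract(x):
        return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in weights]

    return extract


def sigmoid(z):
    # Clamp the score to avoid overflow in math.exp for extreme values.
    z = max(-60.0, min(60.0, z))
    return 1.0 / (1.0 + math.exp(-z))


def train_head(features, labels, epochs=100, lr=0.1):
    """Fit only a small logistic-regression head on top of the frozen features."""
    w = [0.0] * len(features[0])
    b = 0.0
    for _ in range(epochs):
        for f, y in zip(features, labels):
            error = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b) - y
            w = [wi - lr * error * fi for wi, fi in zip(w, f)]
            b -= lr * error
    return w, b


def predict(extract, head, x):
    w, b = head
    return 1 if sum(wi * fi for wi, fi in zip(w, extract(x))) + b > 0 else 0


def make_samples(n_per_class, rng):
    """Toy 'images': 4 numbers clustered around -1 (label 0) or +1 (label 1)."""
    samples = []
    for label, center in ((0, -1.0), (1, 1.0)):
        for _ in range(n_per_class):
            samples.append(([center + rng.gauss(0, 0.2) for _ in range(4)], label))
    rng.shuffle(samples)
    return samples


rng = random.Random(42)
extract = make_frozen_extractor(n_inputs=4, n_features=16)
train = make_samples(20, rng)  # a small labeled set, as in transfer learning
test = make_samples(20, rng)

# Only the head is trained; the extractor's weights stay untouched.
head = train_head([extract(x) for x, _ in train], [y for _, y in train])
accuracy = sum(predict(extract, head, x) == y for x, y in test) / len(test)
print(f"held-out accuracy: {accuracy:.2f}")
```

The point of the design is that the expensive part (the extractor) is reused as-is, so the only thing left to fit is a handful of weights in the head, which is why so little data and time are needed.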

The real holy grail of AutoDL, however, is fully automated hyperparameter tuning, not transfer learning. As you’ll read below, some are on track, and others claim to already have succeeded.

Microsoft CustomVision.AI

Late in 2017 MS introduced a series of greatly simplified DL capabilities covering the full range of image, video, text, and speech under the banner of the Microsoft Cognitive Services. In January they introduced their fully automated platform, Microsoft Custom Vision Services (https://www.customvision.ai/).

The platform is limited to image classifiers and promises to allow users to create robust CNN transfer models based on only a few images, capitalizing on MS’s huge existing library of large, complex, multi-image classifiers.

Using the platform is extremely simple. You drag and drop your images onto the platform and press go. You’ll need at least a pay-as-you-go Azure account and basic tech support runs $29/mo. It’s not clear how long the models take to train but since it’s transfer learning it should be quite fast and therefore, we’re guessing, inexpensive (but not free).

During project setup you’ll be asked to identify a general domain from which your image set will transfer learn and these currently are:

- General

- Food

- Landmarks

- Retail

- Adult

- General (compact)

- Landmarks (compact)

- Retail (compact)

While all these models will run from a RESTful API once trained, the last three categories (marked ‘compact’) can be exported to run offline on any iOS or Android edge device. Export is to the CoreML format for iOS 11 and to the TensorFlow format for Android. This should entice a variety of app developers who may not be data scientists to add instant image classification to their apps.

You can bet MS will be rolling out more complex features as fast as possible.

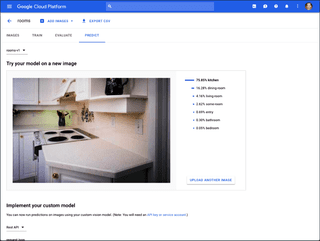

Google Cloud AutoML

Also in January, Google announced its similar entry, Cloud AutoML. The platform is in alpha and requires an invitation to participate.

Like Microsoft, the service utilizes transfer learning from Google’s own prebuilt complex CNN classifiers. They recommend at least 100 images per label for transfer learning.

It’s not clear at this point what categories of images will be allowed at launch, but user screens show guidance for general, face, logo, landmarks, and perhaps others. From screenshots shared by Google it appears these models train in the range of about 20 minutes to a few hours.

In the data we were able to find, use appears to be via API. There’s no mention of export code for offline use. Early alpha users include Disney and Urban Outfitters.

Anticipating that many new users won’t have labeled data, Google offers access to its own human-labeling services for an additional fee.

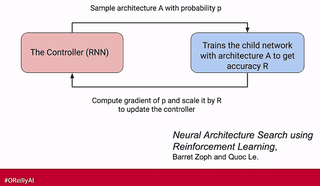

Beyond transfer learning, all the majors including Google are pursuing ways of automating the tuning of CNNs and RNNs. Handcrafted models are the norm today and are the reason so many iterations, often unsuccessful, are required.

Google calls this next technology Learn2Learn. Currently they are experimenting with RNNs to optimize layers, layer types, nodes, connections, and the other hyperparameters. Since this is basically very high-speed random search, the compute resources can be extreme.

Next on the horizon is the use of evolutionary algorithms to do the same, which are much more efficient in terms of time and compute. In a recent presentation, Google researchers showed good results from this approach, but they were still taking 3 to 10 days to train just for the optimization.
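To make the two search styles concrete, here is a toy sketch in Python. It is not Google's method. The search space, the `toy_score` function (a made-up stand-in for "train a network and measure validation accuracy"), and all parameter values are assumptions chosen purely for illustration, and the example makes no attempt to reproduce the efficiency difference described above.

```python
import math
import random

# Hypothetical search space; real systems search far larger ones.
SEARCH_SPACE = {
    "layers": [2, 4, 8, 16],
    "nodes": [32, 64, 128, 256],
    "learning_rate": [0.1, 0.01, 0.001, 0.0001],
}


def toy_score(config):
    """Made-up stand-in for validation accuracy; a real run would train a model here."""
    return (
        1.0
        - 0.02 * abs(config["layers"] - 8)
        - 0.001 * abs(config["nodes"] - 128)
        - 0.1 * abs(math.log10(config["learning_rate"]) + 2)
    )


def random_config(rng):
    return {name: rng.choice(values) for name, values in SEARCH_SPACE.items()}


def random_search(n_trials, rng):
    """Sample configurations independently and keep the best one."""
    return max((random_config(rng) for _ in range(n_trials)), key=toy_score)


def mutate(config, rng):
    """Copy a configuration and resample one randomly chosen hyperparameter."""
    child = dict(config)
    name = rng.choice(list(SEARCH_SPACE))
    child[name] = rng.choice(SEARCH_SPACE[name])
    return child


def evolutionary_search(population_size, generations, rng):
    """Keep the better half each generation and refill with mutated copies."""
    population = [random_config(rng) for _ in range(population_size)]
    history = []
    for _ in range(generations):
        population.sort(key=toy_score, reverse=True)
        survivors = population[: population_size // 2]
        history.append(toy_score(survivors[0]))
        children = [
            mutate(rng.choice(survivors), rng)
            for _ in range(population_size - len(survivors))
        ]
        population = survivors + children
    return max(population, key=toy_score), history


rng = random.Random(0)
random_best = random_search(n_trials=20, rng=rng)
evolved_best, history = evolutionary_search(population_size=10, generations=5, rng=rng)
print("random search best:", random_best, round(toy_score(random_best), 3))
print("evolutionary best: ", evolved_best, round(toy_score(evolved_best), 3))
```

The difference in spirit is that random search treats every trial as independent, while the evolutionary loop builds each new candidate from one that already scored well. Because the survivors are carried forward unchanged, the best score in `history` can never get worse from one generation to the next.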

OneClick.AI

OneClick.AI is an automated machine learning (AML) platform that came to market late in 2017 and includes both traditional algorithms and deep learning algorithms.

OneClick.AI would be worth a look based on just its AML credentials, which include blending, prep, feature engineering, and feature selection, followed by running multiple traditional models in parallel to identify a champion model.

However, what sets OneClick apart is that it includes both image and text DL algos, with both transfer learning and fully automated hyperparameter tuning for de novo image or text deep learning models.

Unlike Google and Microsoft they are ready to deliver on both image and text. Beyond that, they blend DNNs with traditional algos in ensembles, and use DNNs for forecasting.

Forecasting is a little explored area of use for DNNs, but they’ve been shown to easily outperform other time series forecasters like ARIMA and ARIMAX.

For a platform with this complex offering of tools and techniques, it maintains its claim to one-click, data-in-model-out ease, which I identify as the minimum requirement for Automated Machine Learning and which here also covers Automated Deep Learning.

The methods used for optimizing its deep learning models are proprietary, but Yuan Shen, Founder and CEO, describes it as using AI to train AI, presumably a deep learning approach to optimization.

Which is Better?

It’s much too early to expect much in the way of benchmarking but there is one example to offer, which comes from OneClick.AI.

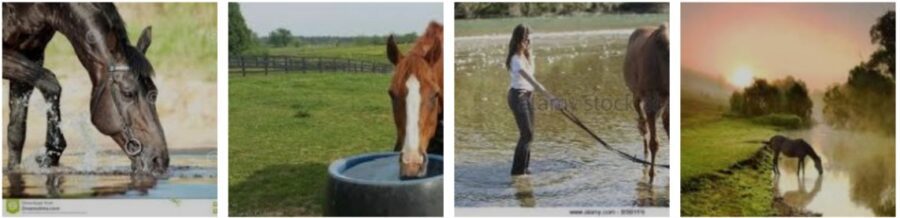

In a hackathon earlier this year the group tested OneClick against Microsoft’s CustomVision (Google AutoML wasn’t available). Two image classification problems were tested:

- Tagging photos with horses running or horses drinking water.

- Detecting photos with nudity.

The horse tagging task was multi-label classification, and the nudity detection task was binary classification. For each task they used 20 images for training, and another 20 for testing.

- Horse tagging accuracy: 90% (OneClick.ai) vs. 75% (Microsoft Custom Vision)

- Nudity detection accuracy: 95% (OneClick.ai) vs. 50% (Microsoft Custom Vision)

This lacks statistical significance, since it rests on only 20 test images per task and a very small training sample for transfer learning. However, the results look promising.
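To get a feel for how much uncertainty 20 test images leave, here is a small sketch that computes 95% Wilson score intervals for the reported accuracies. It assumes each accuracy is simply the fraction of the 20 test images classified correctly (18, 15, 19, and 10 out of 20), which the hackathon write-up does not state explicitly, especially for the multi-label task. The function name and the `z=1.96` default are choices made for this example.

```python
import math


def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a binomial proportion (z=1.96 gives about 95%)."""
    p = successes / n
    denom = 1 + z * z / n
    center = (p + z * z / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return center - half_width, center + half_width


# Assumed counts out of 20 test images, derived from the reported percentages.
results = {
    "horse tagging, OneClick.ai": 18,
    "horse tagging, Custom Vision": 15,
    "nudity detection, OneClick.ai": 19,
    "nudity detection, Custom Vision": 10,
}

intervals = {name: wilson_interval(k, 20) for name, k in results.items()}
for name, (low, high) in intervals.items():
    print(f"{name}: {low:.2f} to {high:.2f}")
```

With so few test images every interval is wide, which is why a comparison like this is better read as encouraging than as conclusive.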

This is transfer learning. We’re very interested to see comparisons of the automated model optimization. OneClick’s is ready now. Google should follow shortly.

You may also be asking, where is Amazon in all of this? In our search we couldn’t find any reference to a planned AutoDL offering, but it can’t be far behind.

About the author: Bill Vorhies is Editorial Director for Data Science Central and has practiced as a data scientist since 2001. He can be reached at: