Topic detection

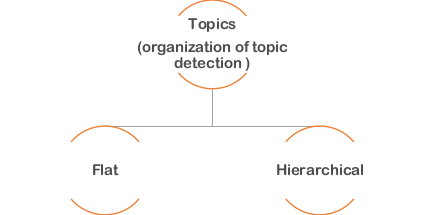

Introduction to topic models: In machine learning and natural language processing, a topic model is a type of statistical model for discovering the abstract “topics” that occur in a collection of documents. Topic modeling is a frequently used text-mining tool for discovering hidden semantic structures in a body of text. In topic modeling, a topic is defined by a cluster of words, each with a probability of occurrence under that topic; different topics have their own clusters of words and corresponding probabilities. Different topics may share some words, and a document can have more than one topic associated with it. Overall, topic modeling is an unsupervised machine learning technique for organizing text (or images, DNA sequences, etc.) so that related pieces can be identified.

Architecture of topics:

Figure 1. Architecture of topics

Figure 1 shows the organization of topics. Topics come in two forms, flat and hierarchical; in other words, there are two kinds of topic detection methods (topic models). Flat topic models and their extensions can only find topics in a flat structure and fail to discover the hierarchical relationships among topics. This drawback limits the application of topic models, since many applications have inherent hierarchical topic structures, such as the category hierarchy of Web pages, the aspect hierarchy in reviews, and the research topic hierarchy in the academic community. In a topic hierarchy, topics near the root have more general semantics, and topics close to the leaves have more specific semantics.

Topic modeling approaches:

Figure 2. Topic modeling approaches

Popular topic modeling approaches are illustrated in Figure 2. These approaches can be divided into two categories: probabilistic methods and non-probabilistic methods.
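To make the definition of a topic from the introduction concrete, the following is a minimal sketch in plain Python. It represents each topic as a probability distribution over words and a document as a mixture of topics. The topic names, words, and probabilities are invented for illustration, and the helper functions `word_probability` and `shared_words` are hypothetical names, not part of any library.

```python
# Hypothetical toy topics: each topic maps words to p(word | topic).
# The probabilities within one topic sum to 1.
TOPICS = {
    "sports": {"game": 0.4, "team": 0.3, "score": 0.2, "model": 0.1},
    "machine_learning": {"model": 0.5, "data": 0.3, "score": 0.2},
}

# Hypothetical topic mixture of a single document: p(topic | document).
DOC_MIXTURE = {"sports": 0.25, "machine_learning": 0.75}


def word_probability(word, mixture, topics):
    """Return p(word | document) as a weighted sum over the document's topics."""
    return sum(
        weight * topics[name].get(word, 0.0)
        for name, weight in mixture.items()
    )


def shared_words(topics):
    """Return the set of words that appear in more than one topic."""
    seen = set()
    shared = set()
    for word_probs in topics.values():
        for word in word_probs:
            if word in seen:
                shared.add(word)
            else:
                seen.add(word)
    return shared


if __name__ == "__main__":
    for word in ["game", "model", "score"]:
        print(word, round(word_probability(word, DOC_MIXTURE, TOPICS), 3))
    print(sorted(shared_words(TOPICS)))
```

In this toy setup the words "model" and "score" belong to both topics, and the document, which is weighted mostly toward the machine learning topic, assigns its highest word probability to "model". A real topic model would estimate both the word distributions and the document mixtures from data rather than take them as given.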
Probabilistic methods usually model topics as latent factors and assume that the joint probability of the words and the documents can be described by a mixture of conditional probabilities over those latent factors; examples are latent Dirichlet allocation (LDA) and the hierarchical Dirichlet process (HDP). In contrast, non-probabilistic methods usually use non-negative matrix factorization (NMF) or dictionary learning to uncover low-rank structure through matrix factorization. NMF methods extend matrix-factorization-based methods to find topics in a text stream. All of the methods mentioned above are static topic detection methods and cannot handle the evolution of topics over time. Various extensions of these methods have therefore been proposed to handle this issue.

References:

Xu, Y., Yin, J., Huang, J., & Yin, Y. (2018). Hierarchical topic modeling with automatic knowledge mining. Expert Systems with Applications, 103, 106-117.

Chen, J., Zhu, J., Lu, J., & Liu, S. (2018). Scalable training of hierarchical topic models. Proceedings of the VLDB Endowment, 11(7), 826-839.