This blog is the second part of a two-blog series. Here, we discuss different sectors where reinforcement learning can be used to solve complex problems efficiently. The blog is based on this paper. In the previous post, we covered the basics of reinforcement learning and how to frame a problem as a reinforcement learning problem. In this follow-up post, we look at how real-world reinforcement learning applications can be developed.

In general, to formulate any reinforcement learning problem, we need to define the environment, the agent, states, actions, and rewards. This idea forms the basis for the examples in this post.
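To make these ingredients concrete, here is a minimal sketch of the agent-environment loop in Python. The `CorridorEnv` environment, the `RandomAgent`, and the `reset`/`step`/`act` method names are hypothetical choices for illustration (loosely following the common Gym-style convention), not an API from the paper.

```python
import random
from dataclasses import dataclass


@dataclass
class Step:
    """What the environment returns after the agent acts."""
    state: int
    reward: float
    done: bool


class CorridorEnv:
    """Hypothetical toy environment: states 0..length-1, goal at the right end."""

    def __init__(self, length: int = 5):
        self.length = length
        self.state = 0

    def reset(self) -> int:
        self.state = 0
        return self.state

    def step(self, action: int) -> Step:
        # Action 0 moves left, action 1 moves right; walls clamp the position.
        move = 1 if action == 1 else -1
        self.state = min(max(self.state + move, 0), self.length - 1)
        done = self.state == self.length - 1
        reward = 1.0 if done else 0.0  # reward only for reaching the goal
        return Step(self.state, reward, done)


class RandomAgent:
    """Placeholder agent that ignores the state and acts uniformly at random."""

    def __init__(self, n_actions: int, seed: int = 0):
        self.n_actions = n_actions
        self.rng = random.Random(seed)

    def act(self, state: int) -> int:
        return self.rng.randrange(self.n_actions)


def run_episode(env, agent, max_steps: int = 100) -> float:
    """Agent-environment loop: observe the state, act, collect the reward."""
    state = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = agent.act(state)
        step = env.step(action)
        total_reward += step.reward
        state = step.state
        if step.done:
            break
    return total_reward


if __name__ == "__main__":
    print(run_episode(CorridorEnv(), RandomAgent(n_actions=2)))
```

In each application that follows, the loop stays the same; only the definitions of the state, the action set, and the reward signal change.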

We cover reinforcement learning for:

- Recommender systems

- Energy management

- Finance

- Transportation

- Healthcare

Recommender systems

Algorithms for recommender systems are constantly evolving, and reinforcement learning techniques play a key part in them. Recommender systems face some unique challenges that reinforcement learning techniques can address:

- The idiosyncratic nature of actions

- A high degree of unobservability

- Stochasticity in preferences, activities, personality, etc.

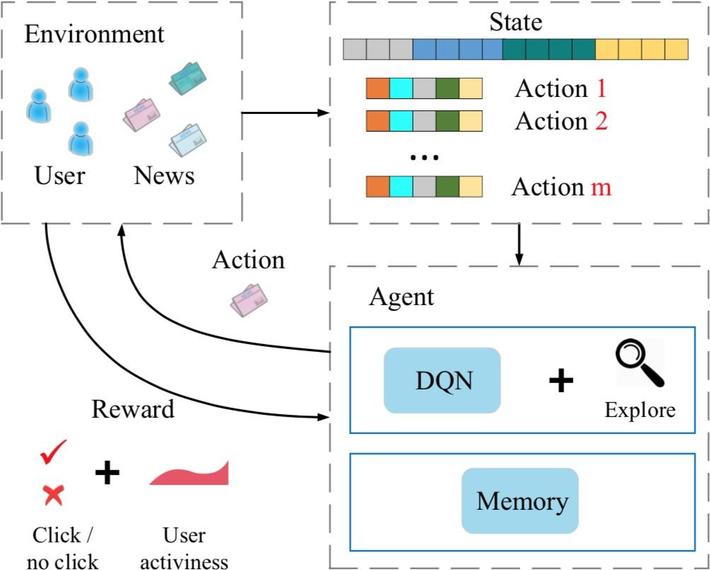

Horizon is Facebook's open-source applied reinforcement learning platform for recommendations. We can formulate the scenario illustrated in the figure in reinforcement learning terms as follows:

- The action is sending or dropping a notification.

- The agent is the recommender system.

- The rewards are the user's interactions and activities on Facebook.

- The environment is the user and the news.

- The state comprises the ongoing interaction and engagement between the user and the news, along with the features representing the candidate to be notified.

Deep RL news recommender system. (from Zheng et al. (2018))
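The send/drop decision can be sketched as a one-step (bandit-style) problem in which the agent learns, for each user state, the expected reward of each action. This is a minimal illustration under stated assumptions, not how Horizon itself is implemented: the two user states, the `simulated_user` reward model, and the epsilon-greedy rule are all hypothetical.

```python
import random
from collections import defaultdict

ACTIONS = ("drop", "send")  # hypothetical action names


class NotificationAgent:
    """Epsilon-greedy estimate of the reward for sending vs dropping a notification."""

    def __init__(self, epsilon: float = 0.1, seed: int = 0):
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.q = defaultdict(float)  # (state, action) -> average observed reward
        self.n = defaultdict(int)    # (state, action) -> number of observations

    def greedy(self, state):
        return max(ACTIONS, key=lambda a: self.q[(state, a)])

    def act(self, state):
        if self.rng.random() < self.epsilon:
            return self.rng.choice(ACTIONS)  # explore
        return self.greedy(state)            # exploit

    def update(self, state, action, reward):
        # Incremental sample average: Q <- Q + (r - Q) / n
        key = (state, action)
        self.n[key] += 1
        self.q[key] += (reward - self.q[key]) / self.n[key]


def simulated_user(state, action, rng):
    """Hypothetical user model standing in for real interaction logs."""
    if action == "drop":
        return 0.0
    if state == "engaged":
        return 1.0 if rng.random() < 0.6 else 0.0   # often interacts
    return -0.5 if rng.random() < 0.9 else 0.5      # usually annoyed


def train(episodes: int = 5000, seed: int = 0) -> NotificationAgent:
    rng = random.Random(seed)
    agent = NotificationAgent(seed=seed)
    for _ in range(episodes):
        state = rng.choice(("engaged", "disengaged"))
        action = agent.act(state)
        agent.update(state, action, simulated_user(state, action, rng))
    return agent


if __name__ == "__main__":
    agent = train()
    for s in ("engaged", "disengaged"):
        print(s, agent.greedy(s))
```

A production system would replace the two-valued state with rich user and candidate features and the table with a learned model, but the mapping of agent, action, and reward is the same.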

Energy

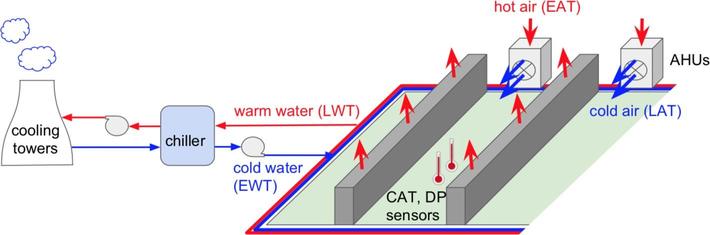

Cooling is essential for data centers: it keeps equipment temperatures within safe limits, and doing it efficiently conserves energy. Reinforcement learning can be used effectively for data center cooling. In the approach discussed here, model predictive control (MPC) is used to monitor and regulate temperature and airflow in the data center through controls such as fan speeds, water flow regulators, and air handling units (AHUs). The problem can be framed in reinforcement learning terms as follows:

- The agent is the controller. It learns a linear model of the data center's dynamics through random, safe exploration, with little or no prior knowledge.

- The manipulated variables (e.g., fan speed to control airflow, valve opening) represent the controls, or actions.

- The reward is the negative cost of a trajectory, so maximizing reward means minimizing cost.

- The process variables to predict and regulate (differential air pressure, cold-aisle temperature, entering air temperature to each AHU, leaving air temperature (LAT) from each AHU, etc.) represent the state.

- The agent optimizes the cost of a trajectory under the learned model and recomputes its actions at each step, which mitigates the effect of model error and compensates for unexpected disturbances.

For reinforcement learning purposes, the data center is modelled as a control loop for the cooling process, as illustrated in the figure.
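The control loop can be sketched in Python with NumPy: first identify a linear model `x_next ≈ A @ x + B @ u` from small random control perturbations, then at every step choose controls by minimizing a predicted trajectory cost and apply only the first one (receding horizon). Everything concrete here is an assumption for illustration: the two-dimensional state, the `A_TRUE`/`B_TRUE` plant, the quadratic cost, and random-shooting optimization in place of the solver a production MPC would use.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical plant, unknown to the agent. State x = deviations of (cold-aisle
# temperature, differential air pressure) from setpoints; control u = changes to
# (fan speed, valve opening). Dynamics: x_next = A x + B u + noise.
A_TRUE = np.array([[0.9, 0.1], [0.0, 0.8]])
B_TRUE = np.array([[-0.5, 0.0], [0.1, -0.3]])


def plant_step(x, u):
    return A_TRUE @ x + B_TRUE @ u + rng.normal(0.0, 0.01, size=2)


def learn_linear_model(n_samples=200):
    """Fit A, B by least squares from small random ('safe') control perturbations."""
    xs, us, xs_next = [], [], []
    x = np.zeros(2)
    for _ in range(n_samples):
        u = rng.uniform(-0.1, 0.1, size=2)  # small exploratory moves only
        x_next = plant_step(x, u)
        xs.append(x)
        us.append(u)
        xs_next.append(x_next)
        x = x_next
    Z = np.hstack([np.array(xs), np.array(us)])  # each row is [x, u]
    theta, *_ = np.linalg.lstsq(Z, np.array(xs_next), rcond=None)
    return theta[:2].T, theta[2:].T  # A_hat, B_hat


def trajectory_cost(x, controls, A, B, Q, R):
    """Quadratic cost of a control sequence under the learned model (reward = -cost)."""
    cost = 0.0
    for u in controls:
        x = A @ x + B @ u
        cost += x @ Q @ x + u @ R @ u
    return cost


def mpc_action(x, A, B, horizon=5, n_candidates=500):
    """Random-shooting MPC: score sampled control sequences, return the first
    control of the cheapest one (receding horizon)."""
    Q, R = np.eye(2), 0.1 * np.eye(2)
    candidates = rng.uniform(-1.0, 1.0, size=(n_candidates, horizon, 2))
    costs = [trajectory_cost(x, seq, A, B, Q, R) for seq in candidates]
    return candidates[int(np.argmin(costs))][0]


def run_closed_loop(A_hat, B_hat, x0, steps=30):
    x = np.asarray(x0, dtype=float)
    for _ in range(steps):
        u = mpc_action(x, A_hat, B_hat)  # re-plan every step
        x = plant_step(x, u)             # the real plant also has noise
    return x


if __name__ == "__main__":
    A_hat, B_hat = learn_linear_model()
    print("final state:", run_closed_loop(A_hat, B_hat, [2.0, -1.0]))
```

Re-planning at every step is what lets the controller tolerate an imperfect learned model: errors in one prediction are corrected at the next measurement.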

Finance

I have worked extensively in the financial sector, and based on that domain knowledge, I think many problems in finance can be modelled as sequential decision problems. Reinforcement learning can be applied to several of them, including option pricing, portfolio optimization, and risk management.

In option pricing, the challenge is determining the right price for the option. To formulate option pricing as a reinforcement learning problem, we again define the states, actions, and rewards:

- The uncertainties affecting the option price are captured as part of the state. These include financial and economic factors such as interest rates.

- The action is the decision to exercise the option (or to continue holding it).

- The reward is the option's intrinsic value, received in the state in which it is exercised.

When we model option pricing as a reinforcement learning problem, training revolves around learning the state-action value function, Q(s, a).
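To illustrate the state-action value function for option pricing, the sketch below prices an American put on a Cox-Ross-Rubinstein binomial tree. At each node (state), Q(s, exercise) is the intrinsic value and Q(s, hold) is the discounted risk-neutral expectation of the next state's value; the option price is the best action value at the root. This is dynamic programming with a known model; model-free RL methods would instead estimate the same Q-function from simulated price paths. The parameters in the usage line are arbitrary examples.

```python
import math


def american_put_q_values(s0, strike, rate, sigma, maturity, steps):
    """Price an American put by backward induction over Q(s, a) on a binomial tree.

    State s = (time step t, number of up-moves j); actions = exercise / hold.
    Returns (price, q_table) where q_table[(t, j)] = {"exercise": ..., "hold": ...}.
    """
    dt = maturity / steps
    up = math.exp(sigma * math.sqrt(dt))     # Cox-Ross-Rubinstein up factor
    down = 1.0 / up
    disc = math.exp(-rate * dt)               # one-step discount factor
    p_up = (math.exp(rate * dt) - down) / (up - down)  # risk-neutral probability

    def intrinsic(price):
        return max(strike - price, 0.0)

    # State values at maturity: the only choice left is to take the payoff.
    values = [intrinsic(s0 * up**j * down**(steps - j)) for j in range(steps + 1)]
    q_table = {}
    for t in range(steps - 1, -1, -1):
        new_values = []
        for j in range(t + 1):
            price = s0 * up**j * down**(t - j)
            q_exercise = intrinsic(price)  # reward for exercising now
            q_hold = disc * (p_up * values[j + 1] + (1.0 - p_up) * values[j])
            q_table[(t, j)] = {"exercise": q_exercise, "hold": q_hold}
            new_values.append(max(q_exercise, q_hold))  # V(s) = max_a Q(s, a)
        values = new_values
    return values[0], q_table


if __name__ == "__main__":
    price, q = american_put_q_values(100.0, 100.0, 0.05, 0.2, 1.0, 200)
    print(f"American put price: {price:.4f}")
```

Comparing `q_table[(t, j)]["exercise"]` with `q_table[(t, j)]["hold"]` at each node recovers the exercise policy as well as the price.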

Transportation

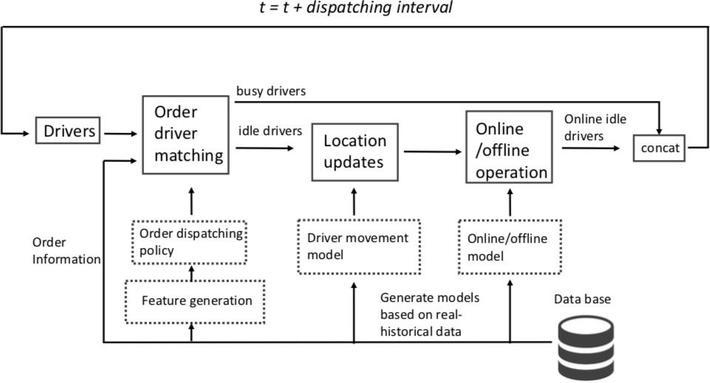

In the transportation sector, reinforcement learning is applied to improve efficiency and reduce costs. Order dispatching in ridesharing systems (e.g., Uber) is one of the best-known applications of RL in transportation. Allocating a driver to a passenger is a complex process that depends on factors such as demand prediction, route planning, and fleet management, and the problem has both spatial and temporal components. It can be formulated as a reinforcement learning problem where:

- The state is composed of a driver's geographical status, the raw timestamp, and a contextual feature vector (e.g., driver service statistics, holiday indicators).

- An option (a temporally extended action) represents a driver's state transition spanning multiple time steps.

- A policy gives the probability of taking an option in a state. The RL algorithm aims to estimate an optimal policy and/or its value function.

Composition and workflow of the order dispatching simulator. (from Tang et al. (2019))

The model is initialized using historical data. After that, the process is driven by an order dispatch policy learned with reinforcement learning.
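Because an option spans several time steps, its value update discounts the next state's value by gamma raised to the option's duration (a semi-Markov TD update). The sketch below learns V(cell, time slot) from historical trip records and then scores a candidate driver-order match by its advantage. The trip data, the cell names, crediting the whole fare at once, and the `dispatch_score` rule are hypothetical simplifications, not the exact method of Tang et al.

```python
from collections import defaultdict

GAMMA = 0.9  # per-time-step discount (assumed)


def option_td_update(values, state, reward, duration, next_state, alpha=0.1, gamma=GAMMA):
    """TD(0) update for an option (e.g. serving a trip) lasting `duration` steps.

    The next state's value is discounted by gamma**duration rather than gamma,
    so a long trip defers future value more than a short one."""
    target = reward + gamma**duration * values[next_state]
    values[state] += alpha * (target - values[state])


def fit_from_history(trips, sweeps=200, alpha=0.1):
    """Estimate V(cell, time_slot) by replaying historical trips."""
    values = defaultdict(float)
    for _ in range(sweeps):
        for state, reward, duration, next_state in trips:
            option_td_update(values, state, reward, duration, next_state, alpha)
    return values


def dispatch_score(values, driver_state, fare, duration, dest_state, gamma=GAMMA):
    """Advantage of assigning an order: immediate fare plus discounted value of
    the drop-off state, minus the value of the driver's current state."""
    return fare + gamma**duration * values[dest_state] - values[driver_state]


# Hypothetical history: (state, fare, duration in steps, next state),
# where a state is (grid cell, time slot).
TRIPS = [
    (("A", 0), 10.0, 2, ("B", 2)),
    (("B", 2), 5.0, 1, ("C", 3)),
]

if __name__ == "__main__":
    v = fit_from_history(TRIPS)
    print(round(v[("A", 0)], 3), round(v[("B", 2)], 3))
```

Scoring orders by advantage rather than by fare alone favors trips that leave drivers in high-value locations and time slots.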

Healthcare

Healthcare is one of the most important sectors for AI, with many opportunities and challenges where reinforcement learning could be used. We discuss some of these below.

- Dynamic treatment regimes (DTRs): a DTR is a treatment process consisting of a sequence of decision rules that determine how a patient's ongoing treatment should evolve, based on the current state and the covariate history (a covariate is any continuous variable expected to correlate with the outcome variable). DTRs apply to personalized treatment plans, typically for chronic conditions.

For DTRs, we could consider the following:

- The state is a multidimensional discrete-time series of variables relevant to the treatment (demographics, vital signs, etc.). Clustering can be used to determine the state space so that patients in the same cluster have similar observable properties.

- An action is the medical treatment the patient receives, administered as doses of medication over a sequence of time steps.

- The reward directly reflects the patient's health, indicating whether it improves or deteriorates.
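A DTR formulation along these lines can be sketched as follows: patient observations are mapped to the nearest cluster centroid to obtain a discrete state, and tabular Q-learning is run over logged treatment records. The centroids, the two vital signs, the dose levels, and the toy records are all hypothetical; a real study would fit the clusters (e.g. with k-means) on actual patient data.

```python
import math
from collections import defaultdict

# Hypothetical cluster centroids over (heart rate, systolic blood pressure),
# standing in for clusters fitted offline (e.g. with k-means) on patient data.
CENTROIDS = {"stable": (75.0, 120.0), "critical": (110.0, 85.0)}
ACTIONS = ("low_dose", "high_dose")  # hypothetical dose levels


def to_state(vitals):
    """Discretize an observation by assigning it to the nearest centroid."""
    return min(CENTROIDS, key=lambda c: math.dist(vitals, CENTROIDS[c]))


def offline_q_learning(records, gamma=0.9, alpha=0.1, sweeps=300):
    """Tabular Q-learning over logged (vitals, action, reward, next_vitals, done)."""
    q = defaultdict(float)
    for _ in range(sweeps):
        for vitals, action, reward, next_vitals, done in records:
            s, s_next = to_state(vitals), to_state(next_vitals)
            best_next = 0.0 if done else max(q[(s_next, a)] for a in ACTIONS)
            q[(s, action)] += alpha * (reward + gamma * best_next - q[(s, action)])
    return q


def recommend(q, vitals):
    s = to_state(vitals)
    return max(ACTIONS, key=lambda a: q[(s, a)])


# Toy logs: reward +1 if health improves or stays good, -1 if it deteriorates.
RECORDS = [
    ((108.0, 88.0), "high_dose", 1.0, (78.0, 118.0), False),   # critical -> stable
    ((112.0, 82.0), "low_dose", -1.0, (109.0, 86.0), False),   # critical -> critical
    ((74.0, 121.0), "low_dose", 1.0, (76.0, 119.0), True),     # stable, episode ends
    ((77.0, 118.0), "high_dose", -1.0, (105.0, 90.0), False),  # stable -> critical
]

if __name__ == "__main__":
    q = offline_q_learning(RECORDS)
    print(recommend(q, (111.0, 84.0)), recommend(q, (73.0, 122.0)))
```

Learning from logged records rather than by trial and error reflects the healthcare setting, where exploring treatments on patients is not an option.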

Try one yourself

Another healthcare application of reinforcement learning is generating reports from medical images. A medical report comprises specific segments such as the findings, the report's conclusion (main finding and diagnosis), and any secondary information. I leave it to the reader to formulate this as a reinforcement learning problem.

Hint: In this scenario, a CNN (convolutional neural network) first extracts visual features from a set of images and transforms them into a context vector. From this context vector, a sentence decoder recurrently generates latent topics. For each latent topic, a retrieval policy module produces a sentence either by generating it or by retrieving a template. The RL-based retrieval policy integrates prior human knowledge.

Hope you enjoyed reading the blog! For any questions or doubts, please drop a comment.

About Me (Kajal Singh)

Kajal Singh is a Data Scientist and a Tutor on the Artificial Intelligence: Cloud and Edge Implementations course at the University of Oxford. She is also the co-author of the book Applications of Reinforcement Learning to Real-World Data: An educational introduction to the fundamentals of Reinforcement Learning with practical examples on real data (2021).