Summary: Advanced analytics and AI are the fourth great lever available to create organic improvement in corporations. We’ll describe why this one is different from the first three and why the CEO needs the direct help of data scientists to make this happen.

If you’re a CEO or any other flavor of top executive leading a large company (or if you’re a data scientist trying to help one), then the mission to create continuous improvement is obvious. Ultimately you probably think about this in terms of EBITDA, shareholder value, or some similar KPI like top-line or bottom-line growth. The real challenge, however, is knowing how to make that happen.

My favorite job description of the CEO is this:

- Being a good communicator.

- Being a strategic thinker.

- Being a leg breaker.

That was told to me by a Fortune 500 CEO who said he was good at two out of three. He didn’t say which.

Of course a CEO or other top executive doesn’t actually go out and break legs, or even directly hire, fire, or manage the detailed operations of a company. Their job is to be a leader of leaders, to point out and prioritize the types and methods of change that are necessary now, and to provide and allocate sufficient resources in the right places.

Ultimately, if you’re looking for levers to create positive organic change, there are only three places to look: people, process, and technology.

Where Advanced Analytics and AI Fit in This

Where advanced analytics and AI fit in all this can be a little tricky for someone not brought up in the ways of data science.

Is this a particular batch of people with these skills and knowledge that we should be hiring? Or is it more of a process, as in the way people should think about problems (analytically, based on data)? Or is it a set of technologies that we need to acquire and integrate?

Well, yes. That’s what makes it tricky: it’s a little bit of all these things. If you’re used to thinking about people, process, and technology as separate levers, you may miss the important parts of how advanced analytics and AI are enabling change.

A Little History about the Levers of Change

You can draw a straight line through the four major historical enablers of organic corporate improvement dating from the early 1990s through today. I know. I was there to see it in my career as a management consultant prior to my pivot to data science.

In the early 90s the major lever was Process Improvement and Process Value Analysis. The core of this was about breaking down the traditional organizational silos between ‘departments’ and charting the path the major elements of work took across those often walled-off groups.

You could save a lot of the time people spent on tasks and significantly speed up delivery of the desired end result. That would save a lot of money and serve customers better: basically, doing more with less by using resources efficiently.

By the mid-90s we began to think in terms of Reengineering. Does anybody remember Tom Davenport’s seminal book that kicked this off, ‘Process Innovation – Reengineering Work Through Information Technology’? That’s where the phrase came from.

Reengineering was the marriage of Process Improvement and information technology. But we were no longer concerned with making existing processes better. We were set free to imagine how wholly new processes could be created if they were aided by IT.

At the outset, this involved specifying and developing large and elaborate custom software solutions. It was slow and very expensive but could deliver extremely large bottom-line improvements and really let you break out from your competitors.

Toward the end of the 90s, the big software firms like Oracle, PeopleSoft, and SAP had created large standardized ERP systems based on our early experiences in custom reengineering. It was still expensive, but at least someone else was responsible for maintaining the standardized software. And these new platforms had most of the best practices baked in. We called this Package-Enabled Reengineering.

In the late 90s and early 2000s we pivoted from information technology as a tool to information technology as a way to reveal the value locked up in our stored information. At the time we called this the beginning of the Information Age. These days, since we understand that all the data in our enterprise data warehouses (EDWs) is historical and basically backward looking, it’s more accurate to call this the age of Business Intelligence. EDWs were our tools, and we birthed the term ‘Data Mining’.

Advanced Analytics and AI – The Fourth Lever of Change

By the mid-2000s the earliest commercial foundations of advanced analytics, primarily based on SAS and SPSS, were easily identifiable. I started my practice in 2001, and business was still very thin. I spent most of my time explaining to clients what predictive modeling was, when I was really hoping they would be saying ‘give me more.’

It really wasn’t until around 2012 (an admittedly inexact estimate) that word got around the boardrooms that advanced analytics was the next great competitive differentiator. That period also marks the boom in data scientist hiring, the earliest commercial adoption of Hadoop, and the first consumer applications driven by deep learning.

Why Is It Taking So Long

Managing people, processes, and technology as separate entities is something that executives are comfortable with. Process Improvement, Reengineering, and even Business Intelligence are concepts that can be reasonably well understood by reading a good book, and their adoption by corporations reflects that. None of those previous major levers of change required more than two or three years to reach majority adoption among the Fortune 500.

So why are advanced analytics and AI taking so long to reach critical mass in adoption? By the way, although there are still no definitive surveys or studies, my guess is that 2018 is the inflection point.

First off, the capabilities and maturity of our profession and toolset have taken longer to develop than those of the earlier levers. The progression from predictive modeling, to prescriptive modeling with optimization, to NoSQL and deep learning has taken more than a decade (as measured by commercially acceptable tools and techniques, not from their much earlier academic roots).

Second, there is our somewhat self-inflicted shortage of data scientists, made worse by the slower productivity and lack of standardization that followed when we decided it was better to write code in R than to use the newly resurgent drag-and-drop platforms. Those platforms are now evolving into ever simpler, faster, more accurate automated ML tools that are helping to alleviate the talent shortage.

And third, our development was somewhat delayed as we waited for hardware and cloud environments to catch up with our needs.

But perhaps the greatest current impediment to adoption is what we discussed earlier, that embracing advanced analytics and AI requires thinking about people, process, and technology in a wholly new integrated way.

As McKinsey has said, the most valuable person in the advanced analytics value chain is now the Analytics Translator: the guy or gal who can translate business problems into data science projects and make them happen.

Data science in its many forms is a much more complex toolset than those previous levers of change. Seeing opportunities for improvement requires an understanding of what is possible and where the pitfalls lie. That requires more than a quick read of the most recent popular book. It requires some in-depth understanding of all the capabilities of data science.

How to Make It Happen

So now we come full circle, back to you data scientists, who are the key to helping top executives see the opportunities.

What the CEO wants and needs is not an education in data science. It is a simply laid out and well-presented set of options. Let’s call this a Portfolio of Improvement Projects (PIP) based on advanced analytics and AI.

You, the data scientist and Analytics Translator, are the most qualified to lead this, but it’s a team effort: it needs folks who are expert in the particular target process, analysts to get at the historical data, and folks who are good at estimating costs and benefits.

Each of the projects you propose should be evaluated for:

- Net benefit and time to payback

- Required investment and resources both internal and external

- Probability of success

- Risk

- Resource constraints

- Interdependencies among projects (which constrain sequence and timing)

These may seem like the common elements any good project manager would evaluate, except that you will need to apply your unique insight into the special requirements that data science tools and techniques impose.

Also, be prepared for the fact that not all executives or organizations will rank these the same way. While Net Benefit, Time to Payback, and Investment (the basis for ROI) may seem the most important, I’ve personally encountered some CEOs who put greater weight on risk avoidance and probability of success.
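To make the evaluation criteria and the point about differing executive priorities concrete, here is a minimal Python sketch. Everything in it is a hypothetical illustration, not a method prescribed in this article: the `Project` fields, the three-year horizon, the probability-weighted ROI formula, the weighted `score`, and all dollar figures are assumptions. Payback here is simple and undiscounted.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Project:
    # Hypothetical fields for illustration; money is in $ millions.
    name: str
    investment: float      # up-front cost
    annual_benefit: float  # expected yearly net benefit once the project is live
    p_success: float       # estimated probability of success, 0..1
    risk: float            # subjective risk score, 0 (low) to 1 (high)


def payback_years(p: Project) -> float:
    """Simple, undiscounted payback: years of benefit needed to recover the investment."""
    return p.investment / p.annual_benefit


def expected_roi(p: Project, horizon_years: float = 3.0) -> float:
    """Probability-weighted ROI over an assumed fixed horizon."""
    expected_benefit = p.p_success * p.annual_benefit * horizon_years
    return (expected_benefit - p.investment) / p.investment


def score(p: Project, w_roi: float, w_success: float, w_risk: float) -> float:
    """Hypothetical weighted score; the weights encode how one executive trades return against risk."""
    return w_roi * expected_roi(p) + w_success * p.p_success - w_risk * p.risk


def rank(projects: List[Project], **weights: float) -> List[Project]:
    """Sort projects from best to worst under the given weights."""
    return sorted(projects, key=lambda p: score(p, **weights), reverse=True)


# Invented example portfolio.
portfolio = [
    Project("Predictive maintenance", investment=2.0, annual_benefit=5.0, p_success=0.5, risk=0.8),
    Project("Demand forecasting", investment=2.0, annual_benefit=2.0, p_success=0.9, risk=0.2),
]

roi_focused = dict(w_roi=1.0, w_success=0.0, w_risk=0.0)
risk_averse = dict(w_roi=0.2, w_success=1.0, w_risk=2.0)

print([p.name for p in rank(portfolio, **roi_focused)])  # ['Predictive maintenance', 'Demand forecasting']
print([p.name for p in rank(portfolio, **risk_averse)])  # ['Demand forecasting', 'Predictive maintenance']
```

Changing only the weights flips the ranking, which mirrors the CEOs who weight risk avoidance and probability of success more heavily than ROI. A real evaluation would likely discount cash flows and would also need to handle resource constraints and interdependencies, which this sketch ignores.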

How Detailed Should This Plan Be

It depends on where you are working in the organization. If you are working at the CEO level, the executive may direct you to present only opportunities with a value above a certain threshold, for example those likely to yield EBITDA improvements greater than $10 million.

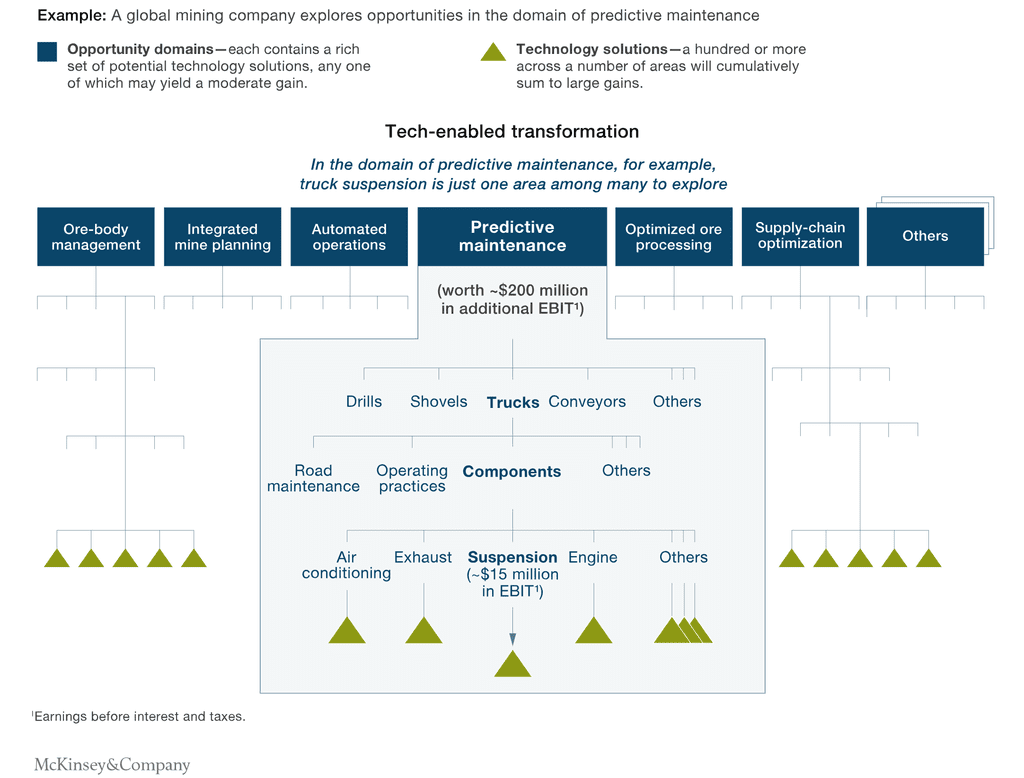

You will also need to decide whether this will be a bottom-up or top-down estimate. For example, your team may conclude that there are a large number of smaller projects relating to predictive maintenance and choose to make a single (well-supported) global estimate, which is the top-down approach.

Alternatively, given the time and resources, it may be easier to gain eventual buy-in from the affected groups if you build a plan for each of the largest predictive maintenance projects and then aggregate them. This bottom-up approach will yield better estimates and more cooperation later during implementation, but it is much more time- and resource-intensive.
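The difference between the two kinds of estimate, and the CEO-level threshold filter mentioned above, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the project list, the dollar figures, and the helper names `bottom_up`, `top_down`, and `above_threshold` are all hypothetical. "Top-down" is modeled as an assumed project count times an assumed average uplift, which is only one way to form a global estimate.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical per-project EBITDA uplift estimates in $ millions: (category, project, uplift)
project_estimates: List[Tuple[str, str, float]] = [
    ("Predictive maintenance", "Haul trucks, site A", 4.5),
    ("Predictive maintenance", "Crushers, site A", 3.0),
    ("Predictive maintenance", "Haul trucks, site B", 3.5),
    ("Inventory optimization", "Spare parts", 2.0),
]

EBITDA_THRESHOLD = 10.0  # $ millions; the example cutoff from the text


def bottom_up(estimates: List[Tuple[str, str, float]]) -> Dict[str, float]:
    """Sum individual project estimates into one total per opportunity category."""
    totals: Dict[str, float] = defaultdict(float)
    for category, _project, uplift in estimates:
        totals[category] += uplift
    return dict(totals)


def top_down(n_projects: int, avg_uplift: float) -> float:
    """Single global estimate: assumed project count times assumed average uplift."""
    return n_projects * avg_uplift


def above_threshold(totals: Dict[str, float], threshold: float = EBITDA_THRESHOLD) -> Dict[str, float]:
    """Keep only the opportunities large enough to present at the CEO level."""
    return {category: value for category, value in totals.items() if value > threshold}


totals = bottom_up(project_estimates)
print(totals)                   # {'Predictive maintenance': 11.0, 'Inventory optimization': 2.0}
print(above_threshold(totals))  # {'Predictive maintenance': 11.0}
print(top_down(24, 0.5))        # 12.0
```

The bottom-up total is only as good as the individual plans behind it, but each line item can be traced back to the group that has to deliver it, which is where the later buy-in comes from.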

The diagram below from McKinsey illustrates how a bottom-up estimate for preventive maintenance in a mining company might be assembled.

Ultimately, it’s up to you as the senior data scientist and Analytics Translator to create a Portfolio of Improvement Projects that executives can quickly understand and then use to select and prioritize the projects that make this great new fourth lever of change effective.

About the author: Bill Vorhies is Editorial Director for Data Science Central and has practiced as a data scientist since 2001. He can be reached at: