Summary: Researchers in synthetic neurobiology are proposing to solve the AGI problem by building a brain in the laboratory. This is not science fiction; they are nearly at the threshold of this capability. Increasingly, these researchers are presenting at major AGI conferences, and their argument is compelling.

If you step outside all the noise around AI, and the hundreds or even thousands of startups trying to add AI to your car, house, city, toaster, or dog, you can start to figure out where all this is going.

Here’s what I think we know:

- The narrow and pragmatic DNN approaches to speech, text, image, and video are getting better all the time. Transfer learning is making this a little easier.

- Reinforcement learning is coming along, as are GANs, and both will certainly help. Commercialization is a few years away.

- The next generation of neuromorphic (spiking) chips is just entering commercial production (BrainChip, EtaCompute), and these chips will dramatically reduce training data requirements, training time, size, and energy consumption. We also hope they will be able to transfer what they learn in one system to another.

But what about artificial general intelligence (AGI) that we all believe will be the end state of these efforts? When will we get AGI that brings fully human capabilities, learns like a human, and can adapt knowledge like a human?

How Far Away is AGI?

When I looked into this two years ago, the range of estimates was roughly 2025 to 2040.

With a few more years of experience under our belts, here are the estimates given by seven leading thinkers and investors in AI (including Ben Goertzel and Steve Jurvetson) at a 2017 conference on machine learning at the University of Toronto when asked, ‘How far away is AGI?’

- 5 years to subhuman capability

- 7 years

- 13 years maybe (By 2025 we’ll know if we can have it by 2030)

- 23 years (2040)

- 30 years (2047)

- 30 years

- 30 to 70 years

There’s significant disagreement, but the median estimate is 23 years (2040), with three of the seven panelists expecting it to take longer. It sounds like we’re learning that this may be harder than we thought.
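The median is easy to reproduce. The short Python sketch below takes the middle of the seven answers and counts how many fall above it. It assumes the open-ended "30 to 70 years" answer can be entered as its lower bound of 30. Because that answer is already the largest, entering 50 or 70 instead would not change the median. It also takes the "5 years to subhuman capability" answer at face value.

```python
from statistics import median

SURVEY_YEAR = 2017  # year of the University of Toronto panel

# Years-to-AGI answers from the seven panelists, in the order listed.
# Assumption: "30 to 70 years" is entered as its lower bound, 30.
estimates_years = [5, 7, 13, 23, 30, 30, 30]

median_years = median(estimates_years)  # odd count, so this is the 4th sorted value
longer = sum(1 for e in estimates_years if e > median_years)

print(f"median: {median_years} years -> {SURVEY_YEAR + median_years}")  # median: 23 years -> 2040
print(f"panelists expecting longer than the median: {longer} of {len(estimates_years)}")  # 3 of 7
```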

What’s the Most Likely Path to AGI?

Folks who think about this say that everything we’ve got today in DNNs and reinforcement learning is, by definition, ‘weak’ AI. That is, it mimics some elements of human cognition but doesn’t achieve them the way humans do.

This may or may not also be true of next-generation spiking neural nets, which have adopted some new elements from neuroscience research. They look like a step in the right direction, but we really don’t know yet.

There is general agreement that weak AI, while commercially valuable, will never give us AGI. Only if we create broad and strong AI systems that mimic human reasoning can we ever achieve AGI.

Is This All on the Same Incremental Pathway?

So far there have been two primary schools of thought.

The Top Down school is an extension of our current incremental engineering approach. Basically, it says that once all of these individual engineering problems are solved, the combined capabilities will in fact be AGI.

Those who disagree, however, say that truly human-like intelligence can never result from simply adding up a group of specific algorithms. Human intelligence cannot be reduced to the sum of mathematical parts, and neither can AGI.

The Bottom Up school is the realm of researchers who propose to build a silicon analogue of the entire human brain. They propose to build an all-purpose, generalized platform based on an exact simulation of human brain function. Once it’s available, it will immediately be able to do everything our current piecemeal approach has accomplished and much more.

Personally, my bet is on the Bottom Up school, though I think we are learning valuable hardware and software lessons along the way from our pragmatic DNN approach.

How About a Radical New Path

What I discovered in revisiting all this is that my own thinking has been too constrained. For example, in writing about third-generation spiking neural nets or the neuromorphic modeling approach of Jeff Hawkins at Numenta, I assumed that modeling the interactions of individual neurons in hardware and software was the agreed-upon approach.

Not only is this not true (I’ll write more about this later), but our fundamental assumption that the work must happen in silicon is also open to question: silicon is not the only approach being explored.

Folks like geneticist George Church at Harvard are proposing that we simply build a brain in the biology lab and train it to do what we want.

Computational Synthetic Biology (CSB) – Wetware

Computational Synthetic Biology (also known as synthetic neurobiology) is much further along than you think, and on a 25-year forward timeline it might just be the first horse to cross the AGI finish line.

As far back as 10 years ago, the field of Systems Biology sought to reduce molecular- and atomic-level cellular activities to ‘bio-bricks’ that could be strung together with different ‘operators’ to understand how these processes worked.

If Systems Biology is about understanding nature as it is, Computational Synthetic Biology takes the next step to understand nature as it could be.

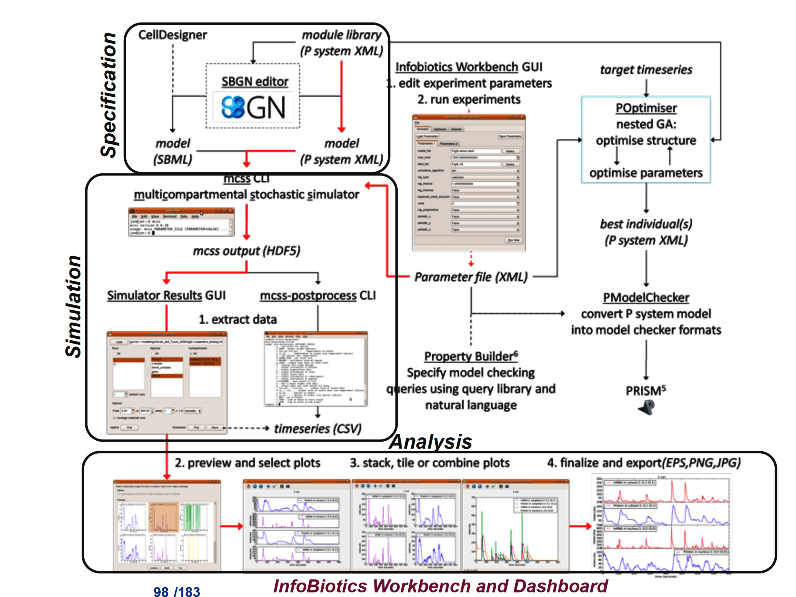

Here’s a graphic of the Infobiotics Workbench from 2010. Anyone familiar with predictive modeling will immediately recognize the similarities with a data analytics dashboard, including setting hyperparameters, loss functions, and comparing champion models.  Source: 2010 GECCO Conference Tutorial on Synthetic Biology by Natalio Krasnogor, University of Nottingham.

Fast forward to the 2018 O’Reilly Artificial Intelligence Conference in New York, where Harvard researcher George Church showed just how much further we’ve come. (See his original presentation here. The graphics that follow are from that presentation.)

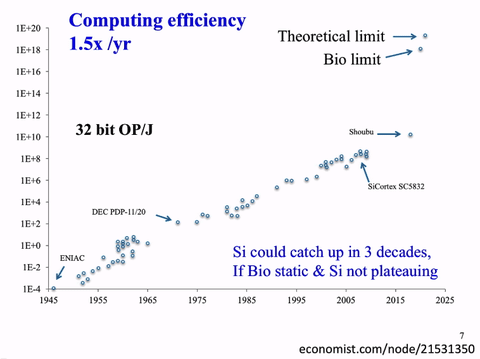

Synthetic neurobiology (SNB) is already ahead of silicon simulations in both energy efficiency (it uses far less power) and computational efficiency. Its capabilities are improving faster than Moore’s Law, in some years by a factor of 10.

In the upper right of the chart, SNB is already operating close to the biological limit of compute efficiency. Church says silicon could catch up in three decades at the current growth rate, except that silicon’s gains are already plateauing.

Church says “We are well on our way to reproducing every kind of structure in the brain”.

In the lab, he can already create all types of neurons to order and can build significant human cerebral cortex structures, complete with supporting vasculature. This includes myelin wrapping of the axons, which lets action potentials jump from node to node so that signals travel long distances at high speed without dissipating.

In short, SNB researchers hold that it’s easier to reproduce an unknown (brain function, human cognition, AGI) in a made-to-order biological brain than to translate it into a silicon simulation. With a silicon simulation, you’ll never really know whether it’s right.

To add one more level to these futuristic projections, could we modify or augment our current physiology to create superintelligence? Biological augmentation is already well underway, principally in curing disease. Why not extend it?

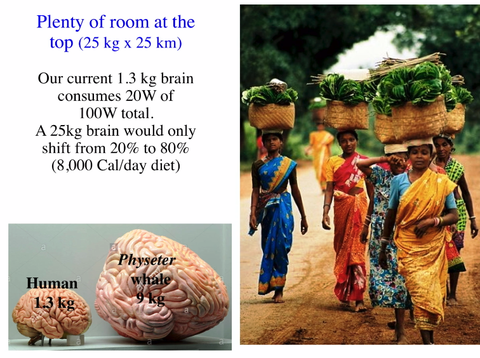

For example, the average human brain weighs 1.3 kg and consumes 20 W of power (compared with the 85,000 W Watson required to win Jeopardy). What if we could enlarge it to 25 kg, about three times the size of a whale’s brain but still within the physical limit of what our skeletons could support? It seems the only penalty would be having to supply about 100 W of power, equivalent to eating about 8,000 calories per day. Donuts, yumm.
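The energy figures can be checked with a quick back-of-envelope calculation. The Python sketch below assumes that power draw scales linearly with brain mass; the source does not state this. It uses the standard conversions of 4,184 J per dietary calorie (kcal) and 86,400 seconds per day. Under the linear-scaling assumption, a 25 kg brain would draw roughly 385 W, or about 7,940 kcal per day, which lines up with the 8,000-calorie figure. By the same conversion, 100 W on its own corresponds to only about 2,065 kcal per day.

```python
JOULES_PER_KCAL = 4184.0   # one dietary "calorie" is a kilocalorie
SECONDS_PER_DAY = 86_400

def watts_to_kcal_per_day(watts: float) -> float:
    """Convert a continuous power draw in watts to kcal consumed per day."""
    return watts * SECONDS_PER_DAY / JOULES_PER_KCAL

def kcal_per_day_to_watts(kcal: float) -> float:
    """Convert a daily food-energy intake in kcal to an average power in watts."""
    return kcal * JOULES_PER_KCAL / SECONDS_PER_DAY

BRAIN_MASS_KG = 1.3      # figures from the text
BRAIN_POWER_W = 20.0
ENLARGED_MASS_KG = 25.0

# Assumption: power draw scales linearly with brain mass.
scaled_power_w = BRAIN_POWER_W * ENLARGED_MASS_KG / BRAIN_MASS_KG

print(f"25 kg brain at linear scaling: {scaled_power_w:.0f} W "
      f"= {watts_to_kcal_per_day(scaled_power_w):,.0f} kcal/day")
print(f"100 W = {watts_to_kcal_per_day(100):,.0f} kcal/day")
print(f"8,000 kcal/day = {kcal_per_day_to_watts(8000):.0f} W")
```

Linear scaling is the simplest assumption, not a biological claim. Real metabolic scaling with brain size could differ, so treat these numbers as an order-of-magnitude check.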

Could Silicon-Based AGI Ever Be Completely Human-Like?

While silicon AGI modelers still struggle to understand brain function well enough to build their simulations, there are legitimate questions about whether their best result will ever be enough. Will such systems ever have the capabilities that make us philosophically human? Our science fiction robots have some or all of these qualities:

Consciousness: To have subjective experience and thought.

Self-awareness: To be aware of oneself as a separate individual, especially to be aware of one’s own thoughts and uniqueness.

Sentience: The ability to feel perceptions or emotions subjectively.

Sapience: The capacity for wisdom.

If we construct an intelligent ‘brain’ in the lab from the same biological components as a human brain, is it still just a simulation? Will it be a ‘mind’?

So far, computational synthetic biology research remains in the lab or, where it has matured, is being applied to curing disease. But increasingly, researchers like George Church are presenting at major AGI conferences. Their approach lets them skip the step where most silicon AGI researchers are currently stuck: creating a silicon analog of the brain. If there’s a 15- or 25-year runway to develop AGI, I wouldn’t bet against this wetware approach.

Other articles on AGI:

In Search of Artificial General Intelligence (AGI) (2017)

Artificial General Intelligence – The Holy Grail of AI (2016)

Other articles by Bill Vorhies.

About the author: Bill Vorhies is Editorial Director for Data Science Central and has practiced as a data scientist since 2001. He can be reached at: