This article was written by Dallin Akagi.

What is deep learning?

I like to use the following three-part definition as a baseline. Deep learning is:

- a collection of statistical machine learning techniques

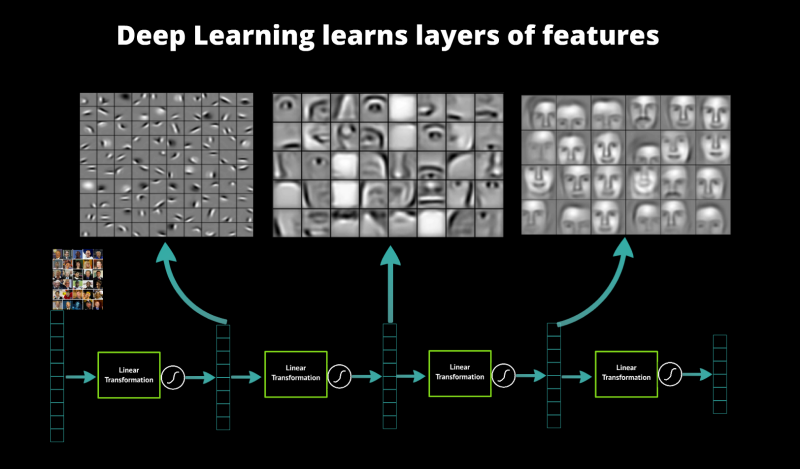

- used to learn feature hierarchies

- often based on artificial neural networks

That’s it. Not so scary after all. For something that sounds so innocuous under the hood, there is a lot of rumbling in the news about what might be done with deep learning (DL) in the future. Let’s start with an example of what has already been done, to show why it is proving interesting to so many.

Deep learning has been all over the news lately. In a presentation I gave at Boston Data Festival 2013 and at a recent PyData Boston meetup, I provided some history of the method and a sense of what it is being used for presently. This post aims to cover the first half of that presentation, focusing on the question of why we have been hearing so much about deep learning. The content is aimed at data scientists who might have heard a little about deep learning and are interested in a bit more context. Regardless of your background, hopefully you will see how deep learning might be relevant for you. At the very least, you should be able to separate the signal from the noise as the media hype around deep learning increases.

What does it do that couldn’t be done before?

We’ll first talk a bit about deep learning in the context of the 2013 Kaggle-hosted quest to save the whales. The competition asks its participants the following question: given a set of 2-second sound clips from buoys in the ocean, can you classify each sound clip as containing a call from a North Atlantic right whale or not? The practical application of the competition is that if we can detect where the whales are migrating by picking up their calls, we can route shipping traffic to avoid them, a positive both for efficient shipping and for whale preservation.

In a post-competition interview, the competition’s winners noted the value of focusing on feature generation, also called feature engineering. Data scientists spend a significant portion of their time, effort, and creativity engineering good features; in contrast, they spend relatively little time running machine learning algorithms. A simple example of an engineered feature would involve subtracting one column from another and including the result as an additional descriptor of your data. In the case of the whales, the winning team represented each sound clip in its spectrogram form and built features based on how well the spectrogram matched some example templates. They then iterated on new features that would help them correctly classify examples that the previous set of features had gotten wrong.
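
The two kinds of engineered feature mentioned above can be sketched in a few lines of Python. This is only an illustration, not the winning team's pipeline: the column names, the toy spectrogram, the template, and the choice of cosine similarity as the "how well does it match" score are all assumptions made for the example.

```python
import math

def add_difference_feature(records, col_a, col_b, new_col):
    """Return copies of the records with new_col = col_a - col_b added."""
    return [{**r, new_col: r[col_a] - r[col_b]} for r in records]

def template_match_score(spectrogram, template):
    """Slide the template over the spectrogram and return the best
    cosine similarity found (0.0 if every window is silent).

    Both arguments are 2-D lists of non-negative energies, indexed
    as [frequency][time]. Cosine similarity is an assumed stand-in
    for whatever matching score a real pipeline would use.
    """
    t_rows, t_cols = len(template), len(template[0])
    s_rows, s_cols = len(spectrogram), len(spectrogram[0])
    t_flat = [v for row in template for v in row]
    t_norm = math.sqrt(sum(v * v for v in t_flat))
    best = 0.0
    for i in range(s_rows - t_rows + 1):
        for j in range(s_cols - t_cols + 1):
            patch = [spectrogram[i + di][j + dj]
                     for di in range(t_rows) for dj in range(t_cols)]
            p_norm = math.sqrt(sum(v * v for v in patch))
            if p_norm == 0 or t_norm == 0:
                continue  # nothing to compare against in a silent window
            dot = sum(p * t for p, t in zip(patch, t_flat))
            best = max(best, dot / (p_norm * t_norm))
    return best

# Hypothetical tabular data: subtract one column from another.
rows = [
    {"high_band": 5.0, "low_band": 2.0},
    {"high_band": 1.5, "low_band": 4.0},
]
enriched = add_difference_feature(rows, "high_band", "low_band", "band_diff")

# Hypothetical 3x3 spectrogram that contains the 2x2 template exactly once.
template = [[0.0, 1.0],
            [1.0, 0.0]]
clip = [[0.0, 0.0, 0.0],
        [0.0, 0.0, 1.0],
        [0.0, 1.0, 0.0]]
score = template_match_score(clip, template)
```

Each template would contribute one such score per clip, and those scores become the columns a classifier is trained on. The human effort goes into choosing which templates and which differences are worth computing.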

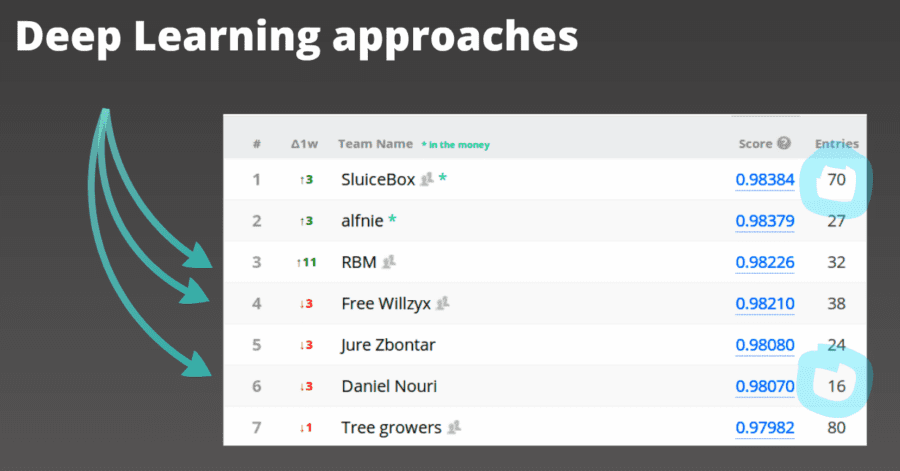

This is a look at the final standings for the competition. The results among the top contenders were pretty tight, and the winning team’s focus on feature engineering paid off. But how is it that several deep learning approaches could be so competitive while using as few as one-fourth as many submissions? One answer to that question arises from the unsupervised feature learning that deep learning can do. Rather than relying on data science experience, intuition, and trial and error, unsupervised feature learning techniques spend computational time automatically developing new ways of representing the data. The end goal is the same, but the experience along the way can be drastically different.
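
To make "spending computational time to develop a new representation" concrete, here is a minimal sketch. It uses k-means clustering, which is a simple unsupervised technique and not a deep network, purely as a stand-in: the data points, the number of centroids, and the function names are all hypothetical. No labels are used anywhere; the algorithm finds structure in the raw data and then re-describes each point in terms of that structure.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def learn_centroids(points, k, iterations=10):
    """Plain k-means with a deterministic start (k evenly spaced points)."""
    centroids = [list(points[i * len(points) // k]) for i in range(k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k),
                          key=lambda c: squared_distance(p, centroids[c]))
            clusters[nearest].append(p)
        for c, members in enumerate(clusters):
            if members:  # keep the old centroid if a cluster is empty
                centroids[c] = [sum(dim) / len(members)
                                for dim in zip(*members)]
    return centroids

def encode_point(point, centroids):
    """Learned representation: squared distance to each centroid."""
    return [squared_distance(point, c) for c in centroids]

# Hypothetical unlabeled data forming two loose groups.
unlabeled = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0),
             (10.0, 10.0), (10.0, 11.0), (11.0, 10.0)]
centroids = learn_centroids(unlabeled, k=2)

# A new point is now described by learned features, not raw coordinates.
features = encode_point((0.5, 0.5), centroids)
```

Deep learning methods go further by stacking several such learned representations on top of one another, but the division of labor is the same: the computer searches for the representation, and the data scientist decides what to feed it.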

This is not to say that ‘deep learning’ and ‘unsupervised learning’ are necessarily the same concept. There are unsupervised learning techniques that have nothing to do with neural networks at all, and you can certainly use neural networks for supervised learning tasks. The takeaway is that deep learning excels in tasks where the basic unit (a single pixel, a single frequency, or a single word) has very little meaning in and of itself, but the combination of such units has a useful meaning. It can learn these useful combinations of values without a human specifying them by hand. The canonical example used when discussing deep learning’s ability to learn from data is the MNIST dataset of handwritten digits.

