Structured Query Language (SQL) analysis is key to extracting meaningful insights from heaps of raw data. This method is prevalent in many organizations across the globe, enabling professionals to do their best work. Although SQL was first introduced as a language for interacting with databases over 50 years ago, it remains a staple of data analytics, with the majority of databases in production today supporting SQL queries.

While SQL is the go-to language for professionals to pull slices of information from databases, it’s hardly accessible to line-of-business decision-makers and “citizen data scientists” who require code-free, menu-based interfaces. What’s more, these people might not know the best way to turn their business questions into cut-and-dry queries, preferring more of an iterative, discovery-based analysis process.

That’s why so many data science teams are keen to integrate their SQL databases with Large Language Models (LLMs). Using an AI chatbot powered by an LLM, users can directly pose a question in plain English, and the model will convert it into an SQL query, run it to fetch the required information, and present it neatly. This streamlines the whole process, although it does raise concerns about data privacy pitfalls.

Let’s look at how enterprises can safely leverage these powerful models to remove friction from self-service data analytics workflows.

Understanding the risks of directly connecting LLMs to databases

While it may seem that directly hooking up an LLM with your company database is efficient, there are plenty of inherent and potential downsides.

The biggest challenge is to ensure the data security and privacy of the stakeholders. LLMs generally process data on cloud servers, which may not comply with regulations like CCPA, GDPR, or HIPAA, which is crucial for enterprises handling enterprise data. Moreover, leveraging proprietary generative AI models can be even riskier. Additionally, practically all LLM providers employ user queries to train their models, which raises the possibility of a data breach, which has regrettably happened.

“In that regard, the privacy risks are extreme, in my opinion,” explains Avi Perez, CTO and co-founder of Pyramid Analytics. “Because you’re effectively sharing your top-secret corporate information that is completely private and frankly, let’s say, offline, and you’re sending it to a public service that hosts the chatbot and asking it to analyze it. And that opens up the business to all kinds of issues – anywhere from someone sniffing the question on the receiving end, to the vendor that hosts the AI LLM capturing that question with the hints of data inside it, or the data sets inside it, all the way through to questions about the quality of the LLM’s mathematical or analytical responses to data.”

Unauthorized access is another potential issue. Balaji Ganesan, CEO and co-founder of Privacera recognizes the vulnerability this brings to the table and advises teams to “implement strict access controls to limit who can retrieve and interact with the AI-generated content, ensuring that only authorized individuals can access sensitive data.”

The final threat is unintentional query execution. AI-powered chatbots write SQL queries based on prompts. Inadequate quick construction or incorrect interpretation by the LLM may result in data deletion or excessively performance-intensive inquiries that raise processing expenses.

Establishing a layer that isolates the LLM from the actual SQL analysis will help to prevent all of the aforementioned issues and their possible repercussions.

Methods to safely leverage LLMs for SQL analysis

The following methods eliminate the chances of data misuse, leaks, and corruption while enabling teams to take advantage of LLMs in their analytics processes.

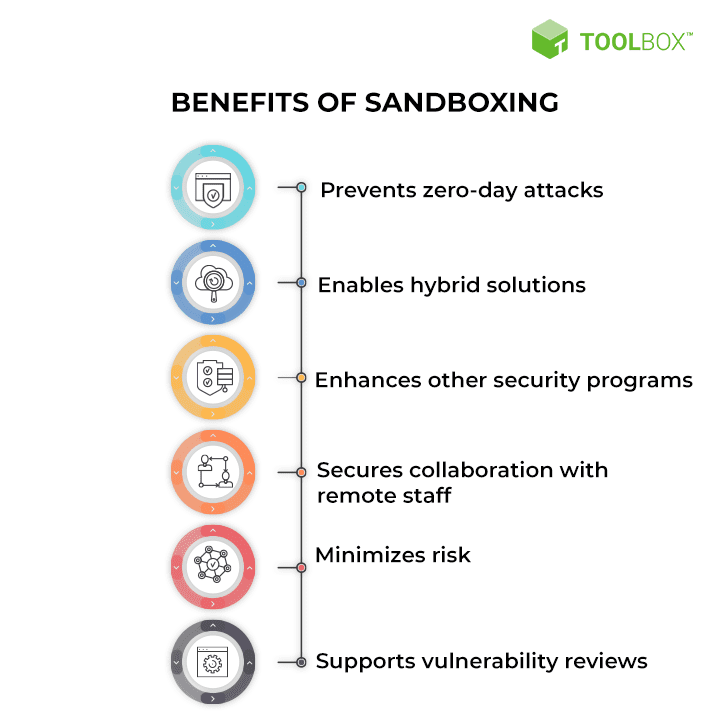

1. Implement sandboxing

In this context, sandboxing is the process of creating a controlled environment where LLMs run SQL queries on a sample or synthetic database. This set-up acts as a replica, mimicking a real-world scenario while the live database remains in isolation.

It is an effective strategy to generate, test, and validate SQL queries without running any risks of data manhandling or compliance issues. Additionally, you can also get accustomed to the process of using LLMs for analysis and fine-tune your own model as needed.

Sandboxing is safe, as it confines the errors or issues to the isolated environment. Even if the data gets modified or accessed by unauthorized personnel, the actual risks are slim to none.

Although it is great for innovation, this approach can get hectic as teams need to regularly update the database in the sandbox. Moreover, as a technique, it isn’t viable for businesses that need real-time analytics.

2. Use unconnected query translators

Query translators, as the name suggests, convert a natural language prompt or description into an SQL statement. The resulting queries can be reviewed and executed in a secure environment to extract insights.

These tools or workflows aren’t connected to the live database. The LLM interacts with the user only and generates queries based on their request. You can fine-tune LLMs to perform well for your specific use cases.

Disconnected query translators enable real-time analysis as humans can execute and review generated queries directly in live databases.

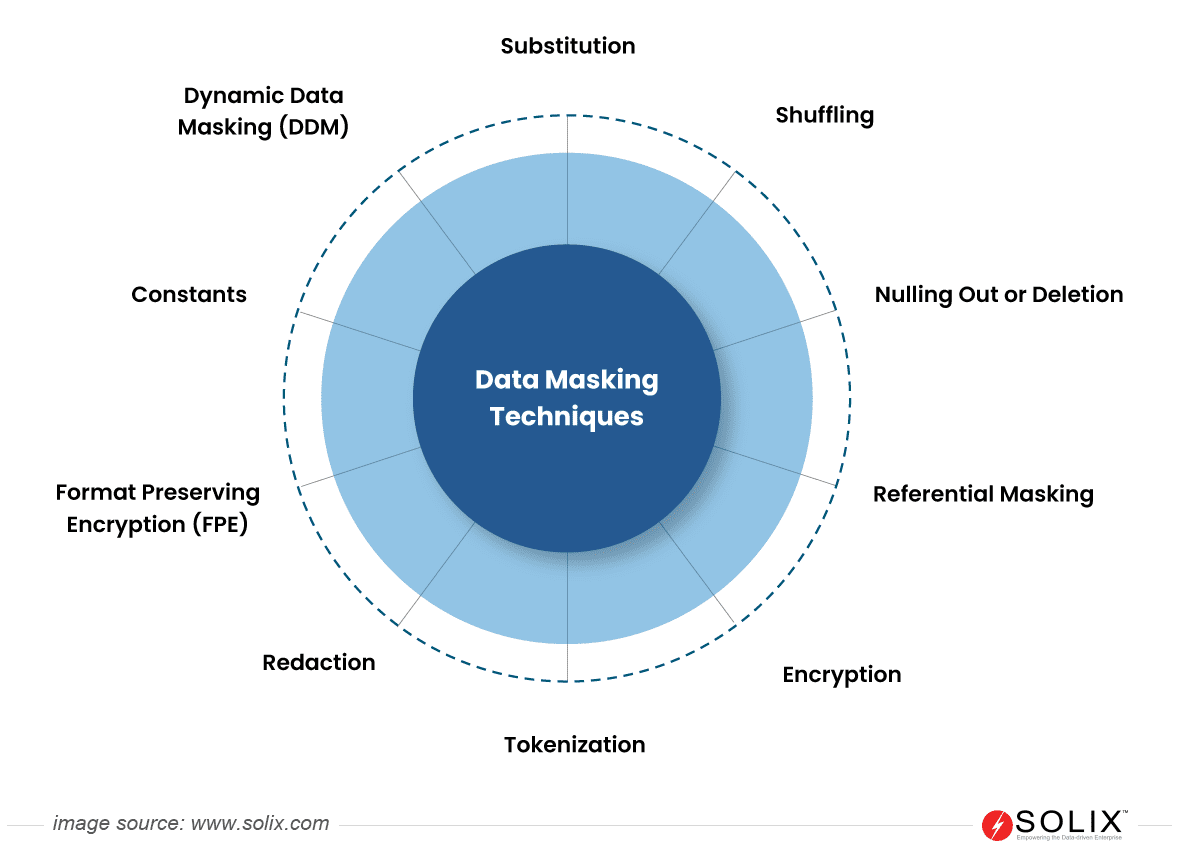

3. Opt for an architecture that hides data

With this tactic, you can adopt anonymized, aggregated, placeholder, or synthetic data instead of the real one. The alternate data source should mimic the structure and pattern of real datasets to ensure accuracy.

Various masking techniques, including encryption and tokenization, hide sensitive variables while preserving the fundamental schema and structure. The dataset links to the LLM for trend analysis, allowing queries to run on the live dataset after passing validation.

You can also set up an additional layer that only shares your data indexes, semantics, and meta-tags with the LLM, without sharing any of the actual database values. The LLM returns a series of prompts for you to validate by running on your real data. This method ensures seamless data migration and secure analysis when transitioning workflows from SQL Server to BigQuery.

Business intelligence software platforms have implemented this architecture in the backend so that end users can use chatbots to set up dashboards and charts based on their actual databases seamlessly.

This approach of leveraging LLMs for SQL analysis ensures efficiency while eliminating the risk of data breaches and regulatory non-compliance. The methodology ensures that nothing sensitive spills by preventing the tracing of original data points.

Wrapping up

Using LLMs to conduct SQL analysis democratizes data throughout the organization while increasing efficiency. Teams can crunch heaps of data to uncover actionable insights without needing specialized training or assistance.

However, it is equally crucial to protect the integrity of data to uphold stakeholder confidence and ensure compliance. To do that, avoid connecting LLMs directly to live databases to protect the latter from various vulnerabilities.

Businesses and enterprises can leverage sandboxing, unconnected query translators, and architectures that hide data to do the same. These strategies, although require a few additional intermittent steps, help teams innovate without compromise while remaining safe.

Hi Pritesh Patel,

I enjoyed reading your post of handling pitfalls when integrating LLM in creating relational database insights. Your ideas focus on having AI to read human-text and generate such requests into SQL queries, then having there SQL queries executed on a mimicked database or data masked database. For your sandbox integration, is there any complications regarding security that the users need to consider when having a part of the live database set up in sand box?