AI isn’t just the next big thing; it’s here now, and whoever isn’t using it is missing out.

Our clients often ask about AI tools in our development process, but finding a truly valuable approach hasn’t been simple. Here’s how my team and I integrated AI into quality assurance and what we learned along the way.

How the investigation started

Amid all the AI hype, our QA team at NIX received a question from sales: “What AI tools are you using? Clients are asking.” As we discussed this further, it became clear that, if done right, introducing AI could mean increased productivity, time and cost savings, and added quality gates in our process, all benefits we wanted.

Categorizing AI tools

After researching AI for QA, we divided modern tools into three categories:

- Existing tools with AI features added on the hype wave: These ranged from adequate to great, but most added AI as a gimmick because “everyone wants AI.” The genuinely useful AI features were minimal, like generating user avatars or spell-checking test cases, and the tools were often too costly for the benefits they provided.
- New AI-based products: These tools aimed to be “intelligent” but lacked polish. User interfaces were clunky, bugs abounded outside the “happy path,” and some ideas didn’t work as intended. Still, they showed hints of what we imagined for QA’s future, maybe in three to five years.
- False advertising: Some tools promised to test entire applications and eliminate all bugs in complex systems. These were clearly cash grabs, yet their sheer number was surprising.

What actually works?

Despite the hype, a few genuinely good features emerged. Some tools auto-generated tests for real applications and documented test cases. Others created test cases from feature descriptions, repaired broken automated tests, or made testing recommendations based on existing test cases. But not all of these tools were ready for commercial-scale projects. Some lacked the full feature set, others demanded too much access (a security risk), and many couldn’t handle scaling.

Summary of our findings

In our evaluation, none of the AI-based tools met all our requirements for commercial use on large projects. They were incomplete, carried significant security risks, or didn’t scale well. So, while AI in testing holds potential, the current tools didn’t meet our standards.

Exploring known AI tools

From the outset, we had excluded major tools like ChatGPT, Google’s Gemini, and GitHub Copilot from the evaluation because we already used them in various ways. But after realizing there were few viable purpose-built AI tools, we turned back to these options to see how we could use them more systematically in QA.

Understanding real use cases

Our first step was to survey our QA engineers (about 400 in total) on how they were already using AI. Roughly half were using AI for:

- Test Automation Assistance: Speeding up test writing and debugging.
- Brainstorming: Generating ideas, including test cases.
- Data Generation: Creating test data for varied scenarios.
- Proofreading: Checking emails and documentation.
- Routine Automation: Automating repetitive QA tasks.

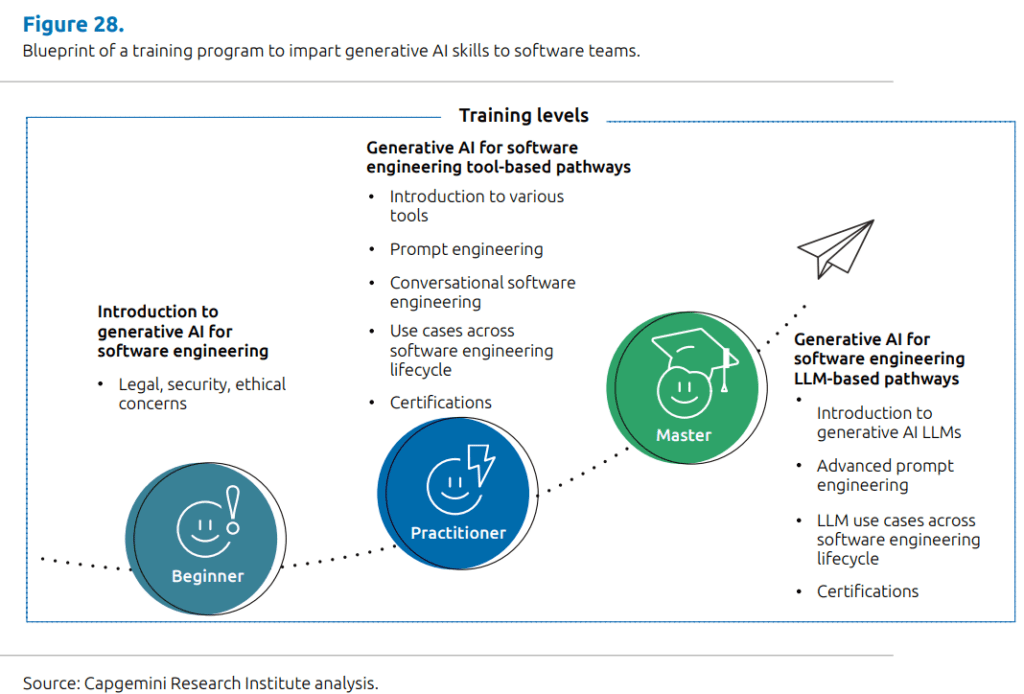

With these insights, we designed an in-house course for our QA team on using generative AI effectively, covering essential skills and tools that could benefit our clients.

The overall contents of our course look like this:

Now, as engineers across the company learn, they are also starting to come up with new use cases and improvements to the existing ones.

How profitable is it?

The impact has been positive. Mid- to senior-level manual test engineers now spend about 20% less time on test generation and documentation. AI also lets us quickly generate multiple test frameworks to see which works best, something that used to take days. And it lets solo engineers get code reviews, which adds value on smaller teams.

The downside

AI isn’t flawless. It’s a helpful assistant, but its output needs double-checking. For junior engineers, AI can be tricky, as they may struggle to recognize when it makes mistakes. Just like a human assistant, AI can enhance an experienced engineer’s productivity but doesn’t replace expertise.

Why companies get it wrong

Many companies still misunderstand AI. Tempted by exaggerated claims, they try to incorporate AI tools that don’t deliver as promised, resulting in frustration and poor outcomes.

Our experience led to a few important takeaways:

- AI isn’t a miracle worker: It won’t double or triple productivity overnight, but it can create steady gains as people grow familiar with it.
- Tailored implementation is crucial: For AI to be useful, it needs to be adapted to the specific needs of the company. Following the hype isn’t enough.
- AI doesn’t empower juniors to work like seniors: It’s useful for experienced engineers but can overwhelm juniors, who may struggle to distinguish between AI’s helpful and erroneous outputs.
- Purpose-built AI tools for QA aren’t ready yet: While there are promising tools, they don’t yet add as much value as hoped compared to traditional non-AI tools for similar purposes.

AI continues to evolve, and while it’s changing some workflows, quality assurance remains a complex process requiring human oversight. For now, AI serves as an assistant rather than a replacement, but as it matures, we’re optimistic about its potential.

About the author

I’m Serhii Mohylevskyi, QA Practice Leader at NIX. For over a decade, I’ve worked across manual, automated, and performance testing. My role includes identifying tech shifts and upgrading our QA standards, which impact hundreds of projects across the company.