In 1993, I was at a conference in Seattle where I stumbled onto someone who was showing this new application called the Mosaic WWW Browser. At the time, I’d been doing programming work in the burgeoning gaming field after having spent some time working in publishing and advertising. The demo was, to be honest, not all that exciting – the fonts were fixed, the layout was primitive, the images were shown separately. They had yet to introduce even the most basic of form elements, and there was neither a scripting language nor stylesheets. I’d like to say that I saw this and thought to myself: “I really need to get involved with this!” But we had a game deadline coming up, and I didn’t get around to downloading Mosaic until late 1994, back when Web 1.0 was still mainly CGI gateways – though later on I did end up writing one of the first JavaScript apps for Microsoft.

Web 2.0 came about a decade later. I’d been working with XML from 1997 onward (and had begun communicating with the W3C at the time, though I wasn’t yet a member). The opportunity came up to be the editor for xml.com, which had just been purchased by O’Reilly, and for much of the decade, I covered XML-based technologies. I’d also been blogging fairly regularly by then, around when Tim O’Reilly observed that the web had become read-writeable. With JavaScript finally maturing, with CSS becoming much more sophisticated, and with XML looking at that point like it would be the foundation of a new era, Web 2.0 became the cool term for a short while, before mobile tech sucked up all the oxygen. Still, 2004 was a pretty good year to put a flag in the ground and say “Whoozaah! It’s Web 2.0!!”

Since then, JSON has eclipsed XML as the dominant data format (I’d argue that XML is actually far from dead, but that’s fodder for another rant), the Semantic Web got off to a rocky start, and then we had Big Data, Data Science, Machine Learning, and Deep Learning, and suddenly you could only get a job in the computer field if you had a Ph.D. from a major university (which may be one reason why there suddenly aren’t enough programmers out there to fill all the requisite jobs).

Around this time, we also saw the emergence of an impressive technology called blockchain, and Silicon Valley programmers who were in the know became overnight gazillionaires by speculating in virtual currencies. After Bitcoin climbed to nosebleed heights, crashed, went to even greater heights, and collapsed again, the money chase was on: the future of everything would be Bitcoin this and Ethereum that, and programmers would give the finger to the Man (i.e., the banks, the government, the gnomes of Zurich, the Illuminati, you know, Them!). Recently, more than a trillion dollars in cryptocurrency market value reportedly evaporated in a matter of weeks, and while the stock market stumbled a bit, it picked itself up, dusted itself off, and went off on its merry way.

Now, this same group of electronic coin speculators is claiming that blockchain and decentralized finance (or DeFi, as the cool kids are now calling it) constitute Web 3.0, partially in a bid to legitimize what has become a rather impressive Ponzi scheme. Is it Web 3.0? Not that it really matters, but no, I don’t think it is. We have been doing eCommerce since the late 1990s (who remembers Pets.com?). The players that truly deal with finance have been moving away from paper and coin currency for some time now and were in fact behind the push toward making HTTP secure (HTTPS) so that they could engender trust in their transactions. HTTPS isn’t perfect, but it’s a damn sight better than what came before.

There’s also nothing wrong with distributed ledger technology, save that you have to ensure some form of immutability in that tech, something that’s very difficult to do in a virtual world. This is where trust comes in. You need it, and most trustless systems are going to fail because trust is a human attribute, not a computable one. Indeed, proof of work relies on puzzles that are deliberately expensive to solve but cheap to verify, and it is in fact a fairly weak deterrent, especially as quantum computers advance toward the point where they may be able to crack today’s encryption keys within a manageable window of time. At that point, what you want are quantum systems generating rolling encryption keys. This suggests that proof-of-work blockchains have at best another eight to ten years before they become obsolete.
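To make the “expensive to solve, cheap to verify” asymmetry concrete, here is a minimal hashcash-style sketch. It is a simplification, not Bitcoin’s actual scheme: Bitcoin compares a double SHA-256 of the block header against a numeric target, whereas this toy counts leading zero hex digits. The function names, the `"block:nonce"` string format and the difficulty values are illustrative assumptions.

```python
import hashlib


def sha256_hex(text: str) -> str:
    """Return the hex SHA-256 digest of a UTF-8 string."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def proof_of_work(block_data: str, difficulty: int) -> tuple[int, str]:
    """Try nonces 0, 1, 2, ... until the digest starts with `difficulty` zero hex digits.

    Each extra digit of difficulty multiplies the expected work by roughly 16.
    """
    target_prefix = "0" * difficulty
    nonce = 0
    while True:
        digest = sha256_hex(f"{block_data}:{nonce}")
        if digest.startswith(target_prefix):
            return nonce, digest
        nonce += 1


def verify(block_data: str, nonce: int, difficulty: int) -> bool:
    """Checking a claimed solution costs a single hash, however long the search took."""
    return sha256_hex(f"{block_data}:{nonce}").startswith("0" * difficulty)


if __name__ == "__main__":
    nonce, digest = proof_of_work("example block", difficulty=4)
    print(f"nonce={nonce} digest={digest}")
    print(verify("example block", nonce, 4))
```

The search loop may run tens of thousands of hashes, while `verify` runs exactly one; that gap is the whole deterrent, and it is only as strong as the hardware gap between honest participants and attackers.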

Now, there are several things on the horizon that I do believe deserve the title of Web 3.0. In this week’s stories, I talk about Solid, a specification developed within a W3C Community Group for networks of coordinated data pods. Solid addresses several needs, including data privacy, distributed (or decentralized) data, and federated query, and it is slated to go live in 2022. Immutable pods would actually make fantastic decentralized ledgers, both because they are designed to work with trusted and trustless networks and because they abstract out these protocols in order to adapt to new kinds of encryption. Similar efforts are underway right now with Decentralized Identifiers and Verifiable Credentials, both of which are essential for supporting any kind of authentic network. Finally, pods are containers for graphs, so they can support both linked-list-style blockchains and more sophisticated data topologies, with far more room for data than the parsimonious blockchain standard allows.
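To illustrate the point that a graph container can hold a linked-list-style chain, here is a minimal sketch that stores a hash-linked chain as plain subject-predicate-object triples. It assumes a pod is nothing more than a list of triples; the `EX` namespace, the predicate names and the helper functions are hypothetical, not part of the Solid specification or any pod API. There is no consensus or proof of work here, only tamper-evident linking.

```python
import hashlib

# Hypothetical namespace for illustration only; not a Solid or standard vocabulary.
EX = "https://example.org/ledger#"
GENESIS = "0" * 64  # marker for "no previous block"

Triple = tuple[str, str, str]


def block_hash(payload: str, previous: str) -> str:
    """Hash a block's payload together with the previous block's hash."""
    return hashlib.sha256(f"{previous}|{payload}".encode("utf-8")).hexdigest()


def append_block(graph: list[Triple], payload: str, previous: str) -> str:
    """Add one block to the graph as three triples and return its hash."""
    digest = block_hash(payload, previous)
    subject = f"{EX}block/{digest}"
    graph.append((subject, EX + "payload", payload))
    graph.append((subject, EX + "previousHash", previous))
    graph.append((subject, EX + "hash", digest))
    return digest


def verify_chain(graph: list[Triple], head: str) -> bool:
    """Walk back from `head`, recomputing each hash until reaching the genesis marker."""
    props: dict[str, dict[str, str]] = {}
    for s, p, o in graph:
        props.setdefault(s, {})[p] = o
    current = head
    while current != GENESIS:
        block = props.get(f"{EX}block/{current}")
        if block is None:
            return False
        payload = block.get(EX + "payload")
        previous = block.get(EX + "previousHash")
        if payload is None or previous is None or block_hash(payload, previous) != current:
            return False
        current = previous
    return True


if __name__ == "__main__":
    graph: list[Triple] = []
    h1 = append_block(graph, "alice pays bob 5", GENESIS)
    h2 = append_block(graph, "bob pays carol 2", h1)
    print(verify_chain(graph, h2))
```

Because the chain is just more triples, the same graph could also carry metadata, links to credentials, or branching structures that a strict linked list of blocks cannot express.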

The second aspect of Web 3.0 is the infrastructure necessary to support entity virtualization, which comes into play with digital twins, augmented reality, gaming, and media. Again, this is something of a stack – you need the ability to support two-dimensional, two-and-a-half-dimensional, and three-dimensional structures, which implies GPUs pretty much as a minimum configuration, and you need consistent standard protocols for describing objects in all three spaces (a 2 1/2D space is one where you have projected 3D sprites in a scrollable 2D world). You need bandwidth support for high-speed video and audio, and you need the ability to do this asynchronously.

The third aspect is perhaps the most subtle but is also very important. Web 3.0 is ultimately about data interchange, which means RDF. At this point some of you, gentle readers, are likely saying that this is ridiculous; after all, JSON is the dominant language for data interchange on the web. The problem is that JSON, in and of itself, has no sense of context, and shared data requires the ability to share context. This is not to say that JSON-LD couldn’t be used; JSON-LD is, after all, a serialization of RDF. It just means that ad hoc JSON is insufficient for the task. It may also mean that Turtle gets its moment to shine, but that’s less important here. That such data should be asynchronous, immutable, and declarative should go without saying, but unfortunately, it does need to be said.
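A small example helps show what “context” buys you. Two plain JSON documents can both use the key `name` for entirely different things, and nothing in the JSON says which is which. The toy function below mimics the core idea of JSON-LD expansion: an `@context` maps short keys to full IRIs. It is deliberately simplified and handles only a flat term-to-IRI mapping; the real JSON-LD expansion algorithm also deals with `@vocab`, `@type`, nesting and value objects. The `example.org` vocabulary is a hypothetical stand-in.

```python
def expand_terms(doc: dict) -> dict:
    """Toy expansion: replace each key with the IRI its @context maps it to.

    Keys with no mapping are left as-is, and @context itself is dropped.
    """
    context = doc.get("@context", {})
    return {context.get(key, key): value for key, value in doc.items() if key != "@context"}


person = {
    "@context": {"name": "https://schema.org/name"},
    "name": "Ada Lovelace",
}
pet = {
    "@context": {"name": "https://example.org/vocab#petName"},  # hypothetical vocabulary
    "name": "Rex",
}

print(expand_terms(person))  # {'https://schema.org/name': 'Ada Lovelace'}
print(expand_terms(pet))     # {'https://example.org/vocab#petName': 'Rex'}
```

After expansion, the two `name` properties are globally distinct identifiers that a consumer can reason about, which is exactly what ad hoc JSON cannot provide on its own.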

All that being said, from the above description it would seem that the Metaverse and Web 3.0 are indistinguishable. They aren’t. The Metaverse is a social construct, but it requires Web 3.0 as its operating system. Just as with the first web, Web 3.0 will likely be unevenly distributed, though I believe it will evolve as extensions to the browser rather than as stand-alone apps. Why? Because the browser has evolved into a generalized platform, is ubiquitous, and at its core is remarkably sophisticated. It’s an addressing mechanism ideally suited for retrieving content both synchronously and asynchronously, and changes to it can generally be rolled out via continuous integration. Now, a 3D environment could be rendered in any number of ways (I’m not sure that Web3D will be the approach, but it’s not hard to see it as an antecedent), but the point remains that in five years’ time, we may hit a sufficient critical mass of GPUs to make such shared environments feasible.

There is also, ultimately, the question of whether such a world is declarative or imperative (or both).

So, does it matter whether we call blockchain+ Web 3.0? I think it does. The web has worked best when there was broad agreement (after much experimentation) about a number of different interrelated technologies, and the move from the Read-Write Web to the Spatial Web is, in my opinion, evolutionary in nature, not revolutionary. It’s still going to take a while – in part because right now everyone is heading off in different directions, trying to claim as large a slice of a walled-garden model as they can for fear of losing a competitive edge. So long as this state of affairs holds, there will be no Web 3.0. Instead, we’ll just be dealing with everyone’s failed Second Life clones.

Data Science Central Editorial Calendar

DSC is looking for editorial content specifically in these areas for March, with these topics having higher priority than other incoming articles.

- AI-Enabled Hardware

- Knowledge Graphs

- Metaverse

- JavaScript and AI

- GANs and Simulations

- ML in Weather Forecasting

- UI, UX and AI

- GNNs and LNNs

- Digital Twins

DSC Featured Articles

- Agent IRIS Sergey Lukyanchikov on 01 Feb 2022

- Data Literacy Education Framework – Part 2 Bill Schmarzo on 01 Feb 2022

- Divergent thinking and true AI innovation Alan Morrison on 01 Feb 2022

- How Solid Pods May End Up Becoming the Building Blocks of the Metaverse Kurt Cagle on 31 Jan 2022

- There’s Trouble Brewing with Smart Contracts Stephanie Glen on 31 Jan 2022

- How Mobile Point of Sale Terminals Are Evolving PragatiPa on 31 Jan 2022

- Insights from workshop on Bayesian deep learning at neurips 21 ajitjaokar on 31 Jan 2022

- Environmental Sustainability of Assets emerges as Key Pivot for Uptake of IoT Solutions Nikita Godse on 31 Jan 2022

- Big data engineering – A complete blend of big data analytics and data science Aileen Scott on 31 Jan 2022

- The Piano Keyboard as a Contextual Computing Metaphor Alan Morrison on 31 Jan 2022

- Cloud Data Platforms and the Too-Much-of-a-Good-Thing Effect Sameer Narkhede on 29 Jan 2022

- Common Reasons Why Organizations Fail to Implement SAP SuccessFactors Eric Smith on 27 Jan 2022

- Benefits of Using Kafka to Handle Real-time Data Streams Sameer Narkhede on 27 Jan 2022

- DSC Weekly Digest for 25, 2022: They’re Coming To Take My Job Away! Kurt Cagle on 26 Jan 2022

- Is the GPU the new CPU? Kurt Cagle on 26 Jan 2022

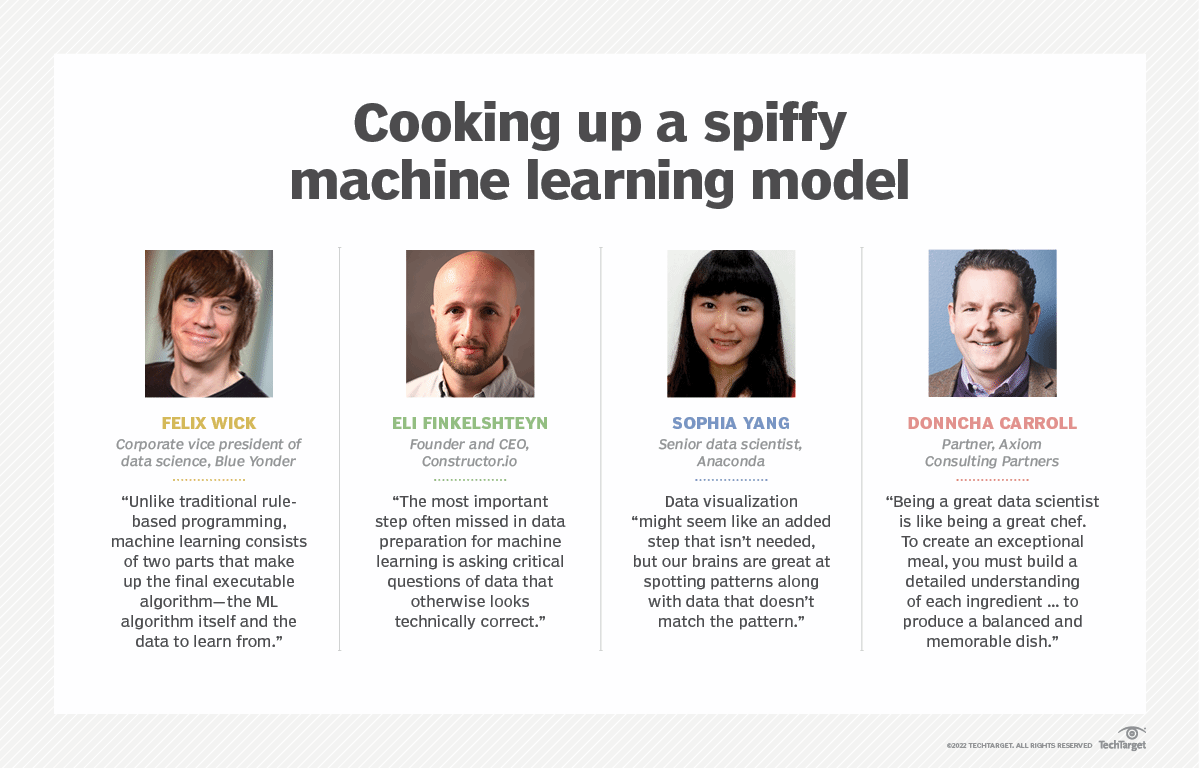

Picture of the Week

Cooking up a spiffy machine learning model