The history of F1 motor racing and of telemetry as a way to monitor car setup and performance dates back to the 1980s. The first electronic systems were installed onboard the car and collected information for only one lap. The data were downloaded once the car was back in the garage. The explosion of computing power in the 1990s drove the growth of intelligent data usage in F1, along with the need to collect data from the car at a much higher frequency. Nowadays each car carries from 150 to 300 sensors that transmit data in real time (www.mclaren.com). Data acquisition is so detailed and accurate that the FIA sets rules limiting its use, to preserve the unpredictability of racing. An example? No data can be transmitted to a driver during the race. Onboard sensors usually monitor speed, tire temperatures and wear, engine conditions, brake temperatures, fuel in the tank, water levels, and so on. These data are also combined with weather conditions, track information, the drivers’ state of health and GPS (“F1 telemetry: The data race”, Nicolas Carpentiers, 2016). Analysts integrate this large volume of data from different sources with scalable machine learning algorithms to produce predictive and prescriptive intelligence.

As a use case, our team modeled the telemetry data produced by F1 2017 by Codemasters for PlayStation 4. The game data, called UDP telemetry, are available as raw data from the PS4 and can be sent to other devices connected to the console or ingested by servers. The data are very similar in nature to those produced by a real Formula 1 car, even though the game does not aim to be a simulator and is closer to an arcade game.
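Because the game pushes telemetry as UDP datagrams, any machine that can open a UDP socket can receive it. The following is a minimal Python receiver, not our platform's ingestion code. The port 20777 and the assumption that a packet begins with a run of little-endian 32-bit floats are both assumptions to check against the game's telemetry settings and its UDP specification. `receive_packets` and `decode_leading_floats` are hypothetical helper names.

```python
import socket
import struct

# Assumption: 20777 is the port commonly used by Codemasters games for UDP
# telemetry; check the in-game telemetry settings for the actual value.
DEFAULT_PORT = 20777


def decode_leading_floats(datagram: bytes, count: int) -> tuple:
    """Decode the first `count` little-endian 32-bit floats of a datagram.

    Hypothetical layout: this assumes the packet starts with `count` floats.
    The real field order must be taken from the game's UDP specification.
    """
    needed = 4 * count
    if len(datagram) < needed:
        raise ValueError(f"datagram has {len(datagram)} bytes, need {needed}")
    return struct.unpack_from(f"<{count}f", datagram, 0)


def receive_packets(port: int = DEFAULT_PORT, max_packets: int = 10, timeout: float = 5.0):
    """Yield up to `max_packets` raw datagrams sent to this machine.

    Raises socket.timeout if no packet arrives within `timeout` seconds.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind(("0.0.0.0", port))  # listen on all local interfaces
        sock.settimeout(timeout)
        for _ in range(max_packets):
            data, _addr = sock.recvfrom(65535)  # maximum UDP payload size
            yield data
```

For example, `for packet in receive_packets(max_packets=3): print(decode_leading_floats(packet, 4))` prints the first four floats of three packets, provided the console is sending to this machine on that port.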

Telemetry has been integrated and streamed in real time with our Streaming Platform. Analytics are shown in a dashboard that streams telemetry data while the player is driving laps. In addition, a machine learning model scores the incoming data, and its predictions are also delivered in real time inside the Platform.
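The internals of the Streaming Platform are not described here, so this is only a generic, hypothetical Python sketch of the flow: telemetry events come in, a model scores each one as it arrives, and the enriched event is pushed to a dashboard. `score_stream`, `publish` and the `pc1`, `pc2`, ... field names are illustrative, not platform APIs.

```python
from typing import Callable, Dict, Iterable, Iterator, List

Event = Dict[str, float]


def score_stream(events: Iterable[Event],
                 scorer: Callable[[Event], List[float]]) -> Iterator[Event]:
    """Attach model predictions to each event as soon as it arrives."""
    for event in events:
        scores = scorer(event)
        # Keep the raw telemetry and add the predicted components next to it.
        yield {**event, **{f"pc{i + 1}": s for i, s in enumerate(scores)}}


def publish(enriched: Iterable[Event], sink: Callable[[Event], None]) -> int:
    """Push every enriched event to a dashboard sink; return how many were sent."""
    sent = 0
    for event in enriched:
        sink(event)
        sent += 1
    return sent
```

Using generators keeps processing event by event: nothing waits for a batch to fill up, which is the property that lets a dashboard update while the player is still driving.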

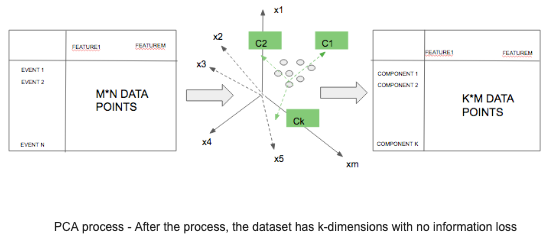

The choice of the ML model was driven by the large number of correlated quantitative features being measured, and the main goal was to obtain a few latent variables that could summarize car performance. PCA (Principal Component Analysis) meets these requirements as a data reduction technique: it captures the variance of a set of features through linear combinations of them (basically weighted sums), creating new, uncorrelated variables called components. Once the model is defined, predictions are computed by submitting each new stream event to the model and extracting the first k components.
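PCA itself is short to write down. This Python sketch with NumPy fits the components on a batch of training data through a singular value decomposition, then projects a single incoming event onto them. It is not H2O's implementation: standardizing each feature before the decomposition is an assumption (a common choice when features have different units, as telemetry does), and the class name `BatchFittedPCA` is hypothetical.

```python
import numpy as np


class BatchFittedPCA:
    """PCA fitted once on a batch of rows, then applied to single events."""

    def __init__(self, k: int):
        self.k = k

    def fit(self, X: np.ndarray) -> "BatchFittedPCA":
        self.mean_ = X.mean(axis=0)
        self.std_ = X.std(axis=0, ddof=1)
        self.std_[self.std_ == 0] = 1.0  # avoid dividing by zero for constant features
        Z = (X - self.mean_) / self.std_
        # Rows of Vt are the principal directions (weights), ordered by variance.
        _, s, Vt = np.linalg.svd(Z, full_matrices=False)
        self.components_ = Vt[: self.k]
        var = s ** 2
        self.explained_variance_ratio_ = var[: self.k] / var.sum()
        return self

    def transform_event(self, x: np.ndarray) -> np.ndarray:
        """Return the first k component scores of one event (1-D feature vector)."""
        z = (x - self.mean_) / self.std_
        return self.components_ @ z
```

Fitting happens once, offline. Scoring an event is one subtraction, one division and a small matrix-vector product, which is cheap enough to run on every event of the stream.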

The PCA model was implemented through our platform's integration with the H2O ML Platform, which allows the model to be called directly inside our platform pipelines. H2O is an open-source machine learning and artificial intelligence platform that lets data scientists build scalable, in-memory ML models through a web UI called Flow.

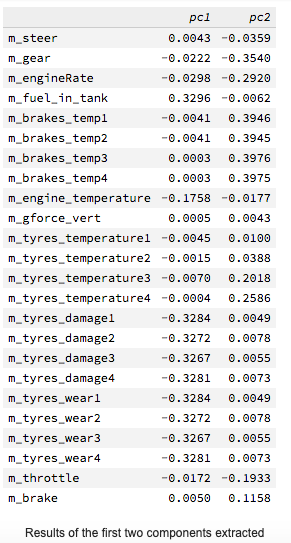

The ML model was trained in batch, and the first two components captured 63% of the variability of the whole training set. The first component can be interpreted as a car performance index and the second as a driving style index, each suitably rescaled from 0 to 100.
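How the components were rescaled from 0 to 100 is not specified. One plausible approach, assumed here, is min-max scaling based on the range of each component's scores on the training set, with clipping so that live events outside that range still land between 0 and 100. `IndexScaler` is a hypothetical name.

```python
import numpy as np


class IndexScaler:
    """Map raw component scores onto a 0 to 100 index (min-max scaling, assumed)."""

    def fit(self, train_scores: np.ndarray) -> "IndexScaler":
        # Per-component range observed on the batch training set.
        self.low_ = train_scores.min(axis=0)
        self.high_ = train_scores.max(axis=0)
        return self

    def transform(self, scores: np.ndarray) -> np.ndarray:
        # Guard against a zero range for a constant component.
        span = np.where(self.high_ > self.low_, self.high_ - self.low_, 1.0)
        scaled = 100.0 * (scores - self.low_) / span
        # Live events can fall outside the training range, so clip to 0..100.
        return np.clip(scaled, 0.0, 100.0)
```

Because min-max scaling is monotonic, it changes only the units of each index, not the ordering of events or the direction in which each feature pushes the score.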

The car performance index is strongly related to tire wear (0.33), engine temperature (0.18) and fuel in the tank (-0.33), so it can express the potential the car has at that moment of the game; the numbers in parentheses are the weights of each feature in the component. The driving style index, instead, is strongly related to brake temperature (0.40), gear (0.35), engine rate (0.29), rear tire temperatures (0.25) and throttle (-0.19), so it can express the skill of the driver while playing.
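The numbers listed above can also be read programmatically. This sketch uses only the values reported here, which are a subset of each component's full weight vector, so `partial_score` computes a partial contribution rather than the full index. The feature names are hypothetical, and event values are assumed to be already standardized. It ranks features by absolute weight and shows how the sign works: a fuel level one standard deviation above average pulls the car performance score down by 0.33 before rescaling.

```python
# Weights as reported in the text (strongest features only, not full vectors).
# Feature names are hypothetical labels for the telemetry fields.
CAR_PERFORMANCE = {"tire_wear": 0.33, "engine_temperature": 0.18, "fuel_in_tank": -0.33}
DRIVING_STYLE = {
    "brake_temperature": 0.40,
    "gear": 0.35,
    "engine_rate": 0.29,
    "rear_tire_temperature": 0.25,
    "throttle": -0.19,
}


def strongest_features(weights, top=3):
    """Return (feature, weight) pairs ordered by absolute weight, largest first."""
    return sorted(weights.items(), key=lambda kv: abs(kv[1]), reverse=True)[:top]


def partial_score(weights, standardized_event):
    """Sum of weight * standardized value over the listed features only.

    Features missing from the event are treated as average (value 0).
    """
    return sum(w * standardized_event.get(name, 0.0) for name, w in weights.items())
```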

Thanks to PCA modeling and our streaming platform, a dashboard shows the car performance index and the driving style index of each new player in real time. Because the components were learned from previous players' sessions, these indices give the user new information grounded in earlier users' experience, computed quickly and automatically.

This use case is an example of streaming machine learning: the algorithm processes real-time data streams and returns results instantly. Our streaming platform speeds up the whole process. Little time passes between raw data collection and the published dashboard, which limits the obsolescence of models trained in batch and gives an instantaneous answer to the stakeholders who need to make decisions.