I’ve known about MXnet for weeks as one of the most popular libraries among Kagglers, but only recently did I hear that most of its bugs have been fixed. Some friends say the latest version looks stable, so at last I installed it.

MXnet: https://github.com/dmlc/mxnet

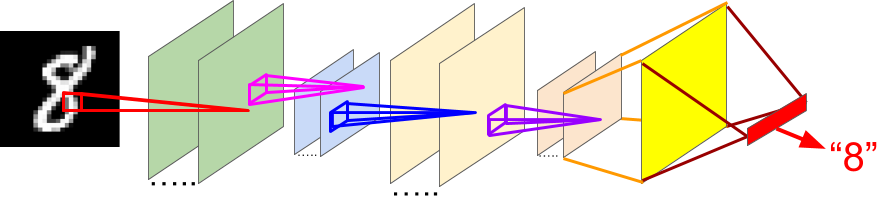

I think the most important feature of MXnet is that it implements not only Deep Neural Networks (DNN) but also Convolutional Neural Networks (CNN) and Recurrent Neural Networks (RNN) in R, because as far as I know there have been no R packages implementing CNN (and/or RNN).

In the original post on my blog, I tried the CNN of the {mxnet} R package on a short version of the MNIST handwritten digit dataset, for which the maximum accuracy may be less than 0.98 because of its small sample size.

As a result, the CNN of {mxnet} achieved an accuracy of 0.976: this is better than Random Forest (0.951), Xgboost (0.953) or DNN by {h2o} (0.962). In addition, the CNN by {mxnet} ran very fast (270 sec), indeed faster than DNN by {h2o} (tens of minutes).

MXnet is a framework distributed by DMLC, the team also known as the distributor of Xgboost. Its documentation now looks complete, and even pre-trained models for ImageNet are distributed. I think this should be good news for R users who love machine learning… so let’s go.

CNN is a variant of Deep Learning that is well known for its excellent performance in image recognition. In particular, since a CNN won ILSVRC 2012, CNNs have become more and more popular in image recognition. The most recent success of CNN would be AlphaGo, I believe.

The original (long) post, with source code, an illustration on a classification problem, and detailed explanations, is here.