Summary: What comes next after Deep Learning? How do we get to Artificial General Intelligence? Adversarial Machine Learning is an emerging space that points to that direction and shows that AGI is closer than we think.

Deep Learning, Convolutional Neural Nets (CNNs) have given us dramatic improvements in image, speech, and text recognition over the last two years. They suffer from the flaw however that they can be easily fooled by the introduction of even small amounts of noise, random or intentional. This phenomenon can result in the CNN misclassifying an image and yet having a very high degree of confidence.

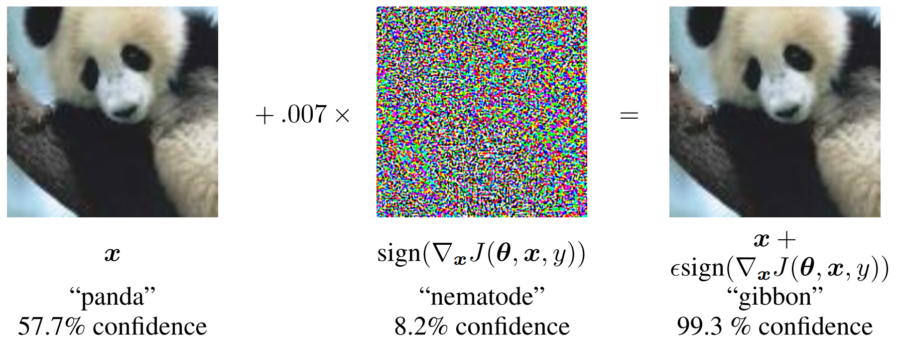

In this example (Szegedy et al., 2014) the addition of a tiny bit of noise to the original image caused the CNN to misclassify this panda as a gibbon with 99% confidence.

Even more concerning, researchers have shown that completely random nonsense images can be misclassified by CNNs with very high confidence as objects recognizable to humans, even though a human would clearly recognize that there was no image there at all (e.g. labeling white noise static as the image of a cat).

What’s the Problem?

As image and text processing become more pervasive in our applications, not only is there the possibility for unintentional error, there is the potential for outright fraud.

Consider two rising security applications, spam filtering and retinal identification. It is possible for a knowledgeable adversary to exploit this weakness to defeat a CNN spam filter. It was originally believed that the spammer would have to have deep knowledge of the original system but it’s recently been shown that even cleverly introduced random noise in the spam message, undetectable to the reader, can be used to defeat CNN spam filters.

Similarly with retinal images. Although retinal images are believed to be highly specific to individuals, how do we know that the CNN is correctly identifying that retinal image is actually yours? An adversary could introduce undetectable amounts of noise in the image resulting in misidentification.

Similarly with retinal images. Although retinal images are believed to be highly specific to individuals, how do we know that the CNN is correctly identifying that retinal image is actually yours? An adversary could introduce undetectable amounts of noise in the image resulting in misidentification.

A third possibility is to intentionally poison say an intrusion detection system. These systems are designed to constantly retrain on the most current system observations. If those system observations are intentionally tainted with noise designed to defeat the CNN recognition, the system will be trained to make incorrect conclusions about whether a malevolent intrusion is occurring.

Adversarial Machine Learning (AML)

Adversarial Machine Learning is an emerging area in deep neural net (DNN) research. Although the problems of noise and misclassification have been recognized for a decade, only in the last 12 to 24 months have major advances been made. It’s important to understand that AML is an emerging area. Industrial strength applications aren’t ready today but they are close.

The Basic Concept

The Basic Concept

The basic concept of AML is to have two separate CNNs battle it out. The first CNN is known as the Discriminator. Its task is to correctly classify the image. The second and adversary CNN is the Generator. Its task is to produce images that will fool the Discriminator. This architecture is often also called Generative Adversarial Networks (GANs).

We’ll stick with image processing for this example. Suppose we want to train our GAN to recognize 18th Century paintings. This is completely unsupervised with the system simply being shown a large sample of these paintings. Remember that CNNs reduce images through a series of layers to simple numerical vectors that describe edges, colors, and the like and can also create images in the reverse process by simply starting with a random selection of these numerical vectors.

As the contest begins, the Generator feeds images which it has created and are therefore false to the Discriminator which sees these images along with true images.

The Discriminator wants to be good at this and optimizes itself not to be fooled by the Generator. The Generator also wants to be good at fooling the Discriminator and optimizes itself to produce images that the Discriminator can’t tell are false. Eventually the Generator produces such realistic images that the Discriminator only has a 50/50 chance of being correct and the GAN optimization is complete.

I’m sure you recognized that the Generator-produced images in this example play the same role as the noise in description of CNN failures and that this procedure effectively trains against the noise.

An Unexpected Bonus – Getting Humans Out of the Process

The current state of AI has advanced to general image, text, and speech recognition, and tasks like steering the car or winning a game of chess. Basically the eyes, ears, arms, and legs of AI, but not yet AGI, Artificial General Intelligence.

Among the barriers to be overcome is the continued requirement for a human data scientist to be involved in the modeling process. CNNs do an excellent job of image processing, but only when they are supervised and given tagged examples from which to learn.

- The first bonus from Adversarial Machine Learning is that the data set is untagged and the process is unsupervised.

- The second bonus is in the design of the loss (fitness) function. The design of an appropriate loss function that basically tells the CNN whether it is getting warmer or colder after each iteration is still a complex task accomplished by data scientists. With AML this is removed and replaced by the evolutionary process of the two systems attempting to optimize against one another.

- The third bonus is interpretability. Up to this point CNNs have been true black boxes with data scientists unable to discern the purpose of each layer or algorithm. AML offers the opportunity to train the layers individually and develop a hierarchy of layers that allow the DS to directly view and understand the role of each layer.

This is as close as we have yet come to having machines learn by themselves without human intervention.

AML Brings Us a Step Closer to Artificial General Intelligence

Now that we are on the brink of achieving unsupervised, self-optimizing machine learning, we are several steps closer to machines that learn like humans from their experience of the world.

Our machine learning to this point shows deductive reasoning (from prediction to observation) and inductive reasoning (from the observation to the generalization). What we don’t have yet is abductive reasoning in which the machine takes incomplete information about the world and takes action based on the likeliest possible explanation for the group of observations, an educated guess.

But AML offers a path forward for the machine to demonstrate human-like prediction and planning based on its own experience of the world. In a thought experiment suggested by Yan LeCun and Soumith Chintala, both doing AI research at Facebook, they propose to show several video frames of a billiards game to their AML and have it learn the rules of physics describing what will happen next – to anticipate. They plan to augment this experiment by training their AML on images set a few inches apart like binocular vision. In essence, to let it learn about the 3D world. From this they expect their AML to develop the ‘common sense’ that, for example, it cannot walk out a door without first opening it or how far someone would have to reach across a table to grasp an object.

Another step down the path toward AGI.

About the author: Bill Vorhies is Editorial Director for Data Science Central and has practiced as a data scientist and commercial predictive modeler since 2001. He can be reached at: