A few years ago, in a Q&A session following a presentation on data analysis (DA) that I gave to a group of college recruits for my consulting company at the time, I was asked to name what I considered the most important analytic technique. My answer, counts and frequencies, surprised the audience, but it was a no-brainer for me.

I’ve made a living doing data analysis over the years. My variant of DA is a direct descendant of the exploratory data analysis (EDA) movement that emerged in the academic world 50 years ago as a reaction to the hyper-mathematization of statistical science. As a counterweight, the EDA approach sought to put basic data examination with simply understood tools front and center, at the expense of complex models whose assumptions were often dubious.

While there’s no precise definition of EDA, one characterization I like is: “EDA is an approach to data analysis that postpones the usual assumptions about what kind of model the data follow with the more direct approach of allowing the data itself to reveal its underlying structure and model.” In other words, EDA digs into the data, simply examining variables alone and in tandem.

In my work, DA starts with an extensive look at data distributions, both within and between attributes of interest. Measures of central tendency, order statistics/quantiles, density functions, outlier/anomaly detection, missing values, correlations, etc. are critical, as are the visualizations that support these techniques, such as dotplots, barplots, histograms, stripplots, boxplots, violinplots, densityplots, scatterplots, heatplots, and binplots. A trellis, or small-multiples, capability adds the ability to view distributions and relationships “by” values of additional attributes.

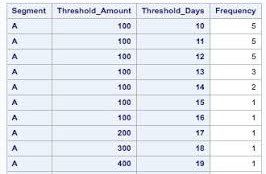

For all this, however, the most important DA technique for my money is the distribution of frequencies and counts, both uni- and multi-dimensional. This holds for all data types, including character, category, and numeric.
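To make "uni- and multi-dimensional" counts concrete, here is a minimal Python sketch using only the standard library. It is an illustration, not one of the R functions discussed in this post; the `frequencies` helper, the records, and the field names (`type`, `year`) are all made up for the example.

```python
from collections import Counter

# Hypothetical records; field names and values are illustrative only.
records = [
    {"type": "THEFT", "year": 2019},
    {"type": "BATTERY", "year": 2019},
    {"type": "THEFT", "year": 2020},
    {"type": "THEFT", "year": 2019},
]


def frequencies(rows, *attrs):
    """Count rows by the given attributes and attach each count's percent of total."""
    counts = Counter(tuple(row[a] for a in attrs) for row in rows)
    total = sum(counts.values())
    # most_common() returns the groups sorted by descending count.
    return [(key, n, 100.0 * n / total) for key, n in counts.most_common()]


one_way = frequencies(records, "type")          # one-dimensional counts
two_way = frequencies(records, "type", "year")  # two-dimensional counts
```

The same helper handles any number of dimensions because each group key is simply a tuple of the requested attribute values.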

I’ve spent a fair amount of time and effort building frequency tabulation tools in R, Python-Pandas, and Julia. What follows are some of my current illustrations in R. The code snippets below, featuring functions freqsdt and allfreqs, revolve around the outsized capabilities of the splendid R data.table package, which routinely supports my work with 25+ GB of data on a notebook. data.table is all about power, speed, and size for data analysis. Indeed, the emergence of data.table, along with the complementary tidyverse ecosystem, has revolutionized analysis in R. And though I love Python-Pandas, I wouldn’t be at all surprised if the Python version of data.table soon becomes a formidable competitor.

The workhorse freqsdt function drives most of the illustrations that follow. Building on data.table’s query and grouping metaphor, freqsdt takes two string arguments: the first, a valid data.table name that can include a filter; the second, a comma-delimited string of attribute names that can include dynamically created variables. The function assembles these into a single query string, which R’s eval-parse combo then executes. This combination, detailed below, provides a comprehensive, multi-dimensional frequency-generating interface.
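The idea of a frequency interface driven by two strings can be sketched in Python as well. This is a loose analogue under stated assumptions, not the freqsdt implementation: the function name `freqs_from_strings`, its parameters, and the sample rows are hypothetical, and Python's built-in `eval` stands in for R's eval-parse.

```python
from collections import Counter


def freqs_from_strings(rows, filter_expr, attr_exprs):
    """Hypothetical string-driven frequency helper (not the R freqsdt function).

    rows        : list of dicts, one per record
    filter_expr : Python expression evaluated per row ("" keeps every row)
    attr_exprs  : comma-delimited expressions to group by; an expression
                  that itself contains a comma would be split incorrectly
    """
    # Compile each string once; eval() then resolves names from the row dict.
    # eval() runs arbitrary code, so only pass strings you trust.
    keep = compile(filter_expr or "True", "<filter>", "eval")
    keys = [compile(e.strip(), "<attr>", "eval") for e in attr_exprs.split(",")]
    counts = Counter()
    for row in rows:
        if eval(keep, {}, row):
            counts[tuple(eval(k, {}, row) for k in keys)] += 1
    total = sum(counts.values())
    return [(key, n, round(100.0 * n / total, 2)) for key, n in counts.most_common()]


# Made-up rows for illustration only.
rows = [
    {"type": "THEFT", "year": 2019},
    {"type": "THEFT", "year": 2020},
    {"type": "BATTERY", "year": 2019},
    {"type": "THEFT", "year": 2021},
]

# Filter to thefts, then group by type and a variable computed on the fly.
result = freqs_from_strings(rows, "type == 'THEFT'", "type, year >= 2020")
```

Passing expressions as strings keeps the calling interface compact, at the cost of evaluating text as code, which is reasonable for interactive analysis but not for untrusted input.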

To illustrate freqsdt and allfreqs, I use the trusty Chicago Crime 2001-present data set, which “reflects reported incidents of crime (with the exception of murders where data exists for each victim) that occurred in the City of Chicago from 2001 to present, minus the most recent seven days.” The daily data are prepared by a separate process that writes a portable “feather” file from the downloaded CSV; that file is subsequently available to R, Python-Pandas, and Julia. Alas, there are in excess of 7M records (1+ GB RAM) pertaining to the what, where, and when of Chicago crime over 20 years. Most analyses with this data involve counts, or frequencies, across one or more attribute dimensions.

The supporting platform is a Wintel 10 notebook with 128 GB RAM, running JupyterLab 1.2.4 and R 3.6.2. The R data.table, tidyverse, pryr, plyr, feather, and knitr packages are featured.
