Let’s be honest: The capabilities of Generative AI (GenAI) tools like OpenAI’s ChatGPT, Microsoft Copilot, and Google Gemini are truly amazing. In the right hands, these tools can act as tireless research assistants, continuously learning and adapting as you explore and research new topics or refine your understanding of existing topics.

However, significant concerns remain due to a lack of transparency in how these GenAI tools generate their outputs. These GenAI tools are “black boxes,” with internal decision-making processes that are opaque to users. This lack of transparency can lead to mistrust and hesitancy in adopting AI technologies. Users want to understand the rationale behind the information provided before they trust and fully embrace these advanced tools.

An AI Utility Function could serve as a transparent framework explaining the rationale behind the insights generated by GenAI tools. The AI Utility Function would detail the variables and associated weights influencing the AI model’s recommendations.

Despite these benefits, AI Utility Functions are not widely used today for several reasons.

- Firstly, the complexity of the AI models and their learning processes makes it challenging to distill their decision-making processes into a simple, understandable format.

- Secondly, there is a lack of standardized methods for creating and implementing an AI Utility Function that can be universally applied across different AI systems (Samek, Wiegand, & Müller, 2017).

- Finally, there are concerns about intellectual property and competitive advantage, as companies may be reluctant to disclose the inner workings of their AI models.

However, studies have indicated that implementing transparency mechanisms like AI Utility Functions can lead to better user engagement and more informed decision-making (Hind et al., 2019). For instance, research by Ribeiro, Singh, and Guestrin (2016) introduced the LIME (Local Interpretable Model-agnostic Explanations) framework, which aims to explain the predictions of any classifier in an interpretable manner. Similarly, research by Lundberg and Lee (2017) on SHAP (SHapley Additive exPlanations) values has shown promise in making model outputs more interpretable.

An AI utility function can demystify the AI’s decision-making process by detailing the variables and weights that influence AI recommendations. This helps users understand why a particular recommendation was made and provides insights into how the AI prioritizes different factors, building trust and driving the adoption of these GenAI tools.

What is the AI Utility Function?

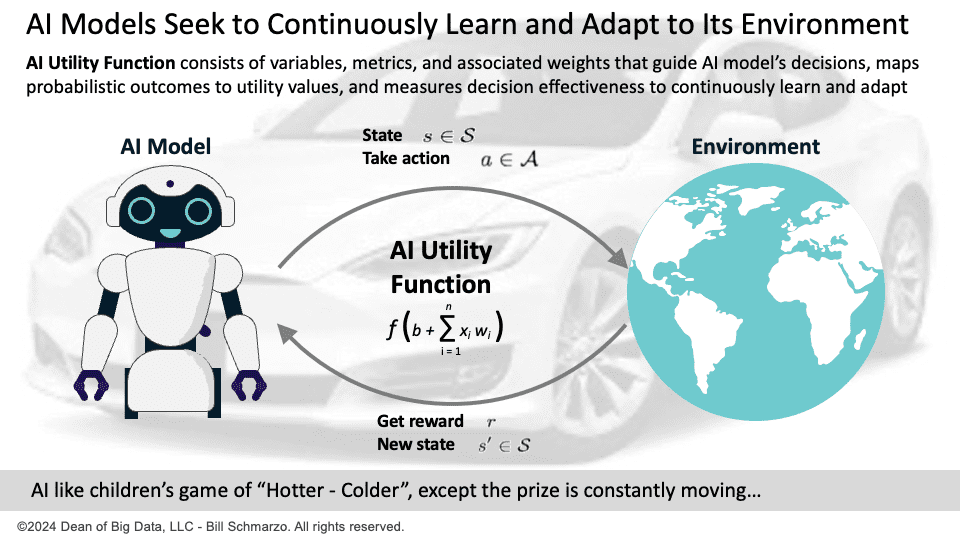

The AI Utility Function is a transparent framework outlining the variables, metrics, and associated weights influencing an AI model’s actions, decisions, and recommendations.

Conceptually, the AI Utility Function makes the decision-making process of an AI system explicit. It incorporates variables such as the context of the user’s query, historical interactions, the credibility of sources, and the relevance to the user’s stated preferences. By identifying and detailing these variables, the AI Utility Function provides a structured way to understand how AI models generate recommendations. This transparency helps users appreciate the nuances of AI decision-making and fosters greater trust in AI outputs.

Each variable is assigned a specific weight that reflects its significance in decision-making. For instance, the context of the user’s query might be given more weight than historical interactions if the AI system prioritizes real-time relevance. Similarly, the credibility of sources can be weighted heavily to ensure that recommendations are based on trustworthy information. The decision logic then combines these variables and their weights to influence the AI’s recommendations. By explicitly defining this logic, users can understand why certain information is recommended and how different factors are balanced to arrive at a final output.
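As a minimal sketch of the decision logic described above, the combination of variables and weights can be expressed as a weighted sum. The `Factor` class, the `utility` and `explain` functions, and every weight and score below are illustrative assumptions, not a description of how any particular GenAI tool actually works.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Factor:
    """One variable in a hypothetical AI Utility Function."""
    name: str
    weight: float  # relative importance; all weights should sum to 1
    score: float   # how well a candidate answer satisfies this factor, in [0, 1]


def utility(factors):
    """Combine the factors into a single score using a weighted sum."""
    total_weight = sum(f.weight for f in factors)
    if abs(total_weight - 1.0) > 1e-9:
        raise ValueError(f"weights must sum to 1, got {total_weight}")
    return sum(f.weight * f.score for f in factors)


def explain(factors):
    """Return (name, contribution) pairs, largest contribution first."""
    contributions = [(f.name, f.weight * f.score) for f in factors]
    return sorted(contributions, key=lambda pair: pair[1], reverse=True)


# Assumed weights and scores, chosen only for illustration.
candidate = [
    Factor("query_context", weight=0.4, score=0.9),
    Factor("source_credibility", weight=0.3, score=0.8),
    Factor("stated_preferences", weight=0.2, score=0.5),
    Factor("historical_interactions", weight=0.1, score=0.2),
]

print(f"utility = {utility(candidate):.2f}")
for name, contribution in explain(candidate):
    print(f"{name}: {contribution:.2f}")
```

Reporting the per-factor contributions alongside the final score is what would let a user see why one recommendation ranked above another.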

AI Utility Function User Benefits

Investing the time to integrate an AI Utility Function into your GenAI tool pays off in ways that drive the use and adoption of these tools. Key benefits include:

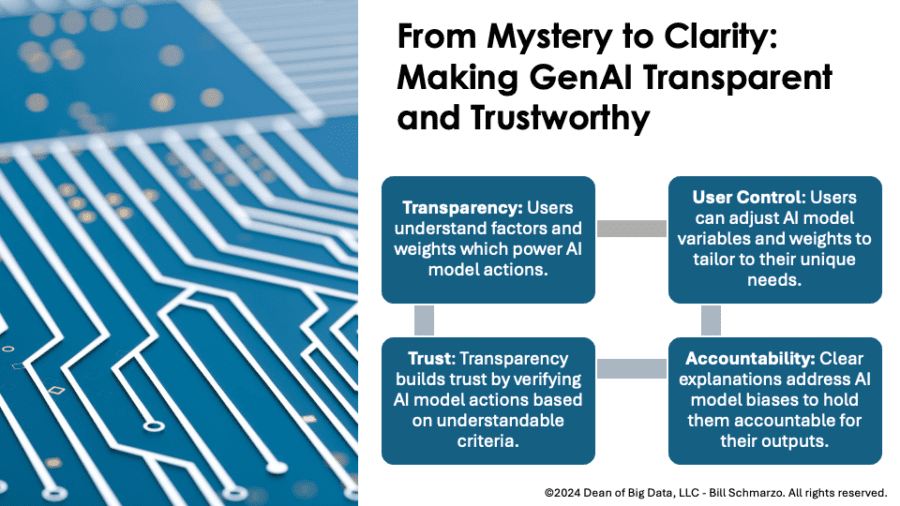

- Transparency: Users can understand the factors influencing the AI model’s recommendations, which demystifies the AI model’s decision-making process. This clarity lets users see how their inputs and the AI’s internal logic combine to produce specific outputs.

- Trust: Enhanced transparency can build confidence in AI systems by showing users that recommendations are based on clear and understandable criteria. When users know how decisions are made, they will likely feel more confident in the AI model’s reliability and fairness.

- Accountability: Clear explanations can help hold AI models accountable for their outputs by making errors or biases easier to identify and address. This accountability ensures that AI models can be audited and improved based on transparent criteria.

- User Control: Users might be able to adjust the variables or weights to better tailor the AI model’s responses to their needs, providing a more personalized experience. This level of control empowers users to optimize the AI’s performance according to their specific preferences and requirements.
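To illustrate the User Control benefit, here is a rough sketch of how a user-facing setting might change one weight while keeping the weights normalized. The `adjust_weight` helper and the default weights are hypothetical and exist only for this example.

```python
def adjust_weight(weights, name, new_value):
    """Set one weight and rescale the others proportionally so the total stays 1.

    Returns a new dict; the original weights are left untouched.
    """
    if name not in weights:
        raise KeyError(name)
    if not 0.0 <= new_value <= 1.0:
        raise ValueError("new_value must be between 0 and 1")
    others_total = sum(w for key, w in weights.items() if key != name)
    if others_total == 0:
        raise ValueError("cannot rescale: all other weights are zero")
    scale = (1.0 - new_value) / others_total
    return {
        key: new_value if key == name else w * scale
        for key, w in weights.items()
    }


# Assumed defaults, for illustration only.
default_weights = {
    "query_context": 0.4,
    "source_credibility": 0.3,
    "stated_preferences": 0.2,
    "historical_interactions": 0.1,
}

# A user who cares most about trustworthy sources raises that weight to 0.5.
custom_weights = adjust_weight(default_weights, "source_credibility", 0.5)
for name, weight in custom_weights.items():
    print(f"{name}: {weight:.3f}")
```

Proportional rescaling is only one possible design choice. It preserves the relative ordering of the untouched variables, which keeps the effect of a user's change easy to explain.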

Potential Implementation Impediments

But as with all things, mandating an AI Utility Function is not without its challenges:

- Complexity: The underlying algorithms and decision processes of AI models like ChatGPT are highly complex, making it challenging to simplify these for transparency without losing accuracy. Striking a balance between comprehensibility and maintaining the model’s sophisticated capabilities is a significant hurdle.

- Dynamic Nature: AI models are retrained and updated over time, meaning the utility function must be adaptable to reflect these changes. Ensuring that the transparency framework evolves in tandem with the AI model adds a layer of complexity to its implementation.

- Privacy Concerns: Transparency should not compromise user privacy or expose sensitive data, which can be challenging to manage when detailing the variables and weights influencing AI decisions. Safeguarding user information while providing meaningful transparency is a critical challenge that must be addressed.

- Technical Implementation: Developing a transparent utility function would require collaboration between AI developers, ethicists, and user experience designers to ensure its effectiveness and ethicality. This interdisciplinary effort is necessary to create a utility function that is technically robust and user-friendly while adhering to ethical standards.

- User Education: Users would need some education to understand the utility function and its implications, which could involve training or resources to help them interpret and utilize the provided transparency. Ensuring users can effectively engage with the utility function is essential for its success.

- Regulatory Support: Regulatory guidelines may be needed to ensure transparency standards are met, providing a framework for consistency and accountability across different AI systems. Such regulations would help establish industry-wide norms and protect users from potential misuse of AI technologies.

Example: GenAI-powered Financial Analyst

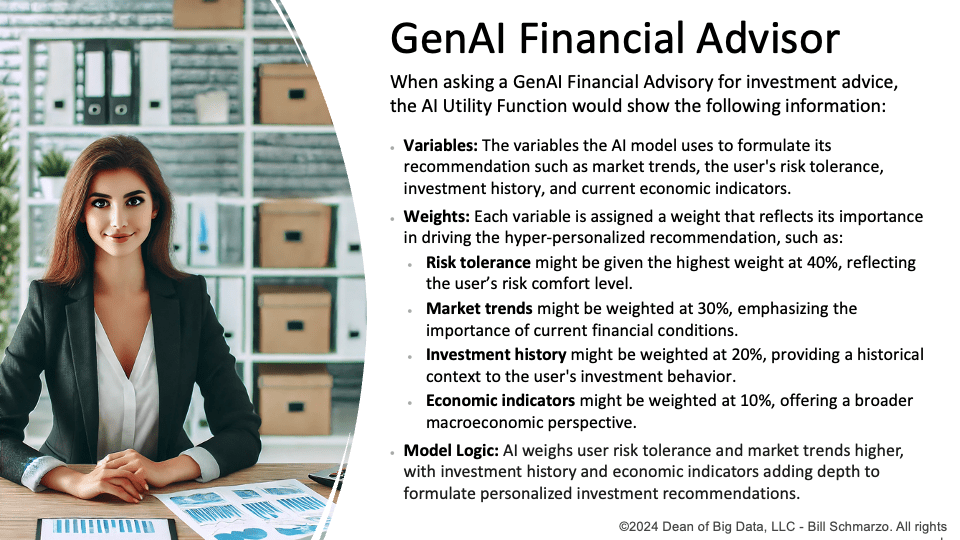

I’m not trying to put Financial Analysts out of business, but let’s review a real-world scenario using an AI Utility Function with a GenAI tool. Imagine a customer asking ChatGPT for investment advice. In this scenario, the AI Utility Function could show the following components behind its financial investment recommendations:

- Variables: The AI considers several vital variables to formulate its recommendation, including market trends, the user’s risk tolerance, investment history, and current economic indicators. These variables are crucial for tailoring advice that aligns with the user’s financial goals and the prevailing economic conditions.

- Weights: Each variable is assigned a weight that reflects its importance in decision-making. For example, risk tolerance is given the highest weight at 40%, as it is essential to align recommendations with the user’s comfort level regarding potential losses and gains. Market trends are weighted at 30%, emphasizing the importance of current financial conditions. Investment history is weighted at 20%, providing a historical context to the user’s investment behavior. Lastly, economic indicators are weighted at 10%, offering a broader macroeconomic perspective.

- Decision Logic: The AI’s recommendation is predominantly influenced by the user’s risk tolerance and current market trends, as indicated by their higher weights. This ensures that the advice is personalized and relevant to current market conditions. Investment history and economic indicators play a supporting role, adding depth and context to the recommendation. For instance, the AI might suggest more aggressive investment options if the user’s risk tolerance is high and market trends are favorable. Conversely, even a user with high risk tolerance might receive more conservative advice if market trends are volatile.

By clearly outlining these elements, the AI Utility Function provides transparency into how the AI arrived at its recommendation. Users can see how their risk tolerance and market conditions influence the advice, with additional insights from their investment history and broader economic trends. This transparency helps users understand the rationale behind the recommendation, fostering trust and enabling more informed decision-making.
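The financial example above can be sketched in a few lines of code. Only the four weights come from the example. The signal values, the thresholds, and the function names `score_profile`, `recommend`, and `breakdown` are assumptions made for illustration, and nothing here is actual investment logic.

```python
# Weights taken from the example above; everything else is an assumption.
WEIGHTS = {
    "risk_tolerance": 0.40,
    "market_trends": 0.30,
    "investment_history": 0.20,
    "economic_indicators": 0.10,
}


def score_profile(signals):
    """Weighted sum of signals in [0, 1]; higher favors more aggressive advice."""
    return sum(WEIGHTS[name] * signals[name] for name in WEIGHTS)


def recommend(signals, aggressive_at=0.70, balanced_at=0.40):
    """Map the score to a coarse recommendation using hypothetical thresholds."""
    score = score_profile(signals)
    if score >= aggressive_at:
        return "aggressive"
    if score >= balanced_at:
        return "balanced"
    return "conservative"


def breakdown(signals):
    """Share of the final score contributed by each variable."""
    score = score_profile(signals)
    if score == 0:
        return {name: 0.0 for name in WEIGHTS}
    return {name: WEIGHTS[name] * signals[name] / score for name in WEIGHTS}


# Hypothetical user with high risk tolerance in two market conditions.
favorable = {
    "risk_tolerance": 0.9,
    "market_trends": 0.9,
    "investment_history": 0.5,
    "economic_indicators": 0.5,
}
volatile = {**favorable, "market_trends": 0.2}

for label, signals in [("favorable", favorable), ("volatile", volatile)]:
    print(label, f"{score_profile(signals):.2f}", recommend(signals))
    for name, share in breakdown(signals).items():
        print(f"  {name}: {share:.0%} of score")
```

With these assumed inputs, the favorable market scores 0.78 and maps to "aggressive", while the volatile market scores 0.57 and maps to "balanced". This mirrors the point that even a user with high risk tolerance might receive more conservative advice when market trends are volatile.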

Conclusion

The AI Utility Function offers a transformative approach to making GenAI tools like ChatGPT more transparent and trustworthy. By clarifying the variables, weights, and decision logic behind GenAI tool recommendations, we can foster greater confidence and more informed decision-making.

I urge developers, businesses, and policymakers to adopt transparent AI frameworks, bridging the gap between users and AI technologies. Let’s advocate for transparency—share this blog, initiate conversations, and push for regulatory guidelines that promote ethical and effective AI use. Together, we can transform the mystery of AI into mastery for the benefit of all.